Generate AI Art Using Your Own Writing

One of the most challenging parts of finishing a post is coming up with a good image.

The AI art tools like DALL-E, Midjourney, and Stable Diffusion are cool, but a lot of the magic comes down to prompt engineering, which is non-trivial.

Prompt engineering is currently almost equal parts art and science.

In other words, AI might be able to make you a cool piece of art, but only if you tell it how (and in very specific and strange ways).

But what if you could tell it how automatically, just based on the text of what you wrote?

If prompt engineering is the hard part, then let’s use AI to write the prompts.

Your writing was creative already, so let that do the work.

That’s the idea I became obsessed with last night, and I was able to get a working proof of concept in less than 30 minutes! This stuff is just nuts.

How to build it

Ok, as I said in the tweet, there are a few key steps:

Summarize the text

Turn that into a prompt

Create an image from the prompt

My technique (and be gentle, because it’s a very quick POC) is to combine the first two steps into a few-shot learning template. For anyone who just WTF’d, few-shotting is basically showing an AI the right answer a few times and then giving it a similar problem with no answer.

So I wanted the template to both 1) train the AI to write a prompt, and 2) feed it my text to build the prompt from. I did that by mixing those two in the Summary portion of the template.

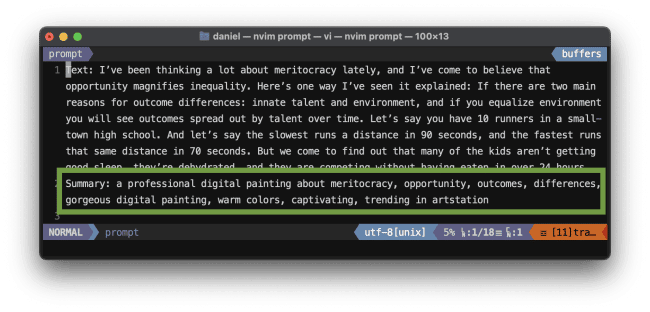

One of the training shots, showing the mixture of prompt and content summarization

You can tweak this language to make it match your blog.

Specifically, the:

a professional digital painting about…

…was static in all the summaries I trained it with. And so was:

gorgeous digital painting, warm colors, captivating, trending in artstation…

Which means when I ask this tool for the real (non-training) prompt—using my new text—I don’t have to add those things!

Training the prompter

Some guru will be able to show me a better way, no doubt.

So that’s pretty much it. Each training shot needs the text to be summarized, marked with a label (I used Text), followed by the summary you want as output on a separate line (I used Summary). Then, as your final step, send the new text you want summarized and leave its Summary blank; what the model fills in is the completed prompt.

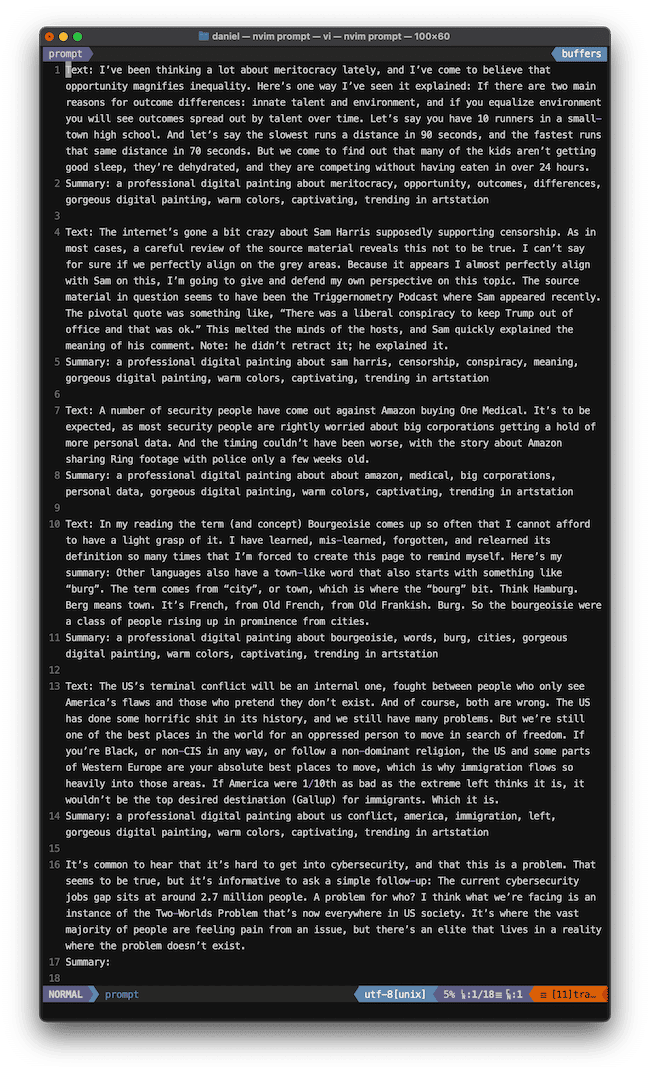

Here’s what the whole promt looks like, with all the "Text:" portions being taken from other blog posts of mine:

The full series of Text/Summary training scenarios
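If you'd rather read the template as code than as a screenshot, here is a minimal Python sketch of the Text/Summary layout described above. The label words and the static art phrases come from this post; the function names, the placeholder training shots, and the blank-line separator between shots are my own assumptions, not necessarily what the real template uses.

```python
# Static styling taken from the post; everything else here is illustrative.
PREFIX = "a professional digital painting about"
SUFFIX = "gorgeous digital painting, warm colors, captivating, trending in artstation"


def make_summary(terms):
    """Wrap content terms in the static art-style prefix and suffix."""
    return f"{PREFIX} {', '.join(terms)}, {SUFFIX}"


def build_few_shot_prompt(shots, new_text):
    """Join solved Text/Summary pairs, then add the new text with a blank Summary."""
    blocks = [
        f"Text: {text}\nSummary: {make_summary(terms)}"
        for text, terms in shots
    ]
    # The final shot has no answer, so the model completes it.
    blocks.append(f"Text: {new_text}\nSummary:")
    return "\n\n".join(blocks)


# Placeholder shots; real ones would be excerpts from earlier posts.
shots = [
    ("(opening of an earlier post)", ["first term", "second term"]),
    ("(opening of another post)", ["third term", "fourth term"]),
]
prompt = build_few_shot_prompt(shots, "(opening of the new post)")
print(prompt)
```

Because the prefix and suffix appear in every solved shot, the model tends to reproduce them around whatever terms it pulls from the new text.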

Remarkably solid prompt quality

Using this technique I was able to generate some pretty decent prompts, which surprised me. Here’s the opening of a blog post I did recently, and I wanted to see if this technique could create a decent prompt for it.

It’s common to hear that it’s hard to get into cybersecurity, and that this is a problem. That seems to be true, but it’s informative to ask a simple follow-up: The current cybersecurity jobs gap sits at around 2.7 million people. A problem for who? I think what we’re facing is an instance of the Two-Worlds Problem that’s now everywhere in US society. It’s where the vast majority of people are feeling pain from an issue, but there’s an elite that lives in a reality where the problem doesn’t exist.

That’s not the whole article, of course, but it’s already got some decent stuff to key off of. So how did this trainer do? Here’s the prompt it created:

a professional digital painting about cybersecurity, jobs gap, two-worlds problem, pain, elite, reality, gorgeous digital painting, warm colors, captivating, trending in artstation

Seriously?!?! That’s stunning. So not only did it keep my DALL-E 2 prompt stuff in there, but it also added the summary nested in the middle!

So it extracted these terms from the text: cybersecurity, jobs gap, two-worlds problem, pain, elite, reality, and then it seamlessly blended them into the specific art styling I’ve told it I want for my site.

You can tell which is which by the icons.

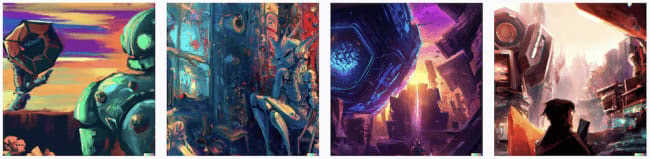

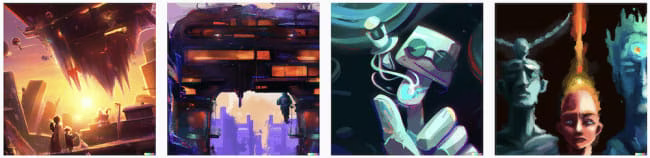
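If you want to confirm mechanically that a generated prompt kept the static styling, and pull out just the terms the model extracted, something like the following Python sketch would do. The function name is hypothetical; the prompt string is the one shown above.

```python
# Static styling from the post's training summaries.
PREFIX = "a professional digital painting about"
SUFFIX = "gorgeous digital painting, warm colors, captivating, trending in artstation"


def extract_terms(generated):
    """Return the content terms if the styling survived intact, else None."""
    generated = generated.strip()
    head = PREFIX + " "
    tail = ", " + SUFFIX
    if not (generated.startswith(head) and generated.endswith(tail)):
        return None
    middle = generated[len(head):len(generated) - len(tail)]
    return [term.strip() for term in middle.split(",") if term.strip()]


generated = (
    "a professional digital painting about cybersecurity, jobs gap, "
    "two-worlds problem, pain, elite, reality, gorgeous digital painting, "
    "warm colors, captivating, trending in artstation"
)
print(extract_terms(generated))
```

A `None` result is a cheap signal that the model drifted from the template and the shot should be rerun.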

So I’m pretty excited at this point, but now for the big question. How do the images look based on this prompt? Here are some of the outputs of running this prompt on DALL-E, Midjourney, and Stable Diffusion.

Output from three different AI-engines using the GPT-3-created Prompts

Are you f-ing kidding me? This is ridiculously good.

Oh, and you know what? I’ve not even begun, because I can comb through all the different art styles that these engines can make, and I can add a particular style I like to the prompt training. Actually I have multiple ideas there:

Hardcode the art style you want for your blog

Hardcode a color scheme

In your custom prompt creator tool, factor in the content for the art style!

Add a custom art call to the tool, so you can ask for different art styles based on mood

Creating a simple utility, bac

I’m a bit over-stimulated right now, but here’s what I’ve done so far to make this kind of slick and usable. I’ve created a simple utility called Blog Art Creator (bac), which sounds fancy but is nothing but a silly shell script.

I have the training scenarios in a single file, training.txt.

I save my new content to another file, content.txt.

I have the final prompt in another file, prompt.txt.

The utility just concatenates text, so it combines the training data, your content, and the prompt, and sends all of that to GPT-3. I use this CLI tool within my script:

gpt3 --engine text-davinci-001 --temperature 0.75 --freq-penalty 1 --pres-penalty 1

Which leaves us with this for the, um, "code".

A dirty, no-good hack

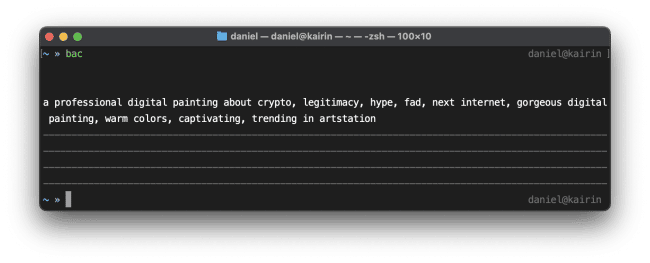

So then I just run bac.

bac
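The real bac is a shell script that only appears here as a screenshot, so as a rough stand-in here is a Python sketch of the same concatenate-and-send flow. The three file names and the CLI flags are the ones from this post (flags written with double hyphens). The function names, the newline used to join the files, and the assumption that the gpt3 tool accepts the prompt as its final argument are mine and may not match the actual script or tool.

```python
import subprocess
from pathlib import Path

# Flags copied from the command shown in the post.
GPT3_COMMAND = [
    "gpt3", "--engine", "text-davinci-001", "--temperature", "0.75",
    "--freq-penalty", "1", "--pres-penalty", "1",
]


def assemble_prompt(directory="."):
    """Concatenate training.txt, content.txt, and prompt.txt, in that order."""
    names = ("training.txt", "content.txt", "prompt.txt")
    parts = [
        Path(directory, name).read_text(encoding="utf-8").strip()
        for name in names
    ]
    return "\n".join(parts)


def run_cli(full_prompt):
    """ASSUMPTION: the CLI takes the prompt as its last positional argument."""
    result = subprocess.run(
        GPT3_COMMAND + [full_prompt],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def bac(directory=".", complete=run_cli):
    """Build the full prompt and hand it to a completion function."""
    return complete(assemble_prompt(directory))
```

Calling `bac()` from the folder holding the three files would return the generated art prompt. Passing a different `complete` function lets you swap in another client, or a fake one for testing, without touching the file handling.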

Now, as you know, you can create some pretty lame-looking stuff if you don’t do your prompts right. So let’s test this with a brand new prompt from a much earlier, random blog post.

People are hyped about the metaverse, and it’s honestly understandable. First, we’re going through some shit as a species right now. Social tension, the best part of Star Wars’ last three movies were the previews, and there’s a global pandemic. Part of this metaverse hype is just being excited to be excited, and I get it. Metaverse lore has lots of fun stuff in it, from AR/VR to headsets to avatars to moving between worlds. But I think we can reduce the metaverse to one concept and two axes. The metaverse is about experiencing a better life than in meatspace. Most important to this is content. Second most important to this is interface. Content: Content is life. It’s the source of meaning. It’s what provides us with evolution’s nectar of happiness in the form of hormone releases when we struggle and achieve something.

Which produced this prompt:

a professional digital painting about the metaverse, hype, understandable, exciting, better life, content, interface, gorgeous digital painting, warm colors, captivating, trending in artstation

Running the bac command and getting output

And created these images from DALL-E in the first two runs.

In. Sane.

I will absolutely be using this for my own stuff going forward.

Next steps

What I’ll work on immediately is breaking apart the art styling from the content summarization and tagging. Basically I want to be able to change the art in a separate file and have that get added into all the training shot strings separately from the labeling as part of the bac utility. Same with the labeling.

So actually:

Break apart the art styling so I can change it without editing training.txt

Create a parser for going to get content from my site, either randomly or by search term, and implement that as command switches to bac

Add the automatic art switching based on terms found in the content, e.g., create "dramatic" and "serious" art if the content summary contains "depression", "war", "future", etc, with a more moody color scheme as well. Implement those as switches too.
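To make the first and third items a bit more concrete, here is one possible shape for them in Python. This is only a sketch under my own assumptions: the "default" style is the one used throughout this post, the "serious" style strings and all function names are invented for illustration, and the trigger words are the three examples listed above.

```python
# Styles live apart from the training text, so they can change independently.
# Only "default" comes from the post; "serious" is an invented example.
STYLES = {
    "default": {
        "prefix": "a professional digital painting about",
        "suffix": "gorgeous digital painting, warm colors, captivating, trending in artstation",
    },
    "serious": {
        "prefix": "a dramatic, serious digital painting about",
        "suffix": "moody color scheme, captivating, trending in artstation",
    },
}

# Trigger words taken from the example in the list above.
SERIOUS_TRIGGERS = {"depression", "war", "future"}


def pick_style(summary_terms):
    """Choose a style name based on words found in the summary terms."""
    words = {word for term in summary_terms for word in term.lower().split()}
    return "serious" if words & SERIOUS_TRIGGERS else "default"


def style_prompt(terms):
    """Wrap terms in whichever style their content calls for."""
    style = STYLES[pick_style(terms)]
    return f"{style['prefix']} {', '.join(terms)}, {style['suffix']}"


def render_shot(text, terms, style_name="default"):
    """Render one style-free training shot using the named style."""
    style = STYLES[style_name]
    summary = f"{style['prefix']} {', '.join(terms)}, {style['suffix']}"
    return f"Text: {text}\nSummary: {summary}"


print(style_prompt(["the metaverse", "hype", "better life"]))
print(style_prompt(["the future of work", "automation"]))
```

Keeping the training shots as plain text-plus-terms pairs means a style change never requires editing training.txt, and the same lookup could later be driven by a command switch.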

End of POC

Well I’m stoked. I can’t wait to work on this more tonight, and I really hope you enjoy messing with the ideas and finding tons of ways to improve it. And share it out or hit me up if you want to hack on upgrades/variations.

Notes

Next steps for me would also include automating the submission to the image generation services, but that’s a bit kludgy right now. I’ll add that to the automation once it’s available and/or easier to submit to and get results from.