We're All in Fractal Microcults

We now have an infinite number of ways to strongly disagree with someone

I just finished listening to an extraordinary episode of Making Sense with Sam Harris, where Roger McNamee was the featured guest. He is a long-time tech analyst and investor, and actually used to be an advisor for Facebook before becoming an outspoken critic.

Roger’s points were legion, and covered the now familiar ground regarding the collection of our data by Facebook, Google, and the hordes of data brokers who assemble and sell curated profiles on all of us. It was a good summary of the scope and impact, but it was covered territory for anyone in infosec or privacy.

I’m not sure he was correct about Google’s malicious motives in creating GMail, but it sure was an interesting narrative. I took it with a bit of skepticism, though.

He talked about how Google had search, but realized they needed to know more about the people searching—so they invented GMail and scanned every email for data about you and your preferences. He says they then realized they needed your location too, so they created Google Maps. It was quite a Mr. Burns-esque picture he painted.

To me, the most interesting point he made was how strange it is that nobody is challenging these companies for doing what they’re doing. He asked how this became their data in the first place. Who gave all these data brokers authorization to sell your data? How did it become theirs when we never entered into an agreement with them? It was a fascinating question.

"Data is the new oil." is a popular sentiment in tech right now.

His argument reminded me of oil. Oil comes from the sun’s energy stored in ancient organic material, mostly marine plankton and algae. Who owns the sun’s energy? Well, according to current laws, whoever has the resources to find it first, and to claim ownership of a location and method of extraction.

What if I told you that the vast majority of your privacy risk comes not from the seedy darkweb, but from completely legal data brokers?

That’s precisely what Google, Facebook, and all these various data brokers have done with our data. They’ve taken our collective sun—which is the fundamental data about our lives—and claimed it as their own.

With the first-hop services like Google and Facebook you probably sign away your rights in the agreement, but I wouldn’t know because I’ve never read it. And neither have you.

They own it. They can sell it. And they can do so without us even knowing. If you want recourse, sure—take some time off of work and they’ll show up with 37 lawyers prepared to battle you for 20 years if necessary, which would cost you thousands of dollars a month.

The point isn’t that we can’t fight big companies—the point is that we’re not even realizing it’s profoundly strange for someone to own sunlight, or the personal data about billions of humans.

Anyway, all this was interesting, but Sam (thankfully) kept pulling Roger out of the weeds and back to the important questions. Namely, what went wrong in 2016? What is wrong with Facebook claiming it’s a platform and not a media company?

Sam was operating with his default state of Good Faith to both sides, even though only one was present. He could see—as can I and many others—that there’s a difference between being a platform for conversation and being a media company, and he was reluctant to blame the platforms fully for the misinformation campaigns that have become so common.

The real question that Sam was asking was excellent, which is basically:

Why not draw the line at the First Amendment and be done with it? Why contort yourself into knots over infinite nuance and interpretation when there’s already a (somewhat) clear backstop in the form of the Constitution?

This was specifically his question for Twitter.

I think that’s brilliant, and I think the answer is obvious from the way Twitter is handling these issues. They see the impact of not taking action against harmful memes and viral hate speech as being far worse than taking action, and I have to say that in many of the cases I’ve heard about, I agree.

What this highlights is simply that things have changed. It reminds me a lot of Sam’s recent conversation with Nick Bostrom, actually. These platforms might end up being the first black ball that we pull from the urn, and it might require that we exert a whole lot more control over what can be said. That frightens me greatly as well, since I think we’re equally unready to exert that level of protective control without it becoming more of a threat than dangerous speech.

So that brings me to the idea that I had while walking for 45 minutes and listening to the podcast.

I think that laws like the First Amendment might have to change based on the evolution of human society and technology. It’s depressing in a similar way to what Yuval Harari talks about in some of his work, i.e., that religion and Capitalism and all these various ideas that have served us in the past might eventually become outdated to the point of becoming useless. And at that point we’ll need something new.

That isn’t to say that it’s time to discard the First Amendment, but rather that it’s possible for something so sacred and so pure as the First Amendment—or Capitalism, or fill_in_the_blank that we’ve always loved—to become such a bad fit with our current reality that we have to modify it to survive.

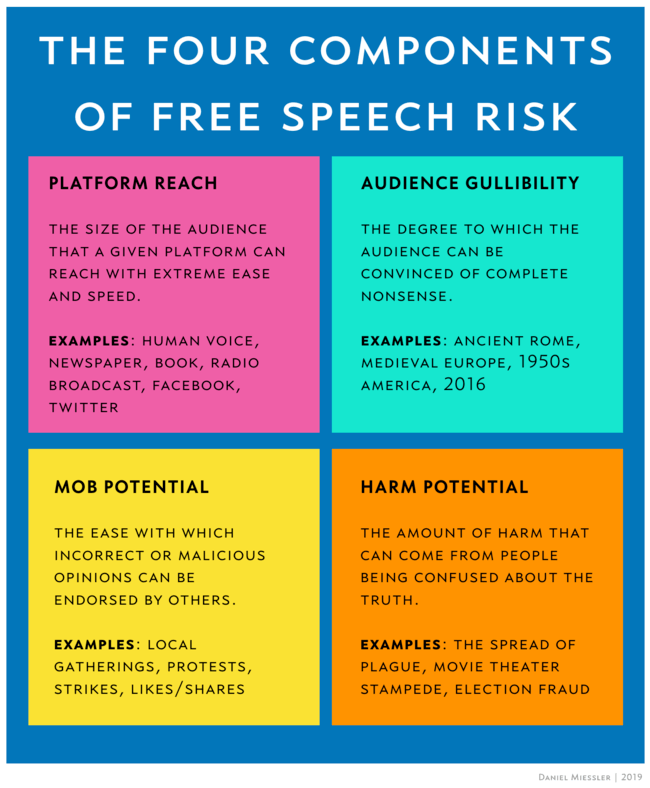

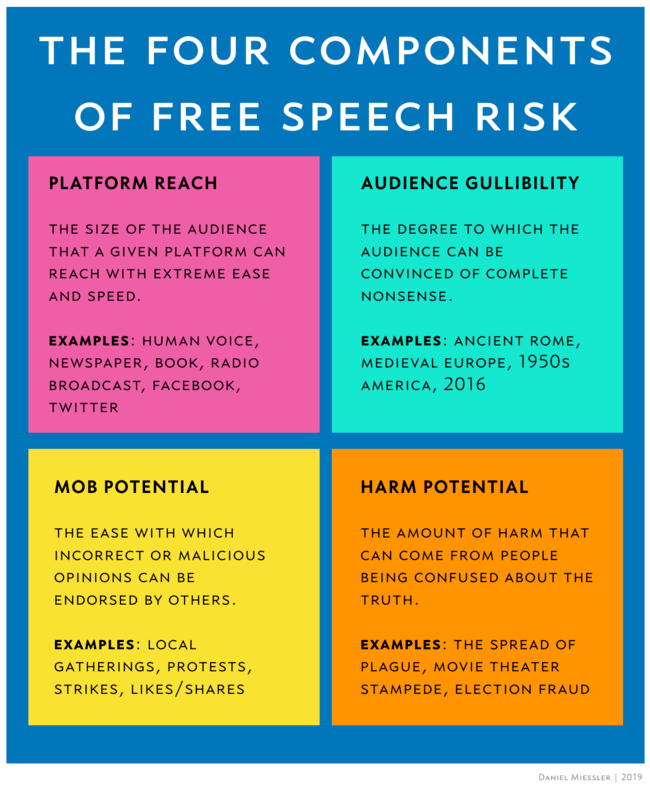

The way I tried to capture this was to look for elements of risk in free speech, and imagine those element values at various stages of human history. I think the components might look something like:

Platform Reach

Audience Gullibility

Mob Potential

Harm Potential

Platform Reach is how many people can hear you when you pronounce a bit of free speech. Audience Gullibility is how susceptible people are to bullshit. Mob Potential is the ability to get others to agree quickly to a given argument or sentiment. And Harm Potential is how bad it could be if one’s free speech were to be harmful or malicious.

I’m not a historian, so apologies if I’m being sloppy here.

In the late 1700s the platform was the voice, the letter, and the book, which are either small, slow, or limited in penetration. And even though people were far less educated in the past, they were also more indoctrinated with a government’s or religion’s dogma, which likely immunized them to other brands of mental debris. And the harm potential back then of a dangerous stump speech or a letter or a book was definitely significant at the top end, but in modern times the potential to change voting patterns, cause social strife, and disrupt herd immunity is arguably even more severe.


Really, check out the Nick Bostrom podcast with Sam.

The point here is that we may be at an inflection point where ideas can be weaponized in a way that’s so bad we need regulation to assist. I’m not saying I want this to happen—I’m saying it might be happening regardless of what we want.

The Supreme Court has also recognized that the government may prohibit some speech that may cause a breach of the peace or cause violence.

Legal Information Institute, Cornell Law School

We have to think about all the various combinations of these four variables, and imagine worst-case combinations.

In the worst case, you have maximally gullible people, who are most open to believing a new narrative, who are being force-fed a false truth, on a platform that reaches hundreds of millions, where it’s trivially easy to form a mob, and where it’s relatively easy to cause significant harm in either the short or long term.

That’s kind of where we are.

Never before have we had this specific combination of these risk variables. And that might mean it’s time to start adjusting how we think about ideas.

Of course, if we can improve any of these variables it greatly reduces the risk. If we have a smarter population, if nobody’s able to be malicious on the platform, or if viral movements get shut down quickly, or if it’s somehow not easy to cause true harm to people—all those factors can make things better.

But I don’t see how we’re going to fix any of them, let alone all of them.

I think what will end up happening is that either Twitter is going to move towards the Constitution—since they can’t possibly police everything without some major breakthroughs in ML—or the Constitution (and similar law elsewhere) is going to have to move towards Twitter.

That means instead of having a specific list of things you can’t say within the protection of free speech, the scope of what’s considered dangerous (like yelling fire in a theater) will expand greatly.

Such laws might say something like:

People who act in bad faith, with the intent to harm either individuals or groups, and use platforms designed to reach over 1,000 people, where the outcomes can conceivably result in harm—will be in violation of the Conscious Harm and Communication Act (CHACA).

A thing that we hopefully won’t need

Imagine how much interpretation there will be in there. Imagine how much controversy there will be about what applies and what doesn’t.

We don’t really have to imagine. Just look at Twitter.

Listen to the podcast.

The coolest point from Roger was the fact that we’re passively accepting the ownership of our data, and we shouldn’t.

Sam’s point was that we already have a line in the sand in the form of the Constitution, so why not use that?

I think Harari’s point is salient here, i.e., that we might have simply evolved past that being a useful protection anymore. Ideas + Maliciousness + Gullibility + Global Tech Platforms might be our first Bostrom black ball.

What will laws look like that protect global health in this realm? I think we can expect them to be broad and open to interpretation—much like we see today with Twitter.