The Chinese Room Problem With the 'LLMs only predict the next token' Argument

I'm sure you've heard the argument that LLMs aren't really thinking because, as its proponents put it,

LLMs are just predicting the next token...

The problem with this argument is that exactly the same thing applies to the human brain. You can just as easily say,

The human brain is nothing but a collection of neurons that finds patterns and generates output.

The reason output argument

There's a related argument about whether something can reason, which goes something like,

If you give something a problem and the output could only have been the result of reasoning, then the thing you gave it to must be able to reason.

And one counter to that argument is John Searle's Chinese Room Argument, which basically says that computers can't ever really be thinking because they don't truly understand what they're doing.

Searle's analogy is a person inside a room with a set of instructions for manipulating Chinese characters. Questions written in Chinese are passed into the room, and the person follows the instructions to pass back appropriate answers in Chinese. The person inside the room doesn't actually understand Chinese; they just follow the instructions to produce the correct output.

Searle argues that this room is like a computer (any and all computers, actually): complex work comes out of it, but at no point is there actual thinking or understanding happening.
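To make the setup concrete, here is a minimal sketch of the room as a pure symbol-matching procedure. The rulebook entries, the fallback reply, and the `room` function are all made up for illustration; the only point is that no step in the procedure consults what the symbols mean.

```python
# A toy "Chinese room": the operator matches incoming symbols against a
# rulebook and copies out the prescribed reply. Nothing here looks at meaning.

# Hypothetical rulebook; the entries are placeholders for illustration only.
RULEBOOK = {
    "你好吗？": "我很好。",
    "你会说中文吗？": "会。",
}

# Reply used when no rule matches (also a placeholder).
FALLBACK = "请再说一遍。"


def room(symbols: str, rulebook: dict[str, str] = RULEBOOK) -> str:
    """Return whatever reply the rulebook prescribes for the incoming symbols."""
    return rulebook.get(symbols, FALLBACK)


print(room("你好吗？"))
```

From outside the room, all anyone sees is symbols going in and sensible symbols coming out.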

The problem with the Chinese Room Argument

The extraordinary mistake in this argument is not realizing that the brain of a Chinese-speaking human is also a Chinese machine.

When Chinese goes into the ears or eyes of a Chinese speaker, what comes out is understanding of what that Chinese meant. The speaker has no idea how that happened. They simply look at the characters on the page, or hear the words, and they somehow know what they mean.

This is exactly as if those Chinese characters are being passed into another room inside of the Chinese speaker’s brain, and inside the brain there is someone in a room with actual Chinese understanding.

We know for certain that this is what's happening inside the brain of every human who understands a language. Or anything else.

Once we understand something, the ability to produce output from that understanding turns into a Chinese room. We throw in input, and our brain throws back output. From where? We have no earthly idea.

Our brains are Chinese rooms.

Somehow, we have forgotten this. We've completely overlooked the fact that we haven't the slightest idea where all this knowledge, understanding, and creativity is coming from when we pose problems to our own minds.

Confusing substrate and output

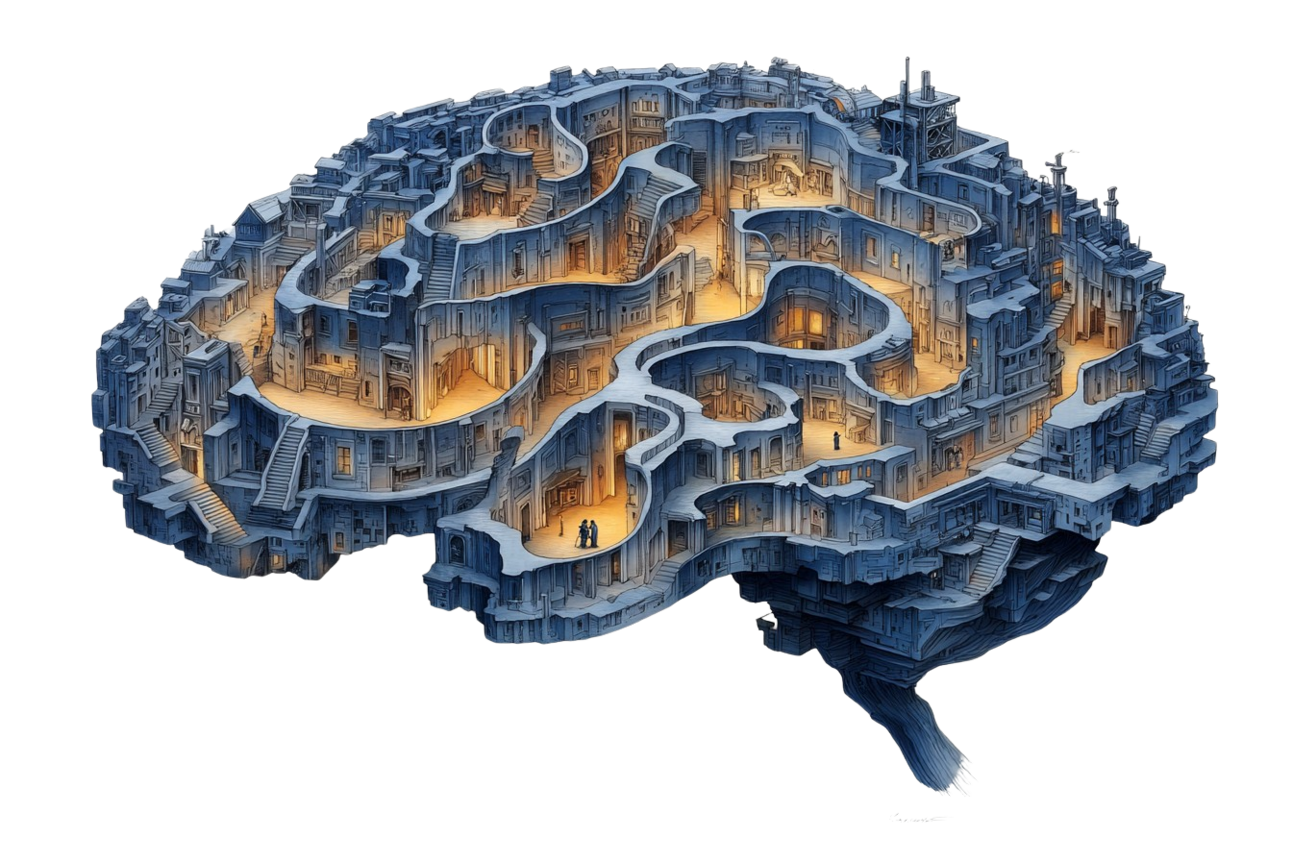

If you slice into a human brain and look at what it's made of, you will find nothing but neurons, axons, and synapses, just as you will find nothing but layers of weights in the brain of a Transformer. Two different substrates, but ultimately an architecture of connections and activations.

In both cases, the process that generates the output is completely opaque, non-deterministic, and highly mysterious.

If you want to know if something can reason, I propose the only way to find out is to:

- Decide on a definition of reasoning

- Give it problems that require reasoning to solve

- See if it can solve them

Anything less is dishonest at some fundamental level.
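The three steps above can be sketched as a small black-box test harness. This is only an illustration under loud assumptions: the operational definition (a pass-rate threshold), the `THRESHOLD` value, the sample problems, and the `passes` function are all hypothetical choices, not a settled definition of reasoning.

```python
from typing import Callable

# Step 1: decide on a definition. Assumption for this sketch only:
# "can reason" means answering at least THRESHOLD of the problems correctly.
THRESHOLD = 0.8

# Step 2: problems that should require reasoning, paired with checkable
# answers. These are illustrative placeholders.
PROBLEMS = [
    ("All bloops are razzies and all razzies are lazzies. "
     "Are all bloops lazzies? Answer yes or no.", "yes"),
    ("A bat and a ball cost 110 cents in total. The bat costs 100 cents "
     "more than the ball. How many cents does the ball cost?", "5"),
    ("What number comes next: 2, 4, 8, 16?", "32"),
]


def passes(solver: Callable[[str], str]) -> bool:
    """Step 3: see if the solver can solve them, judging only by its output."""
    correct = sum(solver(q).strip().lower() == a for q, a in PROBLEMS)
    return correct / len(PROBLEMS) >= THRESHOLD


# A solver that ignores the question gets one of three right and fails.
print(passes(lambda question: "yes"))
```

The harness never looks inside `solver`. It could wrap a person typing answers or a call to a model, and the verdict depends only on what comes back. In practice the problems would also need to be ones the solver has not seen before, since a memorized answer key would pass this check too.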

Yes, humans can reason. But we're really sloppy about how we claim to know that. And as a result, we're similarly sloppy with our definition of reasoning.

Most attempts end up being No True Scotsman appeals that basically say,

It's truly reasoning if and only if it reasons like I think humans do.

We have no idea how humans are able to reason or be creative.

Very much like Transformers, our brains are black boxes that produce wondrous output through a vast network of small nodes that light up together when given input.

Any materialist who claims that we are fundamentally different from Transformers has all their work ahead of them, since they know neither how humans do it nor how Transformers do it.