World Model + Next Token Prediction = Answer Prediction


A new way to explain LLM-based AI

Thanks to Eliezer Yudkowsky, I just found my new favorite way to explain LLMs—and why they’re so strange and extraordinary.

Here’s the post that sent me down this path.

Eliezer Yudkowsky ⏹️ @ESYudkowsky

"it just predicts the next token" literally any well-posed problem is isomorphic to 'predict the next token of the answer' and literally anyone with a grasp of undergraduate compsci is supposed to see that without being told.

2:17 PM • Aug 23, 2024

And here’s the bit that got me…

"Literally any well-posed problem is isomorphic to 'predict the next token of the answer'."

Eliezer Yudkowsky

well-posed problem = prediction of next token of answer

Like—I knew that. And I have been explaining the power of LLMs similarly for over two years now. But it never occurred to me to explain it in this way. I absolutely love it.

Typically, when you’re trying to explain how LLMs can be so powerful, the narrative you’ll get from most is…

There’s no magic in LLMs. Ultimately, it’s nothing but next token prediction.

(victory pose)

A standard AI skeptic argument

The problem with this argument, which Eliezer points out so beautifully, is that with an adequate understanding of the world, there’s not much daylight between next token prediction and answer prediction.
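To make the "isomorphic" claim concrete, here is a minimal Python sketch of the reduction in both directions. It is only an illustration, not how an LLM is implemented: the names `make_next_token_predictor`, `answer_by_prediction`, `toy_solver`, and the `END` marker are all hypothetical, tokens are single characters, and the "world model" is a toy that only knows addition. The point is that anything able to solve a problem can be wrapped as a next token predictor, and anything able to predict the next token of the answer can be looped to produce the whole answer.

```python
from typing import Callable

END = "<end>"  # hypothetical end-of-answer marker (an assumption of this sketch)


def make_next_token_predictor(solve: Callable[[str], str]) -> Callable[[str, str], str]:
    """Wrap any solver as a next token predictor.

    Tokens are single characters here, purely for simplicity.
    """
    def next_token(question: str, partial: str) -> str:
        answer = solve(question)
        if not answer.startswith(partial):
            raise ValueError("partial answer is inconsistent with the solver")
        # Emit the next character of the answer, or END once it is complete.
        return answer[len(partial)] if len(partial) < len(answer) else END

    return next_token


def answer_by_prediction(
    next_token: Callable[[str, str], str], question: str, max_tokens: int = 64
) -> str:
    """Recover a full answer using nothing but repeated next token prediction."""
    partial = ""
    for _ in range(max_tokens):
        token = next_token(question, partial)
        if token == END:
            break
        partial += token
    return partial


def toy_solver(question: str) -> str:
    """A stand-in 'world model' that only understands questions like '17+25'."""
    left, right = question.split("+")
    return str(int(left) + int(right))


predictor = make_next_token_predictor(toy_solver)
print(answer_by_prediction(predictor, "17+25"))  # prints 42
```

The decoding loop never looks inside the predictor. All of the capability lives in whatever `next_token` knows about the world, which is why "just predicting the next token" says nothing about how much the predictor understands.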

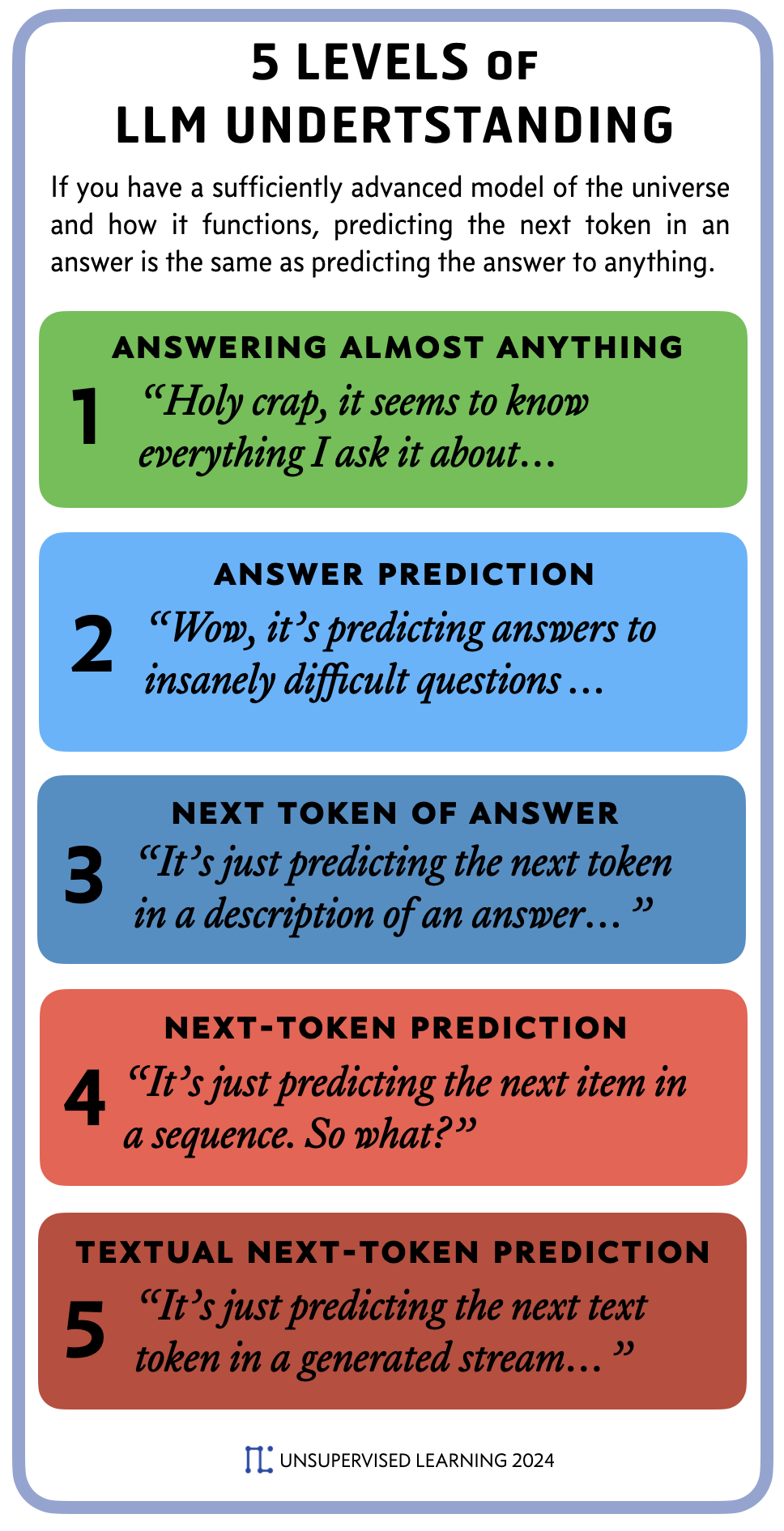

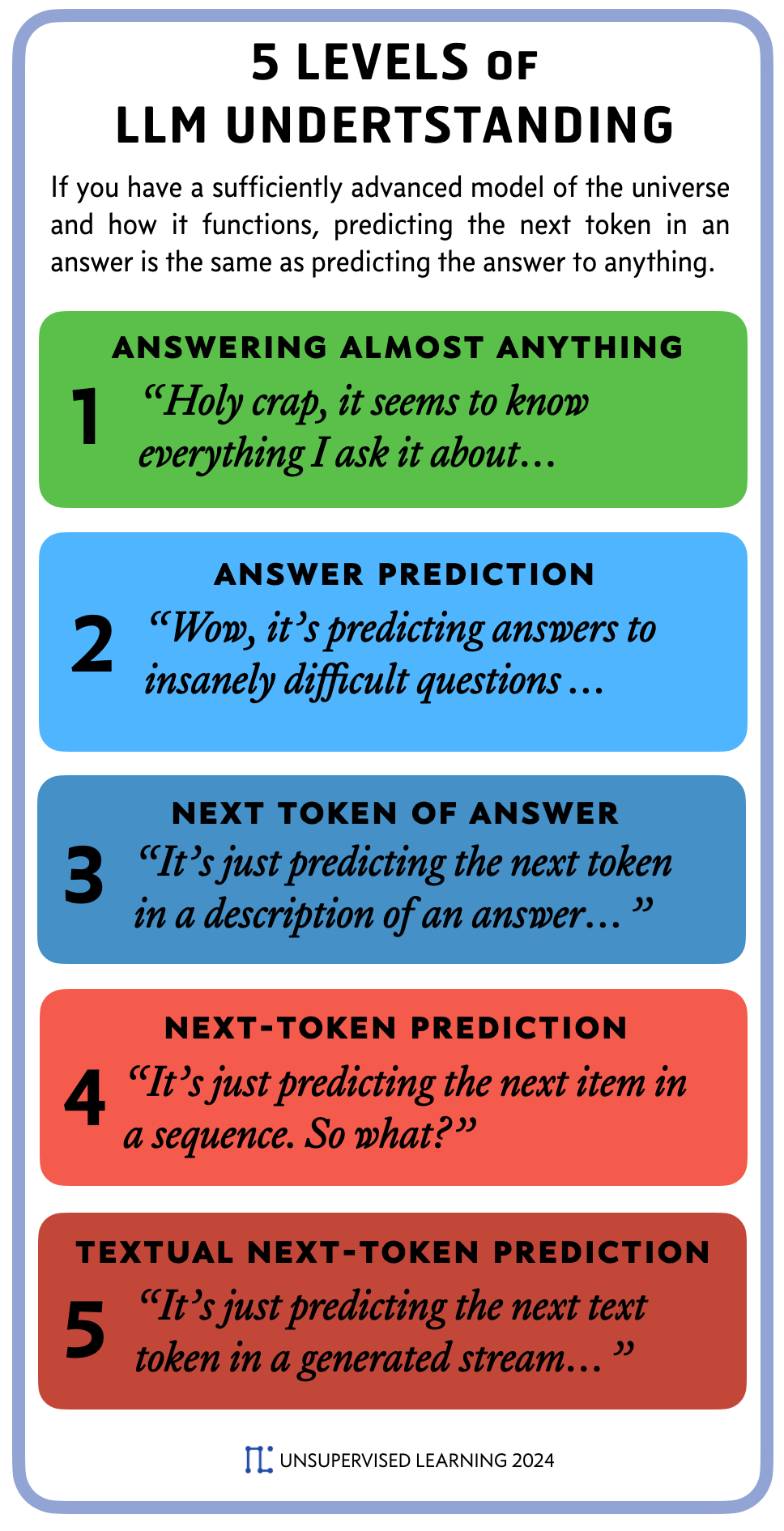

So, here’s my new way of responding to the "just token prediction" argument, using 5 levels of jargon removal.

The 5 Levels of LLM Understanding

TIER 1: "LLMs just predict the next token in text."

TIER 2: "LLMs just predict next tokens."

TIER 3: "LLMs predict the next part of answers."

TIER 4: "LLMs provide answers to really hard questions."

TIER 5: "HOLY CRAP IT KNOWS EVERYTHING."

That resonates with me, but here’s another way to think about it.

Answers as descriptions of the world

If you understand the world well enough to predict the next token of an answer, that means you have answers. 🤔

Or:

The better an LLM understands reality and can describe that reality in text, the more "predicting the next token" becomes knowing the answer to everything.

But "everything" is a lot, and we’re obviously never going to hit that (see infinity and the limits of math/physics, etc.).

So the question is: What’s a "good enough" model of the universe—for a human context—to be effectively everything?

Human vs. absolute omniscience

If you’re tracking with me, here’s where we’re at, as a deductive argument.¹

If you have a perfect model of the universe, and you can predict the next token of an answer about anything in that universe, then you know everything.

But we don’t have a perfect model of the universe.

Therefore—no AI (or any other known system) can know everything.

100% agreed.

But the human standard for knowing everything isn’t actually knowing everything. The bar is much lower than that.

The human standard isn’t:

Give me the location of every molecule in the universe.

Predict the exact number of raindrops that will hit my porch the next time it rains.

Predict the exact price of NVIDIA stock at 3:14 PM EST on October 12, 2029.

Tell me how many alien species in the universe have the equivalent of an IQ above 100.

These are, as far as we understand physics, completely impossible to know because of the limits of the physical, atom-based, math-based world. So we can’t ever know "everything", or really anything close to it.

But take that off the table. It’s impossible, and it’s not what we’re talking about.

What I’m talking about is human things. Things like:

What makes a good society?

Is this policy likely to increase or decrease suffering in the world?

How did this law affect the outcomes of the people it was supposed to help?

These questions are big. They’re huge. But there’s an "everything" version of answering them (which we’ve already established is impossible), and then there’s the "good enough" version of answering them—at a human level.

I believe LLM-based AI will soon understand the things that matter to humans (science, physics, materials, biology, laws, policies, therapy, human psychology, crime rates, survey data, etc.) deeply enough that we will be able to answer many of our most significant human questions.

Things like:

What causes aging and how do we prevent or treat it?

What causes cancer and how do we prevent or treat it?

What is the ideal structure of government for this particular town, city, country, and what steps should we take to implement it?

For this given organization, how can it maximize its effectiveness?

For this given family, what steps should they take to maximize the chances of their kids growing up as happy, healthy, and productive members of society?

How does one pursue meaning in their life?

Those are big questions—and they do require a ton of knowledge and a very complex model of the universe—but I think they’re tractable. They’re nowhere near "everything", and thus don’t require anywhere near a full model of the universe.

In other words, the bar for practical, human-level "omniscience" may be remarkably low, and I believe LLMs are very much on the path to getting there.

The argument in deductive form

Here’s the deductive form of this argument.

If you have a perfect model of the universe, and you can predict the next token of an answer about anything in that universe, then you know everything.

But we don’t have a perfect model of the universe.

Therefore—no AI (or any other known system) can know everything.

However, the human standard for "everything" or "practical omniscience" is nowhere near this impossible standard.

Many of the most important questions to humans that have traditionally been associated with something being "godlike", e.g., how to run a society, how to pursue meaning in the universe, etc., can be answered sufficiently well using AI world models that we can actually build.

Therefore, humans may soon be able to build "practically omniscient" AI for most of the types of problems we care about as a species.²

🤯

Guess what? We do it too…

Finally, there’s another point worth mentioning here: every scientific indication we have points to humans being word predictors too.

Try this experiment right now, in your head: Think of your favorite 10 restaurants.

…

As you start forming that list in your brain—watch the stuff that starts coming back. Think about the fact that you’re receiving a flash of words, thoughts, images, memories, etc., from the black box of your memory and experience.

Notice that if you did that same exercise two hours from now—or two days from now—the flashes and thoughts you’d have would be different, and you might even come up with a different list, or put the list in a different order.

Meanwhile, the way this works is even less understood than LLMs! At some level, we, too, are "just" next-token predictors, and it doesn’t make us any less interesting or wonderful.

Summary

It all starts with the sentence "next token prediction is isomorphic to answer prediction".

This means "next token prediction" is actually an extraordinary capability—more like splitting the atom than a parlor trick.

Humans seem to be doing something very similar, and you can watch it happening in real-time if you pay attention to your own thoughts or speech.

But the quality of a token predictor comes down to the complexity/quality of the model of the universe it’s based on.

And because we can never have a perfect AI model of the universe, we can never have truly omniscient AI.

Fortunately, to be considered "godlike" to humans—we don’t need a perfect model of the universe. We only need enough model complexity to be able to answer the questions that matter most to us.

We might be getting close.

1 A deductive argument is one where you must accept the conclusion if you accept the premises above, e.g., 1) All rocks lack a heartbeat. 2) This is a rock. 3) Therefore, this lacks a heartbeat.

2 Thanks to Jai Patel, Joseph Thacker, and Gabriel Bernadett-Shapiro for talking through, shaping, and contributing to this piece. They’re my go-to friends for discussing lots of AI topics—but especially deeper stuff like this.