Why We'll Have AGI by 2025-2028

People are thinking a lot about when we're going to get Artificial General Intelligence (AGI), and I think it's coming faster than most people expect.

AGI is distinct from regular AI because regular AI is "narrow," meaning it's only good at a very specific thing and can't adapt to complexity like humans. An AI that's good at multiple things, or is able to handle complexity, would be monumental. It would be direct competition for humans—especially human workers.

For the longest time, the consensus of the best experts was that this would either 1) never happen, or 2) come many decades from now.

But given what's happened in the last year with GenAI and ChatGPT, that's now changing. My prediction is a 60% chance of AGI by 2025 and a 90% chance by 2028.

But before we get too deep into it, we need to more accurately define what we mean by AGI. Here's Sam Altman's definition:

"For me, AGI…is the equivalent of a median human that you could hire as a co-worker." (Sam Altman)

This is a decent attempt, but I think it has a couple of problems. First, it seems to focus on knowledge work, but it doesn't say so. He isn't talking about an average construction worker here. AGI is not a robotics thing.

Second, the word "median" is problematic. Why not just say average? Because mean and median are two different types of average, and they can give different numbers for the same group of people, so the choice changes what the definition actually means.
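To make the mean vs. median point concrete, here's a minimal sketch in Python. The salaries are made up by me purely for illustration; they don't come from Payscale or any other study.

```python
from statistics import mean, median

# Hypothetical salaries for five workers (illustrative numbers only).
# One very high earner pulls the mean up but leaves the median alone.
salaries = [40_000, 50_000, 60_000, 70_000, 280_000]

mean_salary = mean(salaries)      # sum of all salaries divided by the count
median_salary = median(salaries)  # the middle value once sorted

print(f"mean:   {mean_salary}")
print(f"median: {median_salary}")
```

With these numbers the mean lands well above the median, so "the average worker" describes a different person depending on which average you pick.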

Anyway, here's my definition that I think is more in line with what people really mean when they talk about AGI:

"An AI system capable of replacing a knowledge worker making the average salary in the United States." (Daniel Miessler)

I think this is a better definition because it's more specific. Payscale did a study of 302 different knowledge worker salary profiles, and found the average salary to be $87,342.

Cool, so let's say that an AGI is a system that can replace an average knowledge worker in the US making an average salary.

So how could that happen?

Paths to AGI

A lot of people are skeptical of imminent AGI because they've made the mistake of thinking it has to come from one component.

They're imagining some new model like GPT-6 being AGI-capable by itself. That's one way to get there, but it's not the only way. And I'd argue it's not how we'll get there first.

I think we'll get there through an AGI system, not an AGI component.

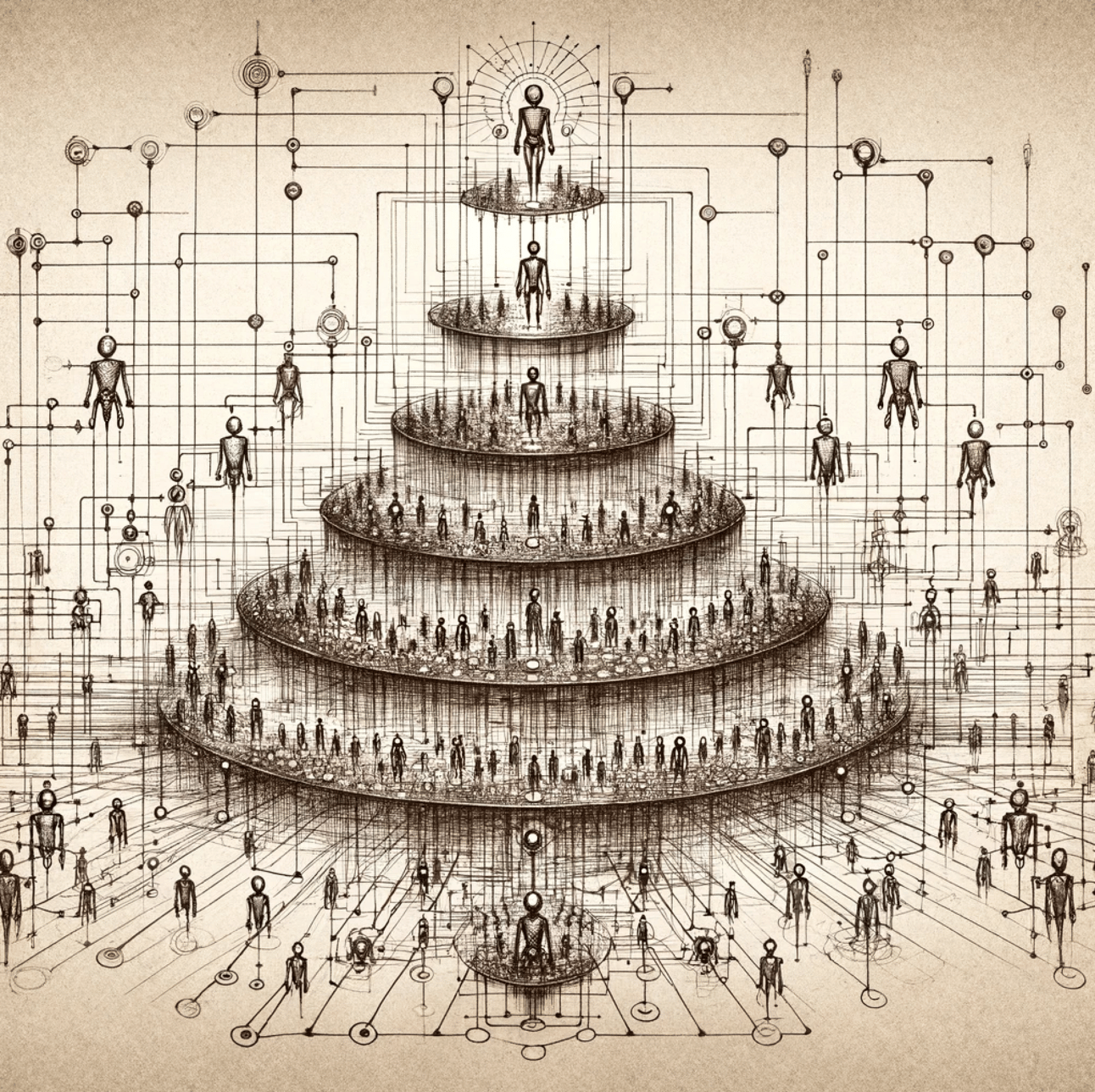

Systems vs. components

- Ant colonies are more powerful than ants

- Families are more powerful than dads

- Companies are more powerful than employees

This isn't just about numbers. It's the combination of having objectives at different levels, the division of labor, and the execution of those roles that together make progress toward a shared goal.

And here's the crazy connection to AGI:

We just need a system of AIs that works together to accomplish a shared goal.

So, if it's a Customer Service AGI, it might have:

- a top-level agent

- an agent over each of multiple sub-departments

- multiple teams of actual service representatives under each sub-department

The teams of agents will be trained in a particular region, on a particular language, and they'll be accustomed to certain types of questions and problems. They might have slightly different goals as well. But they're all unified by the goal of the tier above them. Same thing all the way up the tiers to the top.

So what you end up with is a system of AIs that are not individually AGI-capable, but the system as a whole is.
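Here's a rough sketch of what that tiered structure could look like in code. This is a toy, not a real implementation: the `Agent` class, the `route` method, and all the agent names, goals, and topics are hypothetical, and each "agent" is just a plain object standing in for whatever model would actually do the work. The point is only to show goals flowing down the tiers and requests being delegated to a specialist.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class Agent:
    """One node in the hierarchy (hypothetical stand-in for a real AI agent)."""
    name: str
    goal: str
    topics: frozenset[str] = frozenset()               # what a leaf agent can handle
    reports: list[Agent] = field(default_factory=list)  # agents in the tier below

    def route(self, topic: str, goals: tuple[str, ...] = ()):
        """Find the leaf agent for a topic, collecting goals from every tier above it."""
        goals = goals + (self.goal,)
        if not self.reports:
            # Leaf tier: a service representative either covers the topic or doesn't.
            return (self.name, goals) if topic in self.topics else None
        # Manager tier: delegate to the first report that can take the request.
        for report in self.reports:
            found = report.route(topic, goals)
            if found is not None:
                return found
        return None


# Assumed example layout: top-level agent, sub-department leads, regional reps.
system = Agent(
    "head-of-service",
    "keep customers happy",
    reports=[
        Agent(
            "cards-lead",
            "resolve card issues quickly",
            reports=[
                Agent("cards-rep-us", "answer US customers in English",
                      frozenset({"lost card", "card fraud"})),
            ],
        ),
        Agent(
            "loans-lead",
            "explain loan terms clearly",
            reports=[
                Agent("loans-rep-us", "answer US customers in English",
                      frozenset({"loan payoff"})),
            ],
        ),
    ],
)

result = system.route("lost card")
print(result)
```

No single object here handles every request. The capability comes from the arrangement, with each representative working under the goals of every tier above it.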

And remember, the standard is pretty low here. Our AGI definition is something that replaces a single worker! But a system like this, with all the various tiers of agents, will likely be able to replace an entire department.

There's another way in which this bar is low. If you want to replace the head of customer service for Bank of America, that's a very senior position, and the person you need to find will have years of experience in very similar roles. You can't just grab someone who has spent a career running accounting teams.

So we don't even need the Customer Service AGI system to be the same system that we use for Accounting or Threat Intelligence. We can hire a separate AGI system for each of those.

But it's still AGI.

Why? Because it has (at least) replaced the capabilities of an average knowledge worker making an average US salary, which is our definition.

Summary

This is why AGI is coming sooner rather than later.

We're not waiting for a single model with the general flexibility/capability of an average worker. We're waiting for a single AGI system that can do that.

To the human controlling it, it's the same. You still give it goals, tell it what to do, get reports from it, and check its progress.

Just like a co-worker or employee.

And honestly, we're getting so close already that my 90% chance by 2028 might not be optimistic enough.