Why Biometric Data Breaches Won’t Require You To Change Your Body

Image by burak kostak

I hear a lot of people in InfoSec say things like,

"I guess if my biometrics get hacked I’ll just change my face!" Or, "New Security Best Practice: Change face, eyes, and fingerprints every 90 days!"

These are funny, but it’s important to realize that there’s a difference between you and pictures of you.

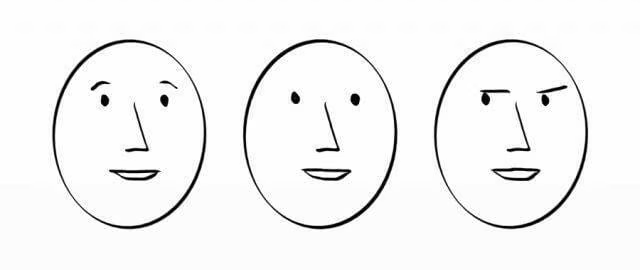

Imagine, for example, that there’s a security guard at the TSA that authenticates people using a comparison of images from a stick figure camera.

from XKCD

If you look at these pictures above, taken by Randall Munroe with that camera, you can see differences in the people, and those differences are used to authenticate them. So if the TSA guard knows that Philip is the guy with the beret, he can either let him in, or not.

These pictures could be out there in the world, circulated in airports, and even read by automated scanners to determine who to let in or not let in, but we wouldn’t be tempted to say that these pictures have stolen us in some foundational way.

In the case of the stick figure camera this is obvious, because the stick figure image of Philip that it makes is just a feeble abstraction of the real Philip.

The part a lot of people are missing is that modern biometrics are just stick figure cameras with better resolution.

They don’t capture eyes, or voices, or fingerprints. What they do is make crude diagrams of those things.

But wait, you say. Stick figure diagrams can’t confuse humans into thinking they’re a real person! And you can’t log into an iPhone with a stick figure diagram of a fingerprint, while you can with TouchID data.

That’s true, and that brings us to the second piece of this idea: it’s the combination of the diagram and the reader that matters.

Humans don’t authenticate other people’s faces based on reality either. They build a representation of the person they’re viewing, as perceived by the eyes and processed by the brain, and store it as a concept.

It’s just another drawing. The original, eye-based kind.

Then the reader—going back hundreds of thousands of years—was also the human eye (and brain), and that completed the pair: the eye takes the image, stores it as a concept, and then the eye reads it and checks for a match.

With modern biometrics we have a different pair: a machine takes the diagram, and a machine reads it to see if it matches. But it’s still just a representation of the thing and not the thing itself.

Why does this matter?

Because to change the authentication system you just have to change either the image or the reader. The object itself is largely irrelevant.

The more complexity you have in a stick figure drawing, the more choices you are actually making about what to sample and how to store that representation. With a system like TouchID there is a lot of data, and a lot of choices about how to capture it (sensor), manipulate it, and then store it (algorithm). With FaceID there’s far more.

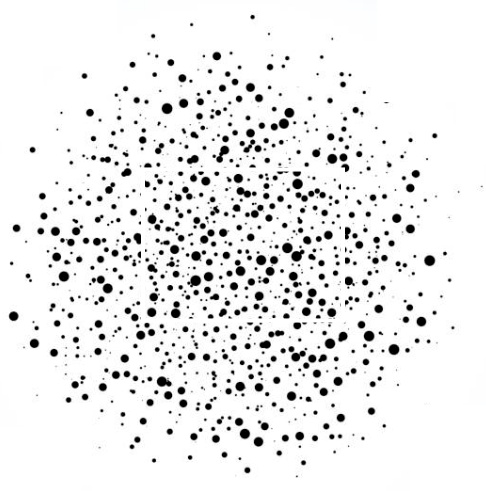

Imagine a representation of a fingerprint in a biometric system as something like a randomly situated, three-dimensional matrix of dots the size of a basketball. As you rotate this ball around in your hands, with all the various sizes of the dots, and distances between them, that’s the fingerprint. That’s your stick figure picture.

It’s also important to understand that this figure is a destructive representation, meaning that, like a hashing algorithm, there’s no way to go from the representation to the original because biometric systems aren’t storing photographs of the body.

If you adjust the algorithm for capture of the image—or the algorithm for how it’s saved into the 3D array of dots—in any way, well, now you have a completely different representation that would require an update on the reader side to match it.
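To make the pairing concrete, here is a minimal toy sketch in Python. It is not how TouchID, FaceID, or any real product works; the raw "scan" is just a list of numbers, and `make_template`, `scheme_seed`, and `matches` are hypothetical names invented for this illustration. The template is a set of sign bits from pseudo-random weighted sums of the scan, which throws information away, and the reader accepts a template when it is within a small Hamming distance of the stored one.

```python
import random

def make_template(scan, scheme_seed, bits=128):
    """Reduce a raw scan (a list of floats) to `bits` sign bits.

    scheme_seed is a stand-in (an assumption of this sketch) for every
    capture and storage choice the vendor makes.
    """
    rng = random.Random(scheme_seed)
    template = []
    for _ in range(bits):
        # One pseudo-random weighted sum of the scan per output bit.
        projection = sum(rng.gauss(0.0, 1.0) * x for x in scan)
        template.append(1 if projection >= 0 else 0)
    return template

def hamming_distance(a, b):
    """Count the positions where two templates disagree."""
    return sum(x != y for x, y in zip(a, b))

def matches(stored, fresh, max_distance=20):
    """The reader: accept when the two templates are close enough."""
    return hamming_distance(stored, fresh) <= max_distance

if __name__ == "__main__":
    source = random.Random(0)
    scan = [source.uniform(-1, 1) for _ in range(64)]
    # Same finger, slightly different reading because of sensor noise.
    rescan = [x + source.gauss(0.0, 0.02) for x in scan]

    enrolled = make_template(scan, scheme_seed=1)
    # Same scheme on both sides of the pair.
    print(matches(enrolled, make_template(rescan, scheme_seed=1)))
    # Capture algorithm changed, reader still holds the old template.
    print(matches(enrolled, make_template(scan, scheme_seed=2)))
    # Lossy: doubling every value produces the identical template.
    print(enrolled == make_template([2 * x for x in scan], scheme_seed=1))
```

In this toy, a noisy rescan under the same scheme should land within the threshold, while the same finger under a different `scheme_seed` should disagree on roughly half the bits and be rejected. The last line shows the destructive part: different inputs collapse to the same template, so the template alone cannot tell you which input produced it.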

Another clear way to see how biometric authentication data of this kind is virtually useless to attackers is to look at the Threat Modeling around a few scenarios.

It’s clearly possible to make an easily spoofable sensor/algorithm pair, as we saw with the Samsung implementation that could be unlocked with a picture taken with a smartphone. The solution there is to 1) build a decent product, and 2) perform some minimal testing of the most obvious attack scenarios.

The real risk to biometric authentication will come at some (likely distant) point in the future where it’s possible to: 1) take extraordinarily high resolution images of people’s faces, eyes, hands, etc., and then 2) recreate those body parts in three dimensions with such detail and precision that they can trick any sensor.

But there are two points here. First, this isn’t a realistic threat right now because of the difficulty of reproducing lifelike 3D replicas from images. Second—and most important to this point—this has nothing to do with biometric hash data being stolen.

For hash data, the thing being leaked would not include the actual face (or even a high resolution image of it). It’s just a list of destructive, one-way hashes. This is very different from having a social security number (SSN) or a date of birth (DOB) stolen because those things are numbers. If they’re stolen then the attacker gains the true representation of that thing, which is the number itself.

So what’s the takeaway here?

The magic of a biometric system is in the pairing of the diagram data and the reader, and it’s up to us—as people who make and deploy technology—to determine what those diagrams look like and what readers check those diagrams for authenticity.

So, no, we’re not in danger of needing to change our faces, eyes, or fingerprints anytime soon. When a breach of biometric authentication data happens (it’s when, not if), all we’ll need to do is update the software (and/or hardware) that reads the abstractions of those biomarkers.

It’s important to note, of course, that "just update your systems!" isn’t nearly as easy as it sounds. We should expect some percentage of systems to be deployed that will accept leaked versions of your likeness for a period of time after a breach. But we shouldn’t conflate that problem with needing to change our faces.
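The breach-and-update flow can be sketched in a few lines of Python. This is a toy model under loud assumptions, not a description of any real product: the `Reader` class, its `rotate` method, and `scheme_seed` are hypothetical names made up here, the "scan" is a plain list of numbers, and the attacker is assumed to be able to present a stolen template directly to the reader.

```python
import random

def make_template(scan, scheme_seed, bits=128):
    """Toy lossy template: sign bits of seeded pseudo-random projections."""
    rng = random.Random(scheme_seed)
    out = []
    for _ in range(bits):
        projection = sum(rng.gauss(0.0, 1.0) * x for x in scan)
        out.append(1 if projection >= 0 else 0)
    return out

class Reader:
    """Hypothetical reader that stores one template per user."""

    def __init__(self, scheme_seed, max_distance=20):
        self.scheme_seed = scheme_seed    # stands in for sensor + algorithm choices
        self.max_distance = max_distance  # tolerated bit disagreements
        self.enrolled = {}

    def enroll(self, user, scan):
        self.enrolled[user] = make_template(scan, self.scheme_seed)

    def accepts_template(self, user, template):
        """Check a presented template (for example, one replayed from a leak)."""
        stored = self.enrolled.get(user)
        if stored is None:
            return False
        distance = sum(a != b for a, b in zip(stored, template))
        return distance <= self.max_distance

    def accepts_scan(self, user, scan):
        """Check a live scan using the reader's current scheme."""
        return self.accepts_template(user, make_template(scan, self.scheme_seed))

    def rotate(self, new_seed, fresh_scans):
        """The 'update the software' step: new scheme, everyone re-enrolled."""
        self.scheme_seed = new_seed
        self.enrolled = {u: make_template(s, new_seed) for u, s in fresh_scans.items()}

if __name__ == "__main__":
    source = random.Random(7)
    finger = [source.uniform(-1, 1) for _ in range(64)]

    reader = Reader(scheme_seed=1)
    reader.enroll("philip", finger)
    leaked = list(reader.enrolled["philip"])           # the breach

    print(reader.accepts_template("philip", leaked))   # unpatched reader
    reader.rotate(new_seed=2, fresh_scans={"philip": finger})
    print(reader.accepts_template("philip", leaked))   # patched reader
    print(reader.accepts_scan("philip", finger))       # same finger, new scheme
```

In this sketch the unpatched reader takes the leaked template, which is the window of exposure described above. After `rotate`, the leaked template should no longer be close to anything stored, while the same unchanged finger enrolls and verifies under the new scheme.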

Biometric authentication is all about creating and comparing technical facsimiles of what we are, and the solution to a biometric data breach is updating those abstractions, not updating ourselves.

Notes

I find it both interesting and sad that after all that it still brings us back to patching.

It’s also fascinating to realize the difference between spoofing a machine and spoofing a human. The ability to edit human voices and make them say anything you want, for example, is about to become a major disruptor.

There’s a separate problem of being able to spoof things with such great accuracy that no sensor will be able to tell the difference between the copy and the real thing. Take, for example, a molecule-by-molecule replica of a person. That’s far away, but we need to be aware of the limitation.

Thanks to Steven Harms for talking through some of these concepts with me.