The Biggest Advantage in Machine Learning Will Come From Superior Coverage, Not Superior Analysis

I think there’s confusion around two distinct and practical benefits of Machine Learning (which is somewhat distinct from Artificial Intelligence).


Superior Analysis: A Machine Learning solution is being compared to a human with the best training in the world, e.g., a recognized oncologist, and the two go head to head analyzing a particular problem in a situation where human attention is not a limiting factor. Here the human is at near-maximum potential: awake, alert, and fully focused on the problem for as long as it takes to do the best job they can.

Superior Coverage: A Machine Learning solution is being compared to a non-existent human and/or some kind of crude automated analysis, simply because there aren’t enough humans to look at the content. In this case it doesn’t matter how well a human could perform compared to the algorithm, because doing the job would require hundreds, thousands, millions, or billions of additional humans.

There’s also a third case, where algorithms are better than junior people in the field but not better than those with the best training and experience.

Many people in tech conflate the first two cases when criticizing AI/ML.

In my field of Information Security, for example, the popular argument (as in many fields) is that humans are far superior to algorithms at doing the work, and that AI is therefore useless because it can’t beat human analysts.

Humans being better than machines at a particular task is irrelevant when there aren’t enough humans to do the work.

If there are, say, 100 lines of data produced by a company, and you have a team of 10 analysts spread across L1, L2, and L3 tiers looking at that data over the course of a day, then you’re getting 100% coverage of the content. Compared to an algorithm, the humans are simply going to win here: even the L1 analyst will, and the L2s and L3s definitely will. That’s a case where coverage isn’t a problem and the algorithm doesn’t have Superior Analysis, so the humans come out ahead.

But I would argue that most companies don’t have anywhere near enough analysts to look at the data they produce. Taking a rough stab at the numbers, I’d guess that the top 5% of companies might have an analyst-to-data ratio that lets them look at 50-85% of the data they should be looking at. And that’s in a pre-data-lake world, where existing IT tools aren’t yet producing anywhere near the data they could, if there were enough eyes to analyze it.

For most companies, however (say, the rest of the top 90%), human security analyst ratios probably allow only 5-25% coverage of what they wish they were seeing and evaluating. And the bottom 10% of companies are, I’d say, looking at less than 1% of the data they should be, likely because they don’t have any security analysts at all.
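To make the coverage arithmetic concrete, here’s a minimal Python sketch, assuming coverage is simply total analyst review capacity divided by daily data volume, capped at 100%. The function names and every per-analyst review rate are hypothetical, chosen only for illustration; real review capacity varies enormously by data type and analyst tier.

```python
import math


def coverage(analysts: int, items_per_analyst_per_day: float, items_per_day: float) -> float:
    """Fraction of the daily data volume that human analysts can review (capped at 1.0).

    Hypothetical model: capacity is headcount times a per-analyst daily rate,
    ignoring tiers, shifts, and how hard each item is to evaluate.
    """
    if items_per_day <= 0:
        raise ValueError("items_per_day must be positive")
    capacity = analysts * items_per_analyst_per_day
    return min(1.0, capacity / items_per_day)


def analysts_for_full_coverage(items_per_analyst_per_day: float, items_per_day: float) -> int:
    """Smallest headcount whose combined capacity reaches 100% coverage."""
    if items_per_analyst_per_day <= 0:
        raise ValueError("items_per_analyst_per_day must be positive")
    return math.ceil(items_per_day / items_per_analyst_per_day)


# The small example from the essay: 100 lines a day, 10 analysts.
# Hypothetical rate: each analyst reviews 10 lines a day.
print(coverage(10, 10, 100))  # 1.0

# Hypothetical mid-sized company; every number here is invented for illustration.
print(coverage(10, 5_000, 1_000_000))  # 0.05
print(analysts_for_full_coverage(5_000, 1_000_000))  # 200
```

Under those made-up rates, a team of 10 covers only 5% of a million daily events, and full coverage would take 200 analysts. The required headcount grows in direct proportion to the data volume.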

This visibility problem is exacerbated by a few realities:

Because we’re constantly adding new IT systems and doing more business, the amount of data that needs to be analyzed is growing exponentially, with many small organizations producing terabytes per day and large organizations producing petabytes per day.

We’ve only just started to properly capture all the IT data exhaust from companies into data lakes for analysis, so we’re seeing only a tiny fraction of what’s there. Once data lakes become common, the amount of data each company has to analyze will hockey-stick. That’s a separate problem from the growth that comes from more business and more IT systems: one is capturing more of the data that existing tools and business already produce, the other is having more tools and doing more business.

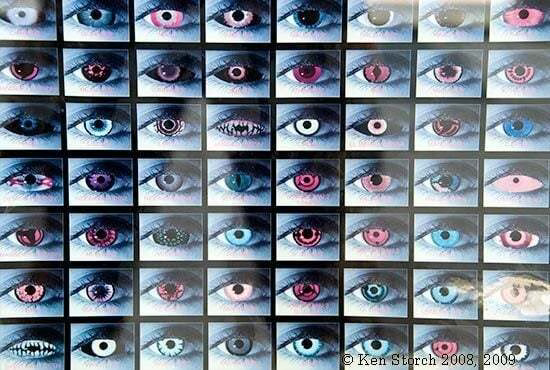

Machines operate 24/7 with no deviation in quality over time, because they don’t get tired. If one worked for 2 weeks straight looking at 700 images a minute, it would do just as well on the next 700 as it did on the first.

It’s much easier to retrain an algorithm than a human, and the update can then be rolled out to the entire environment (or the entire planet, where applicable).

Even if you could train enough humans to do this work, the training would be highly inconsistent due to different life experience and constant churn in the workforce.
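For a sense of scale, here’s the arithmetic behind the 2-week example of a machine reviewing 700 images a minute, assuming it runs continuously with no downtime:

$$
700\ \frac{\text{images}}{\text{min}} \times 60\ \frac{\text{min}}{\text{h}} \times 24\ \frac{\text{h}}{\text{day}} \times 14\ \text{days} = 14{,}112{,}000\ \text{images}
$$

That’s over 14 million images, the last 700 reviewed at the same quality as the first.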

I’m reminded of the open source "many eyes" problem, where the source code being open doesn’t matter if nobody is looking at it. That’s exactly the type of problem that I’m talking about here with the Superior Coverage advantage.

When you combine these factors, you finally see the full advantage of algorithmic analysis: there’s just no way for humans to provide the value that machines can. That’s not because humans are worse at analysis, but because we can’t scale human knowledge and attention to match the problem.

If you take all the things in the world that need evaluation by human experts (everyone’s heart rate, everyone’s IT data, the efficiency of global energy distribution, visual sensor data that may show threats, bugs in the world’s source code, asteroids that might hit Earth, etc.), I don’t think it’s realistic to expect humankind ever to look at even a tiny fraction of 1% of this content.

When you hear someone dismissing Machine Learning by saying humans are better, ask yourself what percentage of the data in question a potential human workforce can realistically evaluate.

And that’s assuming a human would even be better at the analysis if they were available to do the work.

If (once?) the algorithms become better than human specialists (see chess, Go, cancer detection, radiology film analysis, etc.), the Superior Analysis factor becomes an exponent on the Superior Coverage factor, especially when you consider that an algorithmic update can be rolled out via copy and paste, as opposed to retraining billions of humans.

Exciting times.