Summary: Algorithms to Live By

My book summaries are designed as captures of what I’ve read, and aren’t necessarily great standalone resources for those who have not read the book. Their purpose is to ensure that I capture what I learn from any given text, so as to avoid realizing years later that I have no idea what it was about or how I benefited from it.

My One-Sentence Summary

Many problems that we all deal with as part of life have practical solutions that come from computer science, and this book gives a number of examples.

Content Extraction

There are many algorithms that come from computer science that can be used to improve human decision making in everyday life.

The Secretary Problem is a form of the Optimal Stopping problem, where you’re not sure when you should stop searching for the best option. You could keep searching and maybe find something better, but that might be a waste of time you should be spending on something else. There is an actual answer: look at the first 37% of the options without committing, then be ready to choose. Optimal Stopping is about avoiding stopping too early or too late. If you follow this optimal strategy you will also have a 37% chance of finding the best thing.

This is also related to the look-then-leap rule, which is where you spend a certain amount of time looking and not choosing anyone, and then after that point you pick the very first person that’s better than everyone you’ve seen so far.
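A minimal sketch of the look-then-leap rule, assuming each candidate can be reduced to a single comparable score and that candidates arrive in random order. The function names and the simulation are my own illustration, not from the book.

```python
import math
import random


def look_then_leap(scores):
    """Return the index chosen by the look-then-leap rule.

    Skip the first ~37% (n/e) of candidates, then take the first one who
    beats everyone seen so far. If nobody does, we are stuck with the last.
    """
    n = len(scores)
    look = int(n / math.e)  # size of the "look" phase, about 37% of the pool
    best_seen = max(scores[:look], default=float("-inf"))
    for i in range(look, n):
        if scores[i] > best_seen:
            return i
    return n - 1


def success_rate(n=100, trials=10_000, seed=0):
    """Estimate how often the rule picks the single best candidate."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        scores = rng.sample(range(n), n)  # a random ordering of distinct ranks
        if scores[look_then_leap(scores)] == n - 1:
            wins += 1
    return wins / trials
```

Running `success_rate()` should land somewhere near 0.37, which matches the 37% chance of success mentioned above.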

It’s a whole other game if you have a metric you’re going by: like typing speed. If that’s the case just wait for the person who satisfies a high standard and pull the trigger.

That’s called The Threshold Rule.

All quotes here are from the book itself unless otherwise indicated: Christian, Brian. Algorithms to Live By: The Computer Science of Human Decisions (p. 14). Henry Holt and Co. Kindle Edition

Any yardstick that provides full information on where an applicant stands relative to the population at large will change the solution from the Look-Then-Leap Rule to the Threshold Rule and will dramatically boost your chances of success.

A 63% failure rate, when following the best possible strategy, is a sobering fact.

If you’re a skilled burglar and have a 90% chance of pulling off each robbery (and a 10% chance of losing it all), then retire after 90/10 = 9 robberies.

Most people do something like the look-then-leap rule, but they leap too early.

Exploring vs. enjoying (pattern vs. novelty)

Every day we are constantly forced to make decisions between options that differ in a very specific dimension: do we try new things or stick with our favorite ones?

If you don’t know anything about the situation other than how many times a thing has happened, say (3 out of 5), then the proper estimate for whether it will happen again is attained by adding one to the numerator and two to the denominator. So, 4 out of 7.
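The add-one, add-two estimate is small enough to write down directly. This is a sketch using exact fractions; the function name is hypothetical.

```python
from fractions import Fraction


def laplace_estimate(successes, attempts):
    """Estimate the chance of success next time: (successes + 1) / (attempts + 2)."""
    return Fraction(successes + 1, attempts + 2)


print(laplace_estimate(3, 5))  # 3 out of 5 so far gives 4/7
```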

When balancing favorite experiences and new ones, nothing matters as much as the interval over which we plan to enjoy them.

And…

A sobering property of trying new things is that the value of exploration, of finding a new favorite, can only go down over time, as the remaining opportunities to savor it dwindle.

So when you’re at the start of your interval, you should do more exploration, and when you’re near the end of your interval, you should do more exploitation.

He points out that since Hollywood is doing so many sequels, they seem to be at the end of their lifespan.

Robbins specifically considered the case where there are exactly two slot machines, and proposed a solution called the Win-Stay, Lose-Shift algorithm: choose an arm at random, and keep pulling it as long as it keeps paying off. If the arm doesn’t pay off after a particular pull, then switch to the other one.
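Here is a rough sketch of Win-Stay, Lose-Shift for two machines. The payout probabilities are made-up inputs for the simulation (a real player would not know them), and the function name is my own.

```python
import random


def win_stay_lose_shift(payout_probs, pulls, seed=0):
    """Play a two-armed bandit with Win-Stay, Lose-Shift.

    payout_probs: hypothetical win probability for each of the two arms.
    Returns the list of (arm, won) pairs in the order they were played.
    """
    rng = random.Random(seed)
    arm = rng.randrange(2)  # choose the first arm at random
    history = []
    for _ in range(pulls):
        won = rng.random() < payout_probs[arm]
        history.append((arm, won))
        if not won:
            arm = 1 - arm  # lose: shift to the other arm
        # win: stay on the same arm
    return history
```

The rule only ever remembers the last pull, so a single unlucky pull on a good machine is enough to make it switch away.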

Exploration in itself has value, since trying new things increases our chances of finding the best. So taking the future into account, rather than focusing just on the present, drives us toward novelty.

To try and fail is at least to learn; to fail to try is to suffer the inestimable loss of what might have been.

Chester Barnard

The framework I found, which made the decision incredibly easy, was what I called—which only a nerd would call—a "regret minimization framework." So I wanted to project myself forward to age 80 and say, "Okay, now I’m looking back on my life. I want to have minimized the number of regrets I have." I knew that when I was 80 I was not going to regret having tried this. I was not going to regret trying to participate in this thing called the Internet that I thought was going to be a really big deal. I knew that if I failed I wouldn’t regret that, but I knew the one thing I might regret is not ever having tried. I knew that that would haunt me every day, and so, when I thought about it that way it was an incredibly easy decision.

Jeff Bezos

Upper Confidence Bound algorithms are those that minimize regret. They basically have you select options not based on what’s likely, but by what’s possible. He goes on to say that the best defense against regret is optimism.

Practically, this means selecting possible adventures based on their potential to be good, not factoring in their potential to be bad.
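To make "optimism" concrete, here is a sketch of one common Upper Confidence Bound variant, usually called UCB1. The specific bonus term is an assumption on my part; the book describes the idea rather than this formula.

```python
import math


def ucb1_choice(wins, pulls):
    """Pick the arm with the highest upper confidence bound (UCB1 form).

    wins[i] and pulls[i] are the total reward and play count for arm i.
    """
    # Any arm never tried has an unbounded upside, so try it first.
    for arm, n in enumerate(pulls):
        if n == 0:
            return arm
    total = sum(pulls)

    def upper_bound(arm):
        mean = wins[arm] / pulls[arm]
        # The bonus shrinks as an arm is played more, so optimism fades with evidence.
        bonus = math.sqrt(2 * math.log(total) / pulls[arm])
        return mean + bonus

    return max(range(len(pulls)), key=upper_bound)
```

With `wins=[9, 1]` and `pulls=[10, 2]`, the rarely tried second arm is chosen even though its average is lower, because its plausible upside is still larger.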

To live in a restless world requires a certain restlessness in oneself. So long as things continue to change, you must never fully cease exploring.

Taking the ten-city vacation problem from above, we could start at a "high temperature" by picking our starting itinerary entirely at random, plucking one out of the whole space of possible solutions regardless of price. Then we can start to slowly "cool down" our search by rolling a die whenever we are considering a tweak to the city sequence. Taking a superior variation always makes sense, but we would only take inferior ones when the die shows, say, a 2 or more. After a while, we’d cool it further by only taking a higher-price change if the die shows a 3 or greater—then 4, then 5. Eventually we’d be mostly hill climbing, making the inferior move just occasionally when the die shows a 6. Finally we’d start going only uphill, and stop when we reached the next local max. This approach, called Simulated Annealing, seemed like an intriguing way to map physics onto problem solving.
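The die-rolling schedule in that passage can be sketched fairly literally. Assumptions in this version: lower cost is better (matching the price framing), a tweak is a swap of any two cities, each temperature stage runs for a fixed number of steps instead of stopping exactly at a local optimum, and the best itinerary seen so far is remembered. The `cost` function is a hypothetical input, and the list needs at least two cities.

```python
import random


def anneal_with_die(cities, cost, steps_per_stage=200, seed=0):
    """Die-based cooling schedule (lower cost is better).

    cost: hypothetical function mapping an itinerary (list) to its price.
    """
    rng = random.Random(seed)
    tour = cities[:]
    rng.shuffle(tour)  # "high temperature": a completely random start
    best = tour[:]
    # Thresholds 2..6 accept a worse tweak less and less often; 7 can never
    # be rolled, so the last stage only accepts improvements.
    for threshold in (2, 3, 4, 5, 6, 7):
        for _ in range(steps_per_stage):
            i, j = rng.sample(range(len(tour)), 2)
            candidate = tour[:]
            candidate[i], candidate[j] = candidate[j], candidate[i]
            improves = cost(candidate) < cost(tour)
            if improves or rng.randint(1, 6) >= threshold:
                tour = candidate
            if cost(tour) < cost(best):
                best = tour[:]
    return best
```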

Sorting

This is the first and most fundamental insight of sorting theory. Scale hurts.

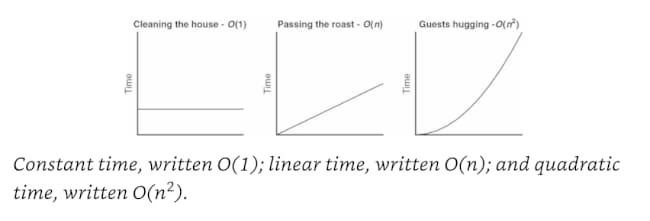

Big-O notation is an indication of how much scale hurts the solving of your problem.

It gets worse from there. There’s "exponential time," O(2^n), where each additional guest doubles your work. Even worse is "factorial time," O(n!), a class of problems so truly hellish that computer scientists only talk about it when they’re joking—as we were in imagining shuffling a deck until it’s sorted—or when they really, really wish they were.

Sorting something that you will never search is a complete waste; searching something you never sorted is merely inefficient. Err on the side of messiness.

The verdict is clear: ordering your bookshelf will take more time and energy than scanning through it ever will.

Caching

He makes an argument that a slower mind in old age could simply be a search problem, because the database is exponentially larger than when you’re 20.

There’s a general concept of clumps of caches, with smaller faster ones close by, a medium fast one nearby, and then a slow but large one with everything.

This is very much like the hierarchy of CPU cache (L1, L2), main memory, hard disk, and cloud storage.

Scheduling

Reducing maximum lateness is one option.

Another is Shortest Processing Time, which is part of Getting Things Done (GTD).

The optimal strategy for that goal is a simple modification of Shortest Processing Time: divide the weight of each task by how long it will take to finish, and then work in order from the highest resulting importance-per-unit-time (call it "density" if you like, to continue the weight metaphor) to the lowest. And while it might be hard to assign a degree of importance to each one of your daily tasks, this strategy nonetheless offers a nice rule of thumb: only prioritize a task that takes twice as long if it’s twice as important.
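The density rule in that quote is a one-line sort. A sketch, with a made-up task list and hypothetical function names:

```python
def by_density(tasks):
    """Order tasks by importance per unit time, highest first.

    tasks: list of (name, weight, duration) tuples; durations must be > 0.
    """
    return sorted(tasks, key=lambda t: t[1] / t[2], reverse=True)


def weighted_completion_time(tasks):
    """Sum of weight * completion time when tasks run in the given order."""
    clock = 0
    total = 0
    for _, weight, duration in tasks:
        clock += duration
        total += weight * clock
    return total


tasks = [("report", 2, 4), ("email", 1, 1), ("taxes", 6, 3)]
ordered = by_density(tasks)  # taxes (density 2), email (1), report (0.5)
```

For this list, the density order gives a weighted completion time of 38, versus 61 for the order the tasks were written in.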

The best time to plant a tree is twenty years ago. The second best time is now. ~ Proverb

It turns out, though, that even if you don’t know when tasks will begin, Earliest Due Date and Shortest Processing Time are still optimal strategies, able to guarantee you (on average) the best possible performance in the face of uncertainty. If assignments get tossed on your desk at unpredictable moments, the optimal strategy for minimizing maximum lateness is still the preemptive version of Earliest Due Date—switching to the job that just came up if it’s due sooner than the one you’re currently doing, and otherwise ignoring it. Similarly, the preemptive version of Shortest Processing Time—compare the time left to finish the current task to the time it would take to complete the new one—is still optimal for minimizing the sum of completion times.

Bayes’s Rule

Laplace’s Law, and it is easy to apply in any situation where you need to assess the chances of an event based on its history. If you make ten attempts at something and five of them succeed, Laplace’s Law estimates your overall chances to be 6/12 or 50%, consistent with our intuitions. If you try only once and it works out, Laplace’s estimate of 2/3 is both more reasonable than assuming you’ll win every time, and more actionable than Price’s guidance (which would tell us that there is a 75% metaprobability of a 50% or greater chance of success).

The mathematical formula that describes this relationship, tying together our previously held ideas and the evidence before our eyes, has come to be known—ironically, as the real heavy lifting was done by Laplace—as Bayes’s Rule.

You still need some previous knowledge (priors) for it to work

The Copernican Principle says that if you want to estimate how long something will go on, look at how long it’s been alive, and add that amount of time

This doesn’t work for things that have a known limit though, like a human age

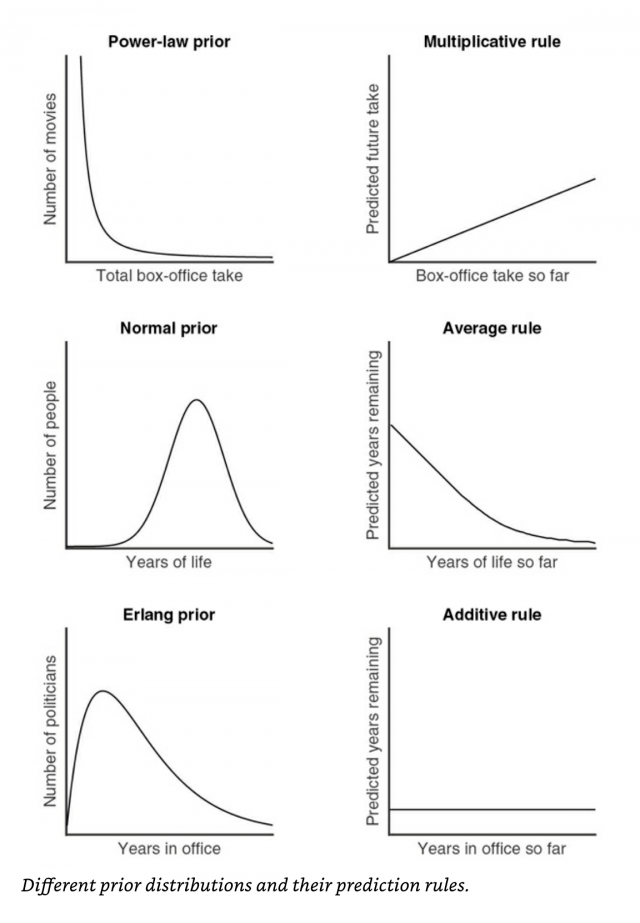

In the broadest sense, there are two types of things in the world: things that tend toward (or cluster around) some kind of "natural" value, and things that don’t.

Power law distributions or scale-free distributions are ranges that can have many scales, so we can’t say that "normal" is any one thing.

And for any power-law distribution, Bayes’s Rule indicates that the appropriate prediction strategy is a Multiplicative Rule: multiply the quantity observed so far by some constant factor. For an uninformative prior, that constant factor happens to be 2, hence the Copernican prediction; in other power-law cases, the multiplier will depend on the exact distribution you’re working with. For the grosses of movies, for instance, it happens to be about 1.4. So if you hear a movie has made $6 million so far, you can guess it will make about $8.4 million overall; if it’s made $90 million, guess it will top out at $126 million.
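As a quick check on the arithmetic, the Multiplicative Rule is just a multiplication. The function name and the default factor of 2 (the Copernican case) are my framing of the quote above.

```python
def multiplicative_prediction(observed_so_far, factor=2.0):
    """Predict a final total as the amount observed so far times a constant."""
    return observed_so_far * factor


# Movie grosses, in millions of dollars, using the 1.4 multiplier from the book.
print(round(multiplicative_prediction(6, 1.4), 1))   # 8.4
print(round(multiplicative_prediction(90, 1.4), 1))  # 126.0
```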

When we apply Bayes’s Rule with a normal distribution as a prior, on the other hand, we obtain a very different kind of guidance. Instead of a multiplicative rule, we get an Average Rule: use the distribution’s "natural" average—its single, specific scale—as your guide. For instance, if somebody is younger than the average life span, then simply predict the average; as their age gets close to and then exceeds the average, predict that they’ll live a few years more. Following this rule gives reasonable predictions for the 90-year-old and the 6-year-old: 94 and 77, respectively.

A third type is Additive, where you just add a constant to the end. Like "five more minutes!", or "20 more hands".

If you want to be a good intuitive Bayesian—if you want to naturally make good predictions, without having to think about what kind of prediction rule is appropriate—you need to protect your priors. Counterintuitively, that might mean turning off the news.

Overfitting

Once you know about overfitting, you see it everywhere. Overfitting, for instance, explains the irony of our palates. How can it be that the foods that taste best to us are broadly considered to be bad for our health, when the entire function of taste buds, evolutionarily speaking, is to prevent us from eating things that are bad? The answer is that taste is our body’s proxy metric for health. Fat, sugar, and salt are important nutrients, and for a couple hundred thousand years, being drawn to foods containing them was a reasonable measure for a sustaining diet.

You can also combat overfitting by penalizing complexity.

One way ML does that is by reducing the weights incrementally until only the strongest signals are considered, also known as Regularization.

The Lasso is an algorithm that penalizes models for the total weight of their factors, so it pulls the weights so low that most factors end up at zero, and only the strongest remain (at low numbers).
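For reference, the Lasso is usually written as ordinary least squares plus a penalty on the sum of the absolute weights. The notation below is the standard textbook form and is not taken from the book: the x_i are the factors for example i, y_i is the observed value, w holds the weights, and λ sets how heavily complexity is penalized.

```latex
\hat{w} = \arg\min_{w} \; \sum_{i=1}^{n} \left( y_i - x_i^{\top} w \right)^2 + \lambda \sum_{j=1}^{p} \lvert w_j \rvert
```

A larger λ pushes more of the weights to exactly zero, which is the "only the strongest remain" behavior.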

Early stopping is an algorithm based on finding the strongest signal, then the next, then the next, instead of just taking all of them at face value to start with

When to Think Less: As with all issues involving overfitting, how early to stop depends on the gap between what you can measure and what really matters. If you have all the facts, they’re free of all error and uncertainty, and you can directly assess whatever is important to you, then don’t stop early. Think long and hard: the complexity and effort are appropriate. But that’s almost never the case. If you have high uncertainty and limited data, then do stop early by all means. If you don’t have a clear read on how your work will be evaluated, and by whom, then it’s not worth the extra time to make it perfect with respect to your own (or anyone else’s) idiosyncratic guess at what perfection might be. The greater the uncertainty, the bigger the gap between what you can measure and what matters, the more you should watch out for overfitting—that is, the more you should prefer simplicity, and the earlier you should stop.

When we start designing something, we sketch out ideas with a big, thick Sharpie marker, instead of a ball-point pen. Why? Pen points are too fine. They’re too high-resolution. They encourage you to worry about things that you shouldn’t worry about yet, like perfecting the shading or whether to use a dotted or dashed line. You end up focusing on things that should still be out of focus. A Sharpie makes it impossible to drill down that deep. You can only draw shapes, lines, and boxes. That’s good. The big picture is all you should be worrying about in the beginning.

The power of relaxation

When optimum solutions are elusive, you can often get most of the benefit by relaxing the requirement for precision.

Constrained optimization is where you are working within a particular set of rules and a scorekeeping measure

The prairie lawyer problem is the same as the traveling salesman problem.

Constraint Relaxation is where you solve the problem you wish you had instead of the one you actually have, and then you see how much this helped you.

For instance, you can relax the traveling salesman problem by letting the salesman visit the same town more than once, and letting him retrace his steps for free. Finding the shortest route under these looser rules produces what’s called the "minimum spanning tree." (If you prefer, you can also think of the minimum spanning tree as the fewest miles of road needed to connect every town to at least one other town.)
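A minimum spanning tree is cheap to compute, which is what makes this relaxation useful. Below is a sketch using Prim's algorithm (my choice; the book does not name a method), with a made-up road map and hypothetical names. It assumes the map is connected.

```python
import heapq


def minimum_spanning_tree(towns, roads):
    """Prim's algorithm. roads: dict mapping (a, b) pairs to distances.

    Returns (total_miles, edges) for a connected, undirected road map.
    """
    neighbors = {town: [] for town in towns}
    for (a, b), miles in roads.items():
        neighbors[a].append((miles, a, b))
        neighbors[b].append((miles, b, a))

    start = towns[0]
    visited = {start}
    frontier = list(neighbors[start])
    heapq.heapify(frontier)
    total, edges = 0, []
    while frontier and len(visited) < len(towns):
        miles, a, b = heapq.heappop(frontier)  # shortest road leaving the tree
        if b in visited:
            continue
        visited.add(b)
        total += miles
        edges.append((a, b))
        for edge in neighbors[b]:
            if edge[2] not in visited:
                heapq.heappush(frontier, edge)
    return total, edges


towns = ["A", "B", "C", "D"]
roads = {("A", "B"): 1, ("B", "C"): 2, ("A", "C"): 4, ("C", "D"): 3, ("B", "D"): 5}
print(minimum_spanning_tree(towns, roads))  # (6, [('A', 'B'), ('B', 'C'), ('C', 'D')])
```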

The final step, as with any relaxation, is to ask how good this solution is compared to the actual best solution we might have come up with by exhaustively checking every single possible answer to the original problem. It turns out that for the invitations problem, Continuous Relaxation with rounding will give us an easily computed solution that’s not half bad: it’s mathematically guaranteed to get everyone you want to the party while sending out at most twice as many invitations as the best solution obtainable by brute force. Similarly, in the fire truck problem, Continuous Relaxation with probabilities can quickly get us within a comfortable bound of the optimal answer.

There are many ways to relax a problem, and we’ve seen three of the most important. The first, Constraint Relaxation, simply removes some constraints altogether and makes progress on a looser form of the problem before coming back to reality. The second, Continuous Relaxation, turns discrete or binary choices into continua: when deciding between iced tea and lemonade, first imagine a 50–50 "Arnold Palmer" blend and then round it up or down. The third, Lagrangian Relaxation, turns impossibilities into mere penalties, teaching the art of bending the rules (or breaking them and accepting the consequences). A rock band deciding which songs to cram into a limited set, for instance, is up against what computer scientists call the "knapsack problem"—a puzzle that asks one to decide which of a set of items of different bulk and importance to pack into a confined volume. In its strict formulation the knapsack problem is famously intractable, but that needn’t discourage our relaxed rock stars. As demonstrated in several celebrated examples, sometimes it’s better to simply play a bit past the city curfew and incur the related fines than to limit the show to the available slot.

Randomness

Sampling is super powerful, and so is simply starting with a random value and moving from there.

The answer may well come from computer science. MIT’s Scott Aaronson says he’s surprised that computer scientists haven’t yet had more influence on philosophy. Part of the reason, he suspects, is just their "failure to communicate what they can add to philosophy’s conceptual arsenal." He elaborates: One might think that, once we know something is computable, whether it takes 10 seconds or 20 seconds to compute is obviously the concern of engineers rather than philosophers. But that conclusion would not be so obvious, if the question were one of 10 seconds versus 10^(10^10) seconds! And indeed, in complexity theory, the quantitative gaps we care about are usually so vast that one has to consider them qualitative gaps as well. Think, for example, of the difference between reading a 400-page book and reading every possible such book, or between writing down a thousand-digit number and counting to that number.

There’s a concept where you try an equation with a piece of data to see if it works, if it does that’s good. Try it with a few more random pieces of data. If they all work then the odds of this not being a good solution continue to fall. It doesn’t mean you’ve found THE solution, but it does mean that the more you do this the more likely that becomes.
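One way to picture this is checking whether two formulas are really the same by plugging in random values. This sketch is my own illustration of the idea; the function name, input range, and trial count are arbitrary assumptions.

```python
import random


def probably_equal(f, g, trials=20, seed=0, low=-10**9, high=10**9):
    """Randomized check that two formulas agree, by sampling inputs.

    A single mismatch proves they differ; repeated matches only make
    equality increasingly likely, never certain.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        x = rng.randint(low, high)
        if f(x) != g(x):
            return False
    return True


print(probably_equal(lambda x: (x + 1) ** 2, lambda x: x * x + 2 * x + 1))  # True
```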

Once you’ve assembled a baseline itinerary, you might test some alternatives by making slight perturbations to the city sequence and seeing if that makes an improvement. For instance, if we are going first to Seattle, then to Los Angeles, we can try doing those cities in reverse order: L.A. first, then Seattle. For any given itinerary, we can make eleven such two-city flip-flops; let’s say we try them all and then go with the one that gives us the best savings. From here we’ve got a new itinerary to work with, and we can start permuting that one, again looking for the best local improvement. This is an algorithm known as Hill Climbing—since the search through a space of solutions, some better and some worse, is commonly thought of in terms of a landscape with hills and valleys, where your goal is to reach the highest peak.
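A sketch of Hill Climbing on an itinerary, assuming the tweak is swapping two neighboring cities and that a lower price is better. The `cost` function is a hypothetical input supplied by the caller.

```python
def hill_climb(tour, cost):
    """Repeatedly apply the best adjacent-city swap until none helps.

    cost: hypothetical function mapping an itinerary to its price (lower is
    better). Returns a local optimum, which may not be the global one.
    """
    tour = list(tour)
    while True:
        best, best_cost = None, cost(tour)
        for i in range(len(tour) - 1):
            candidate = tour[:]
            candidate[i], candidate[i + 1] = candidate[i + 1], candidate[i]
            c = cost(candidate)
            if c < best_cost:
                best, best_cost = candidate, c
        if best is None:
            return tour  # no swap improves things: we are on a peak
        tour = best
```

Because it only ever accepts improvements, this version stops at the first peak it reaches, which is exactly the weakness the randomized variants try to fix.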

Another approach is to completely scramble our solution when we reach a local maximum, and start Hill Climbing anew from this random new starting point. This algorithm is known, appropriately enough, as "Random-Restart Hill Climbing"—or, more colorfully, as "Shotgun Hill Climbing." It’s a strategy that proves very effective when there are lots of local maxima in a problem. For example, computer scientists use this approach when trying to decipher codes, since there are lots of ways to begin decrypting a message that look promising at first but end up being dead ends. In decryption, having a text that looks somewhat close to sensible English doesn’t necessarily mean that you’re even on the right track. So sometimes it’s best not to get too attached to an initial direction that shows promise, and simply start over from scratch. But there’s also a third approach: instead of turning to full-bore randomness when you’re stuck, use a little bit of randomness every time you make a decision. This technique, developed by the same Los Alamos team that came up with the Monte Carlo Method, is called the Metropolis Algorithm. The Metropolis Algorithm is like Hill Climbing, trying out different small-scale tweaks on a solution, but with one important difference: at any given point, it will potentially accept bad tweaks as well as good ones.

James thus viewed randomness as the heart of creativity. And he believed it was magnified in the most creative people. In their presence, he wrote, "we seem suddenly introduced into a seething caldron of ideas, where everything is fizzling and bobbing about in a state of bewildering activity, where partnerships can be joined or loosened in an instant, treadmill routine is unknown, and the unexpected seems the only law." (Note here the same "annealing" intuition, rooted in metaphors of temperature, where wild permutation equals heat.)

When it comes to stimulating creativity, a common technique is introducing a random element, such as a word that people have to form associations with. For example, musician Brian Eno and artist Peter Schmidt created a deck of cards known as Oblique Strategies for solving creative problems. Pick a card, any card, and you will get a random new perspective on your project. (And if that sounds like too much work, you can now download an app that will pick a card for you.) Eno’s account of why they developed the cards has clear parallels with the idea of escaping local maxima: When you’re very in the middle of something, you forget the most obvious things. You come out of the studio and you think "why didn’t we remember to do this or that?" These [cards] really are just ways of throwing you out of the frame, of breaking the context a little bit, so that you’re not a band in a studio focused on one song, but you’re people who are alive and in the world and aware of a lot of other things as well.

Networking

Protocol is how we get on the same page; in fact, the word is rooted in the Greek protokollon, "first glue," which referred to the outer page attached to a book or manuscript.

The breakthrough turned out to be increasing the average delay after every successive failure—specifically, doubling the potential delay before trying to transmit again. So after an initial failure, a sender would randomly retransmit either one or two turns later; after a second failure, it would try again anywhere from one to four turns later; a third failure in a row would mean waiting somewhere between one and eight turns, and so on. This elegant approach allows the network to accommodate potentially any number of competing signals. Since the maximum delay length (2, 4, 8, 16…) forms an exponential progression, it’s become known as Exponential Backoff.
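Exponential Backoff as described in that quote can be sketched in a few lines. The `try_send` callable and the cap on attempts are assumptions for the example, and the delay is only computed here, not actually slept.

```python
import random


def backoff_delay(failures, rng=random):
    """Turns to wait after `failures` consecutive failed attempts.

    The maximum delay doubles with each failure: 2, 4, 8, 16, ...
    """
    return rng.randint(1, 2 ** failures)


def send_with_backoff(try_send, max_attempts=8, rng=random):
    """Call try_send() until it succeeds; return the delays that were used."""
    delays = []
    for failures in range(1, max_attempts + 1):
        if try_send():
            return delays
        delays.append(backoff_delay(failures, rng))
    raise RuntimeError("gave up after repeated failures")
```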

TCP works with a sawtooth, which says more, more, more, SLOW WAY DOWN. More, more, more, SLOW WAY DOWN

ACKs are super important in speed of communication. If you can’t ACK, you don’t know if you’re being heard and thus can’t speak quickly.

This is also why you don’t want to completely eliminate background noise from phones, because it’ll make the speaker think there’s nobody on the other end

UDP says better never than late

Game Theory

A Nash Equilibrium is where neither side would gain by changing strategy, assuming the other side keeps doing what it’s doing.

Every finite two-player game has at least one Nash equilibrium, though it may require mixed (randomized) strategies.

"In poker, you never play your hand," James Bond says in Casino Royale; "you play the man across from you." In fact, what you really play is a theoretically infinite recursion. There’s your own hand and the hand you believe your opponent to have; then the hand you believe your opponent believes you have, and the hand you believe your opponent believes you to believe he has … and on it goes. "I don’t know if this is an actual game-theory term," says the world’s top-rated poker player, Dan Smith, "but poker players call it ‘leveling.’ Level one is ‘I know.’ Two is ‘you know that I know.’ Three, ‘I know that you know that I know.’ There are situations where it just comes up where you are like, ‘Wow, this is a really silly spot to bluff but if he knows that it is a silly spot to bluff then he won’t call me and that’s where it’s the clever spot to bluff.’ Those things happen."

A dominant strategy is the best one no matter what your opponent does.
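Both definitions can be checked mechanically on a small payoff table. This sketch only looks for pure-strategy equilibria, so it can return an empty list for games whose only equilibrium is a mixed one. The payoff numbers are a made-up prisoner's dilemma, and the function names are my own.

```python
def pure_nash_equilibria(payoffs):
    """Find pure-strategy Nash equilibria of a two-player game.

    payoffs[r][c] = (row_player_payoff, column_player_payoff).
    """
    rows, cols = len(payoffs), len(payoffs[0])
    equilibria = []
    for r in range(rows):
        for c in range(cols):
            # Neither player can do better by changing only their own move.
            row_best = all(payoffs[r][c][0] >= payoffs[r2][c][0] for r2 in range(rows))
            col_best = all(payoffs[r][c][1] >= payoffs[r][c2][1] for c2 in range(cols))
            if row_best and col_best:
                equilibria.append((r, c))
    return equilibria


def dominant_row_strategy(payoffs):
    """Return a row that is a best response to every column, or None."""
    rows, cols = len(payoffs), len(payoffs[0])
    for r in range(rows):
        if all(payoffs[r][c][0] >= payoffs[r2][c][0]
               for c in range(cols) for r2 in range(rows)):
            return r
    return None


# Strategy 0 = cooperate, 1 = defect; payoffs are negative years in prison.
dilemma = [[(-1, -1), (-3, 0)],
           [(0, -3), (-2, -2)]]
print(pure_nash_equilibria(dilemma))   # [(1, 1)]
print(dominant_row_strategy(dilemma))  # 1
```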

Redwoods keep getting taller and taller for no reason other than stupid competition, since their canopy would take in the same amount of light if it were lower. There’s just no agreement that would save them from having to grow such a tall trunk.

The Dutch auction keeps lowering the price until someone pays.

The English auction does the opposite and keeps raising the price until only one bidder is still willing to pay.

Named for Nobel Prize–winning economist William Vickrey, the Vickrey auction, just like the first-price auction, is a "sealed bid" auction process. That is, every participant simply writes down a single number in secret, and the highest bidder wins. However, in a Vickrey auction, the winner ends up paying not the amount of their own bid, but that of the second-place bidder. That is to say, if you bid $25 and I bid $10, you win the item at my price: you only have to pay $10.

This incentivizes honesty.
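The second-price rule is easy to express in code. A sketch, with a hypothetical function name and no handling of tied top bids:

```python
def vickrey_auction(bids):
    """bids: dict of bidder -> sealed bid. Returns (winner, price_paid)."""
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    ranked = sorted(bids.items(), key=lambda item: item[1], reverse=True)
    winner = ranked[0][0]
    price = ranked[1][1]  # the winner pays the second-highest bid
    return winner, price


print(vickrey_auction({"you": 25, "me": 10}))  # ('you', 10)
```

Since your own bid only decides whether you win and never what you pay, shading it below your true value can only cost you the item.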

One of the implicit principles of computer science, as odd as it may sound, is that computation is bad: the underlying directive of any good algorithm is to minimize the labor of thought. When we interact with other people, we present them with computational problems—not just explicit requests and demands, but implicit challenges such as interpreting our intentions, our beliefs, and our preferences. It stands to reason, therefore, that a computational understanding of such problems casts light on the nature of human interaction. We can be "computationally kind" to others by framing issues in terms that make the underlying computational problem easier.

I’ve always been about this. This is what curation is. It’s why you should be concise in most things.

Asking someone what they want to do, or giving them lots of options, sounds nice, but it usually isn’t. It usually transfers a burden, from you to them. Don’t transfer burdens. Give them simple options where most of the work is already done.

He calls this Computational Kindness. Beautiful.

Designs should be computationally kind.

Takeaways

When you find yourself stuck making decisions, consult this book and other similar resources, and see if there’s a better way to approach the problem. It could be that a heuristic or algorithm exists that will calm your mind and get you to a better decision at the same time.

In almost every domain we’ve considered, we have seen how the more real-world factors we include—whether it’s having incomplete information when interviewing job applicants, dealing with a changing world when trying to resolve the explore/exploit dilemma, or having certain tasks depend on others when we’re trying to get things done—the more likely we are to end up in a situation where finding the perfect solution takes unreasonably long. And indeed, people are almost always confronting what computer science regards as the hard cases. Up against such hard cases, effective algorithms make assumptions, show a bias toward simpler solutions, trade-off the costs of error against the costs of delay, and take chances. These aren’t the concessions we make when we can’t be rational. They’re what being rational means.

You can find my other book summaries here.

Notes

The best way to have this be useful is to have a before and after algorithm, which you update as you learn.