Napkin Ideas Around What Changes to Expect Post-ChatGPT

If you’re reading this you already know the internet is on fire over the new GPTChatBot from OpenAI >. There are people using it to create full virtual machines >, to be their personal writing coach >, to write terraform, to take an SAT >, generate Pokemon-like characters >, and a thousand other things.

Hat tips to @sasazdelar >, @jhaddix >, and @clintgibler > for some of these conversations.

I’ve had lots of conversations with friends about, "oh, that means this will be possible!", or "oh, think about this that might happen!", so I wanted to capture a few things we’ve come up with here. Please note that some of these are horribly negative in terms of impact to society, and others are possible ways to harvest positivity out of the situation.

1. The startup engine is about to point its sights at human work

There are about to be a ton of new startups—as well as established consulting companies like McKinsey and KPMG and the like—that will build frameworks that leverage GPT (and its competitors) to replace human work. I feel bad about this, but like I mentioned in my Companies as Alaskan Fishing Boats > article, businesses aren’t there to employ people. They’re there to get work done.

I’m sure KPMG would love to let its AI take your "boring" work off your hands.

I also had it recreate a customer report it took me personally 2 hours to create. It nailed it in 10 seconds.

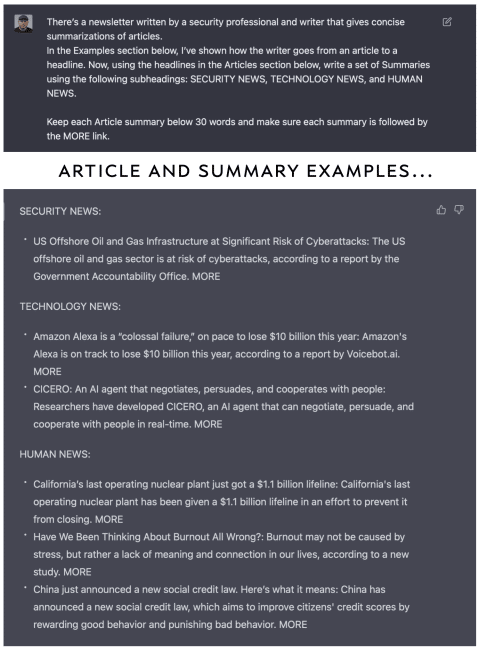

Feeling bad about it, I decided to point the weapon at myself. I had it emulate the dozens of hours of work I do every week for my own newsletter >. With some very simple prompting and some good examples it produced a decent facimile of what I do.

Well, shit.

It created these analysis headlines in 10 seconds

And soon the Bobs will be largely replaced by AI as well.

These companies will walk into businesses like Bob2 and figure out who is doing what, how long it takes them, and figure out how to use their new AI Framework to eliminate the need for human workers. Of course it’ll be called optimization or enhancement or some shit, but we all know what it is.

Looking at what the chatbot can do, we expect the biggest disruption in (obviously) repeatable work. But most work we do is repetitive. Some likely high-impact areas:

Reviewing updates and looking for interesting nuggets or patterns

🤖 For all messages in this Slack channel, extract the most important updates and send them to company leaders in a report with the following sections and tables, including a prioritized list of recommended actions given our stated company goals and current OKRs.

Conducting muti-step follow-ups to analysis

Also, for security issues do the same for re-opening tickets when the fixed condition goes away.

🤖 For all open Jira tickets look in the history for evidence of what completed would look like, check for that condition, and close the ticket using that evidence as the reason.

Continuous monitoring for security or operational purposes

🤖 For all PR’s, evaluate the code submitted for coding errors that can place data at risk. Create and deliver a message to the developer that gives them the problem, it’s location in the code, the implications of doing it that way, and give 1-3 recommendations on doing it better. If there is a company-recommended way of doing it, give that as the singular recommendation.

🤖 Find all instances of sensitive data or tokens being posted in chat and email the poster and their manager pointing them to the company policy and the link to documentation on how to do it securely.

🤖 Using the AWS API below, monitor the authentication configuration for all admins on these accounts. Alert if any of those accounts ever have too much authority or have an authentication level below our company standard.

I think anyone not using GPTBot-like tech to do these tasks in the next few months will be on the path to being replaced by those who are. I don’t imagine this will result in some massive layoff. It’ll be more like a steady trend towards non-replacement as people naturally leave companies. Which will still result in companies needing far fewer people.

2. The talent gap will massively expand

Now I can draw better because I’m better at prompt engineering

And keep in mind this is Day 0 for this tech. Like a few days ago this thing was making pretty pictures.

You know how there’s a wide gap in income and status between the most talented and competent people and those who are less so? Well now imagine those super smart people armed—yes, armed—with AI. For them, AI will be like multiplying their brains and having them work continuously. Or like hiring a giant staff just for them.

This will magnify even further because the best AI will be the most expensive.

So now the lucky people who picked great parents, great genes, a great environment, and great education won’t just have the best opportunities and jobs. Now they’ll have the talent and funds to pay for the best AIs as well. So the best engineers will be better engineers. The best entrepreneurs will have more ideas and move faster to market. And those competing in the same space will win largely based on how well they can leverage AI.

3. Solopreneurs will thrive by having an AI staff

Maybe it’s not all bad news. One thing I can see is it getting a whole lot easier to be a business by yourself, or with just 1-5 employees. People with ideas will be able to jump in and use AI for a lot of sales, marketing, and even customer support.

This would be greatly helped by governments reducing friction on starting and running small businesses.

The employees people do hire will be dynamic generalists who are also good with data and—you guessed it—using AI frameworks. So you’ll buy Salesforce Small Business, or whatever, which will really be a ton of stitched-together AI API calls on the backend, and your employee will connect all the pieces, do the installs, set all the preferences, connect your data, etc. And then do periodic maintanenc and tweaking as the needs of the business change.

If you pick your first couple of employees well, it could easily be the equivalent of having 10-20 people. Of course your competition will be doing the same, so you do have the arms race problem.

4. AI Specialists will be the ultimate multi-tool

A natural question for many reading this will be,

Um, ok, but what the hell should I be re-training into? What should I be telling my kids to learn?

I feel like there are two ways to go here: 1) general, or 2) hyper-specialized. My bet is on general with strong skills in data, basic coding for requesting and manipulating data, and—most importantly—knowledge of how to customize AI systems to solve multiple problems.

Strong specialization is always golden, but the problem is it’ll be hard to pick which strong specialization to go into. Or, more poignantly, it’s hard to know which one is both lucrative and resistant to AI doing it better. If AI is taking over, my bet is that the people good at using it solve problems will be safer than most.

5. Ideas will ascend, with implementation becoming less important

So much of business—and definitely most work that people do, comes down to, "How do we do the thing we’ve been told to do?" With AIs answering more and more of that question, the focus will shift to the new question of, "What should we be doing?". That’s a colossal shift, and it’s one that favors a different type of employee.

So maybe that generalist, liberal-arts education won’t be as much of a waste anymore. Maybe broader education will help people become leaders (and solopreneuers) rather than blind executors of the stated plan. As we see from even the AI art stuff and the first versions of the GPTChat bot, the quality of the results depend heavily on the quality of the instructions. And it takes a special perspective, background, and finesse to provide that type of instruction.

Democratization of the best AIs for idea generation and execution will be essential if we want to avoid the ultimate winner-takes-all.

Unfortunately we should expect a lot of fierce competition around this type of "thinking and prompting". Expect fierce IP battles around what constitutes a human idea vs. one generated by an AI. Without some strong regulation there we’re going to see the best algorithms get protected by the highest prices. Then the biggest and richest companies will have a fleet of AI thinkers working for them as well as the executors.

🤖 Given your analysis of the current market, give me 20 ideas that we can move faster on than our competition that customers are likely to love.

Once again, winner takes all.

6. Some interesting use cases >

Like everyone else this thing has me thinking, and the stuff I keep going back to are implementations that help you do two things:

Survive

Reproduce

Which to me translate into Security and Status. Here are some possible uses:

Perfect Words: Listen to the current conversation I’m having and whisper a perfect phrase to say in my ear. Think: trying to look smart or trying to win someone over.

Friendly Voice: Listen for people being mean to me, and say something nice in the voice of my Better Self, or my therapist, to counteract it.

What Would Mama Say?: Ask dead loved ones what they think you should do in a given situation. Creepy, and already explored in Black Mirror, but inevitable. And extremely easy to do using even the existing bot.

OCR Assist: Look at a word problem on a page and make a capture gesture. The image gets saved, parsed using OCR, submitted to the API as a question, and answered in your ear or by text.

Universal Translator: Listen for all non-native-language conveersation in the area and provide voice or text translations in slight-delay-time.

As exciting as this first version is, I’m 37x more excited about future versions—especially once they do images and video as well as text—and what people are going to build on top.

A few random thoughts >

A few unsorted musings.

As awesome as GPTChat is, keep in mind that these Transformer models are genius at making the thing look correct. It often is, and that’s stunning. But it also oftentimes looks perfect while being complete garbage. Don’t run GPTChat output in prod, is what I’m saying. You’ll need another instance to help find you a job.

This is going to be a massive boon for A/B testing scenarios. You can have AI generate a number of ideas and send them into a testing or polling or survey type of environment where they can be tested against reality. I.e., extremely fast idea/product iteration.

One thing you should consider adding often to your instructions is the command to "explain your results". We’ve heard for a couple of years now that one major problem with ML is that it can’t explain how it got to its answer, but this iteration of the tech is quite good at it. Usually. See above. As an example, I created a system using this tech that reads security news stories and tells me if it was a valid incident, who the attacker was, who the target was, what the attack technique was, and—impressively—what the business impact was on a scale of HIGH, MEDIUM, or LOW. For that piece I told it to explain that rating, and it basically never missed. Blew me away.

Also keep in mind that this tech is really bad at lots of different kinds of math. Not sure how long that’ll be the case, but it’s definitely true in December of 2022. So once again, don’t bank on it for things like that.

I use a variation of a guideline explained to me by an expert, which is to imagine this thing like Yoda rather than Einstein. Einstein does math. Yoda has wisdom. Don’t ask Yoda or GPT to do your taxes; they’ll disappoint you.

The idea of helping a SOC analyst with AI was an empty promise and sad joke for a long time, and that seems about to end. This type of tech will be able to find needles in haystacks, but questions remain around scalability and pricing given the amount of log data streaming in from various sources.

Hollywood seems to be in significant trouble. I mean it already was because it’s surviving on the fumes of sequels, but once we can combine this type of creativity with the ability to make animation and video, why would we wait multiple years and pay millions for mediocre stories?

Being a long-time role-player I’m excited what this is going to do for generating campaign ideas, monster ideas, plot elements, and even character interactions. For example, having a character in a prompt and entering in what the PC says to them. The GM doesn’t need to (and shouldn’t) use everything that comes back, but it could be a wonderful source of inspiration. That’s the recurring theme for art: some part of it gets completely destroyed, but many elements of it get better because this tech will function as an inspiration muse.

Don’t forget that the results from these AIs are quite random. In other words, you can easily get back something different on each run even given the same prompt. We saw that with the AI Art as well, but something about these new ChatGPT results make us feel like they’re more solid. They aren’t. At least not yet. It’s still a magic-8-ball in that way, but with nearly infinite responses.