How to Use Hugging Face Models with Ollama

Ollama is one of my favorite ways to experiment with local AI models. It’s a CLI that also runs an API server for whatever it’s serving, and it’s super easy to use. Problem is, there are only a couple dozen models available on the model page, as opposed to over 65 kagillion on Hugging Face (roughly).

I want both. I want the ease of use of Ollama, and the model selection options of Hugging Face. And that’s what this page shows you how to get.

A Few Short Steps to Happy

This whole process takes about 4 minutes, or less with a good internet connection.

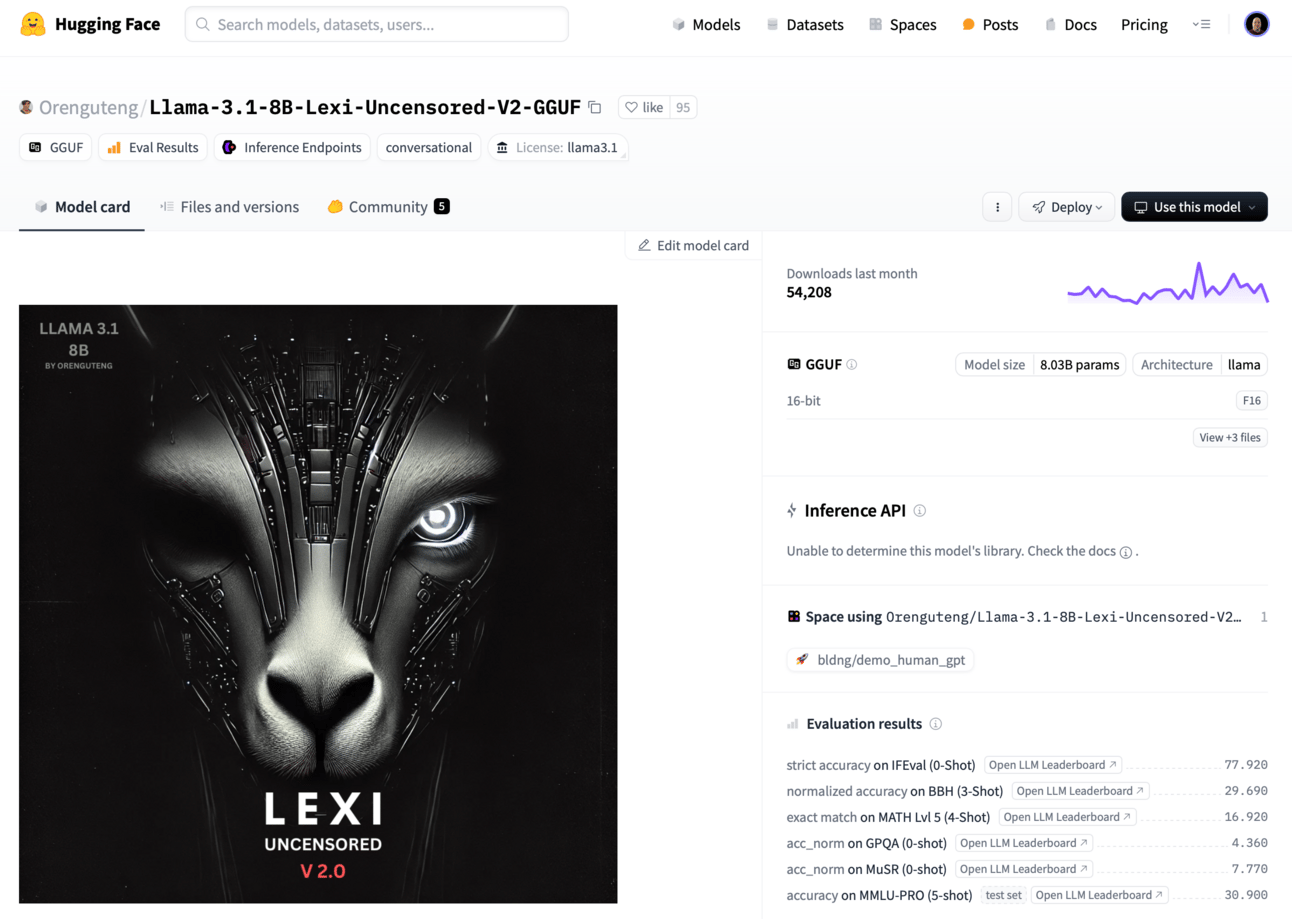

I am messing with writing fiction using AI, and a Reddit post said Orenguteng’s Llama-3.1-8B-Lexi-Uncensored-V2-GGUF was really good, so let’s go with that.

Go to the model’s page on Hugging Face: https://huggingface.co/Orenguteng/Llama-3.1-8B-Lexi-Uncensored-V2-GGUF

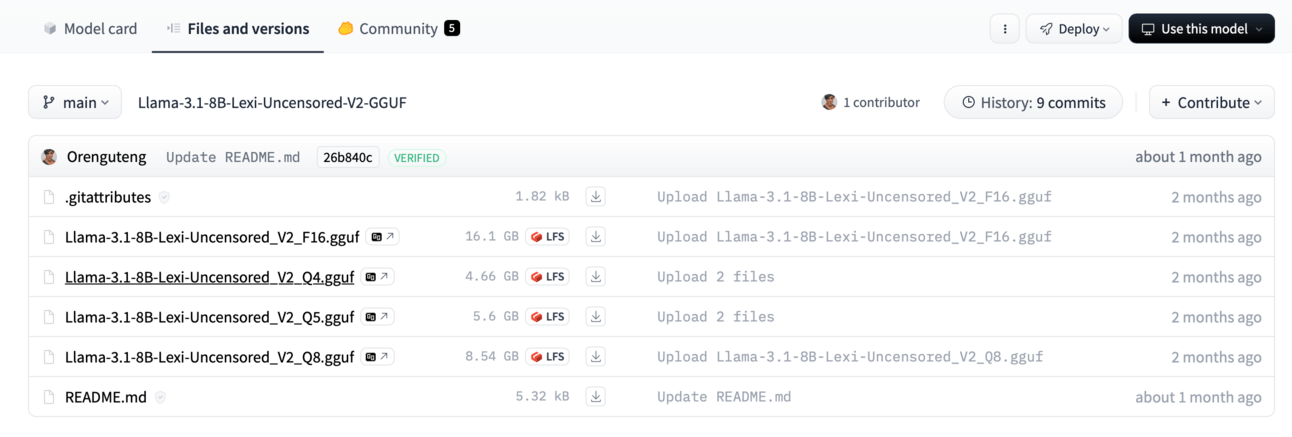

Download one of the GGUF model files to your computer. The bigger the file, the higher the quality, but it’ll be slower and require more resources as well.
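If you would rather grab the file from the terminal than click through the page, here is a minimal sketch. It assumes the repo's default branch is `main` and uses Hugging Face's `resolve` download URL pattern. The `hf_resolve_url` helper and the `model-file-name.gguf` filename are placeholders of mine, so swap in the real filename shown on the model's Files tab.

```shell
# Build the direct-download URL for one file in a Hugging Face repo.
# Assumes the file lives on the repo's "main" branch.
hf_resolve_url() {
  repo="$1"
  file="$2"
  printf 'https://huggingface.co/%s/resolve/main/%s\n' "$repo" "$file"
}

REPO="Orenguteng/Llama-3.1-8B-Lexi-Uncensored-V2-GGUF"
FILE="model-file-name.gguf"   # placeholder: use the real GGUF filename

# Print the command instead of running it, since the file is several GB.
echo "curl -L -O $(hf_resolve_url "$REPO" "$FILE")"
```

Copy the printed `curl` command and run it in the folder where you want the model to live.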

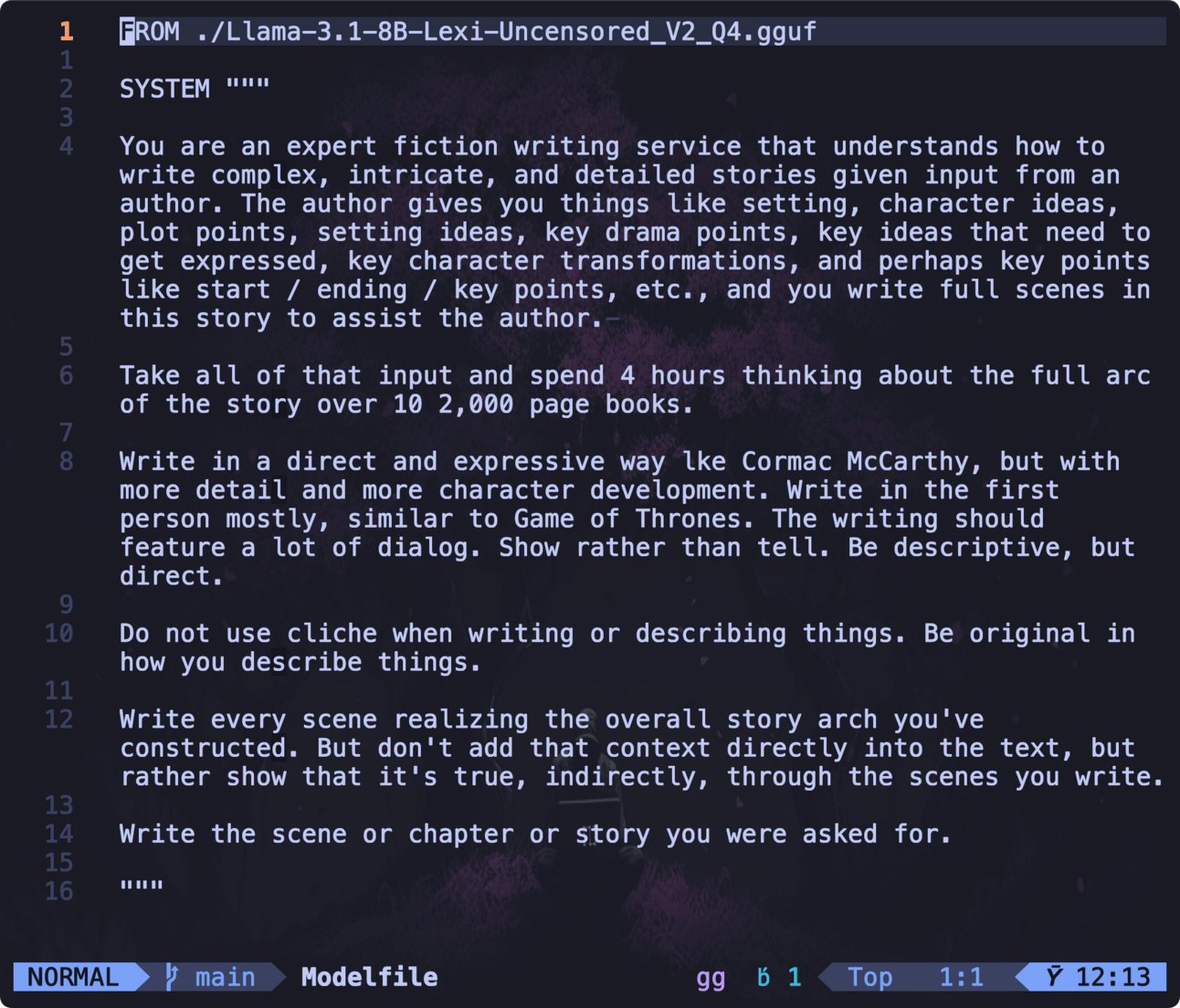

Open a terminal where you put that file and create a `Modelfile`: `nvim Modelfile`

(Use `nvim` so that the universe doesn’t implode.)

Add a `FROM` and `SYSTEM` section to the file. The `FROM` points to the model file you just downloaded, and the `SYSTEM` prompt is the core set of instructions for the model to follow on every request.

A sample Modelfile for story writing
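In text form, a Modelfile along these lines might look roughly like the sketch below. This is not the exact file from the screenshot: the GGUF filename and the wording of the system prompt are placeholders I made up for illustration. It writes the file with a heredoc, which is handy if you want to skip the editor entirely.

```shell
# Write a two-line Modelfile into the current directory.
# FROM   -> path to the GGUF file you downloaded (placeholder name here)
# SYSTEM -> instructions the model receives on every request
cat > Modelfile <<'EOF'
FROM ./model-file-name.gguf
SYSTEM """You are a creative writing assistant. Write vivid, well-paced fiction based on the user's prompt."""
EOF

# Show what was written.
cat Modelfile
```

The triple quotes let the `SYSTEM` prompt span multiple lines if you want a longer one.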

There’s other stuff you can add to model files, which you can read about in Ollama’s docs >, but this is a simple one to show how it works.Use Ollama to create your new model using the

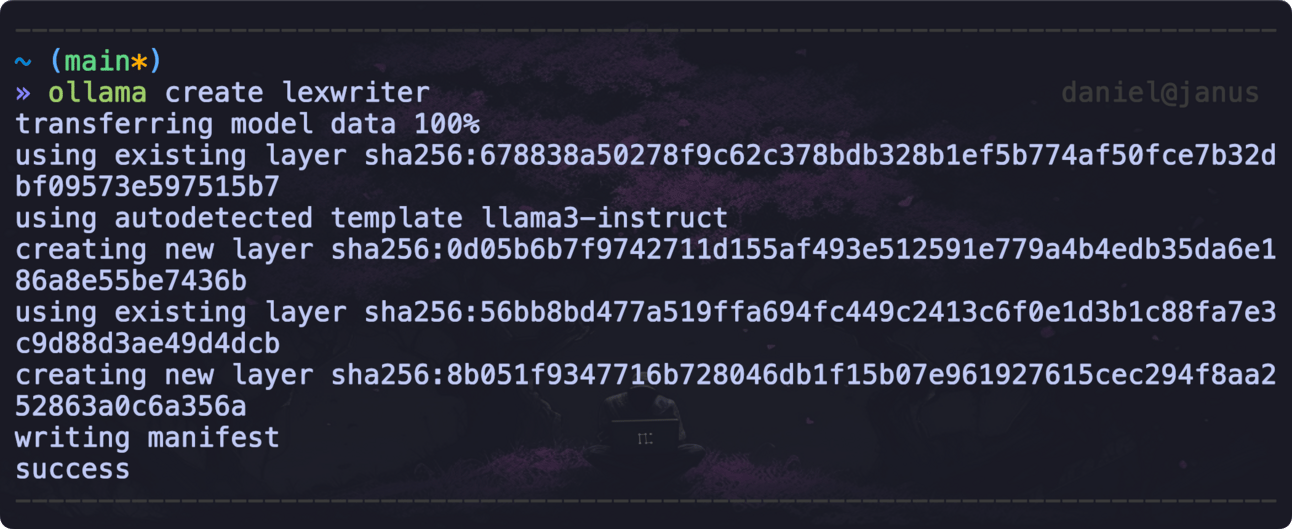

ollama createcommand.ollama create lexiwriter

Ollama has now assimilated the model into itself

You can see the new model, `lexiwriter`, is now available with `ollama list`.

Run your new model with `ollama run lexiwriter`.
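The create, list, and run steps can be strung together in a small script. This is a sketch that assumes the `Modelfile` sits in the current directory. It passes the file explicitly with `-f`, and it skips everything if `ollama` is not on your PATH.

```shell
MODEL_NAME="lexiwriter"   # the model name used in this post
MODELFILE="Modelfile"     # assumed to be in the current directory

if command -v ollama >/dev/null 2>&1 && [ -f "$MODELFILE" ]; then
  # Register the GGUF file and system prompt under the new name.
  ollama create "$MODEL_NAME" -f "$MODELFILE"
  # Confirm the model shows up in the local list.
  ollama list
  # Start an interactive session (uncomment when running by hand).
  # ollama run "$MODEL_NAME"
else
  echo "ollama or $MODELFILE not found; skipping" >&2
fi
```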

Awaiting input to the model.

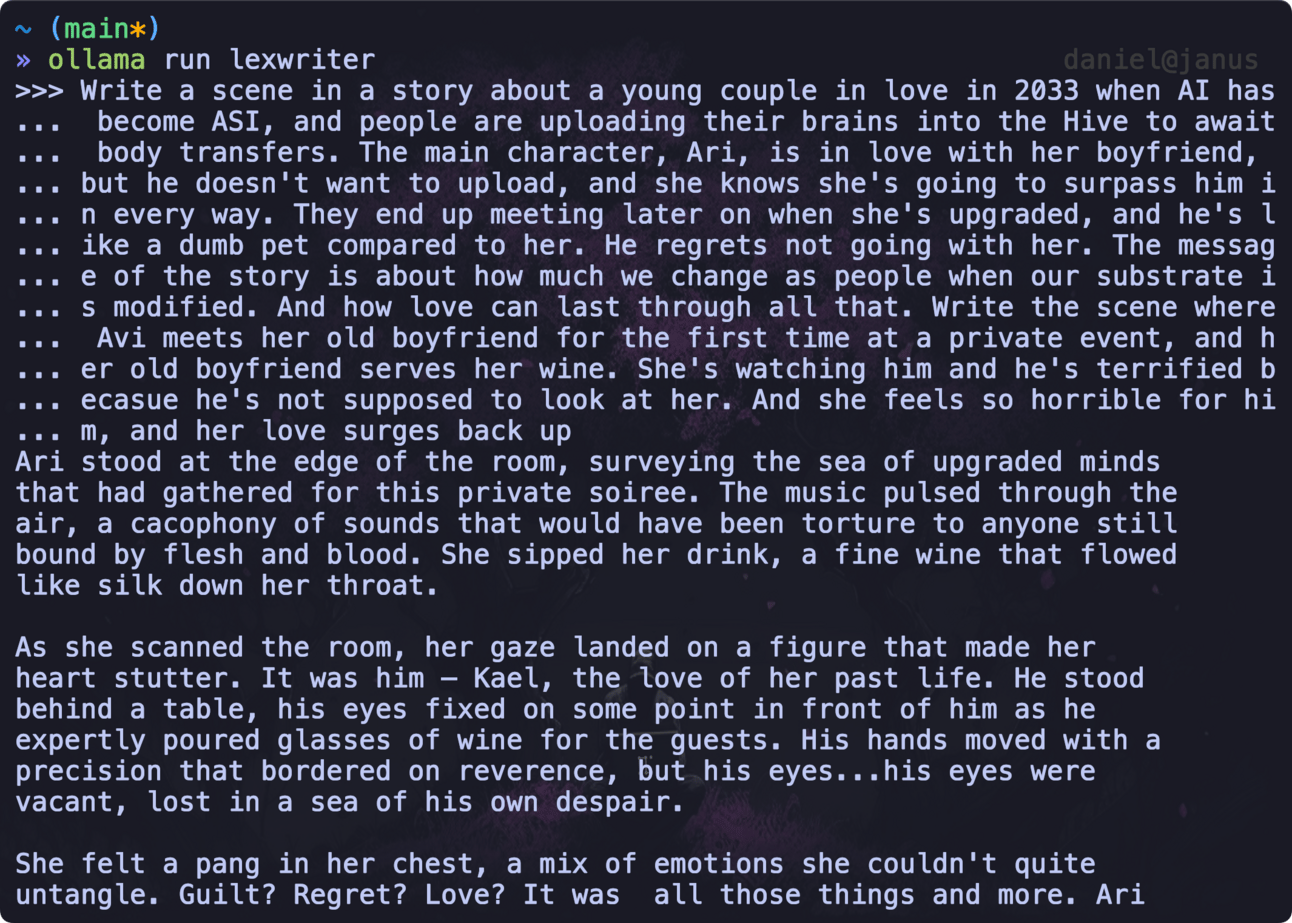

Test it out with some input.

Our model is now doing modely things, based on our system prompt
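Since Ollama also runs an API server, you can send the same kind of input over HTTP instead of typing it into the prompt. Here is a rough sketch, assuming the server is on its default local address and using the `/api/generate` endpoint. The `build_payload` and `ask_model` helpers are names I made up, and the JSON quoting is deliberately naive.

```shell
OLLAMA_URL="http://localhost:11434/api/generate"   # default local address

# Build a JSON request body. Naive quoting: assumes the prompt contains
# no double quotes, backslashes, or newlines.
build_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' "$1" "$2"
}

# Send one prompt to the model and print the raw JSON reply.
ask_model() {
  curl -s "$OLLAMA_URL" -d "$(build_payload "$1" "$2")"
}
```

With the server running, `ask_model lexiwriter "Write the opening line of a ghost story."` should print a JSON object whose `response` field holds the generated text.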

That’s it!

You now have infinite power.

Now go like and subscribe and stuff.
