How to Become a Superforecaster

I just finished Steven Pinker’s latest book, Enlightenment Now, which turned out to be fantastic. I was frustrated in the first two-thirds of the book for reasons I won’t go into here (it seemed too positive while ignoring negatives), but the final several chapters were spectacular.

Those chapters mostly focused on science and reason, and why they’re so important and our best path forward—despite being flawed in their own ways.

My favorite gem in the book was a passage on superforecasters, part of a larger section about how bad we are at making predictions. Here are a few captures from that section.

Though examining data from history and social science is a better way of evaluating our ideas than arguing from the imagination, the acid test of empirical rationality is prediction. ~ Steven Pinker

He talked about the work of Philip Tetlock, a psychologist who in the 1980s ran forecasting tournaments in which hundreds of analysts, academics, writers, and regular people were asked to predict the likelihood of possible future events.

Tetlock’s Book on Superforecasting

In general, the experts did worse than regular people. And the closer to their expertise the subject was, the worse their predictions.

Indeed, the very traits that put these experts in the public eye made them the worst at prediction. The more famous they were, and the closer the event was to their area of expertise, the less accurate their predictions turned out to be. ~ Steven Pinker

But there were some who predicted far better than both the loud, confident experts and the average person. Tetlock called them superforecasters.

Pragmatic experts who drew on many analytical tools, with the choice of tool hinging on the particular problem they faced. These experts gathered as much information from as many sources as they could. When thinking, they often shifted mental gears, sprinkling their speech with transition markers such as "however," "but," "although," and "on the other hand." They talked about possibilities and probabilities, not certainties. And while no one likes to say "I was wrong," these experts more readily admitted it and changed their minds. ~ Pinker / Tetlock

I absolutely love this. But it gets better. Here’s a list of characteristics that superforecasters have, according to Tetlock:

They are in the top 20% of intelligence, but don’t have to be at the very top

Comfortable thinking in guesstimates

They have the personality trait of Openness (which is associated with IQ, btw)

They take pleasure in intellectual activity

They appreciate uncertainty and like seeing things from multiple angles

They distrust their gut feelings

Neither left nor right wing

They’re not necessarily humble, but they’re humble about their specific beliefs

They treat their opinions as "hypotheses to be tested, not treasures to be guarded"

They constantly attack their own reasoning

They are aware of biases and actively work to oppose them

They are Bayesian, meaning they update their current opinions with new information

Believe in the wisdom of crowds to improve upon or discover ideas

They strongly believe in the role of chance as opposed to fate

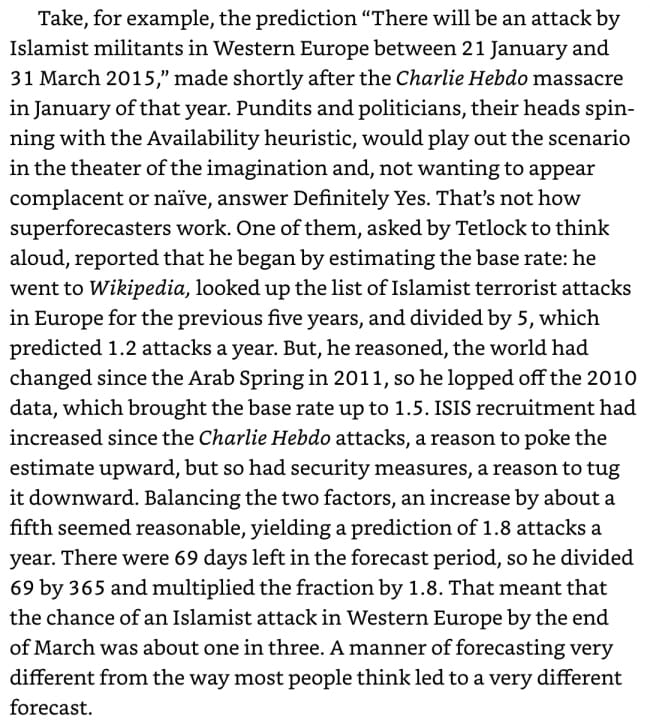

Remarkable! Especially the Bayesian part. And he gives an example:

From Steven Pinker’s Enlightenment Now
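To make the Bayesian habit concrete, here is a minimal sketch of a single belief update using Bayes' rule. The function name `bayes_update` and every number in it are my own illustrative assumptions, not figures from Pinker or Tetlock. The point is just the mechanic: start with a prior, then nudge it up or down depending on how much more likely the new evidence is if you're right than if you're wrong.

```python
def bayes_update(prior: float, p_evidence_if_true: float, p_evidence_if_false: float) -> float:
    """Return P(hypothesis | evidence) using Bayes' rule.

    prior               -- belief in the hypothesis before seeing the evidence
    p_evidence_if_true  -- chance of seeing this evidence if the hypothesis is true
    p_evidence_if_false -- chance of seeing this evidence if the hypothesis is false
    """
    numerator = p_evidence_if_true * prior
    denominator = numerator + p_evidence_if_false * (1.0 - prior)
    if denominator == 0:
        raise ValueError("evidence is impossible under both hypotheses")
    return numerator / denominator


# Hypothetical example: start at 10% and revise as three pieces of news arrive.
# Each tuple is (P(news | hypothesis true), P(news | hypothesis false)).
belief = 0.10
news_items = [(0.8, 0.4), (0.7, 0.35), (0.2, 0.6)]  # made-up likelihoods

for p_true, p_false in news_items:
    belief = bayes_update(belief, p_true, p_false)
    print(f"updated belief: {belief:.3f}")
```

Notice that evidence which is equally likely either way (say, 0.5 and 0.5) leaves the belief exactly where it was, and that no single piece of news swings the estimate to 0 or 1. That is the small-steps updating the list describes.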

As for the belief in fate vs. chance question, Tetlock and Mellers rated people on a number of questions that gave them a Fate Score. The questions covered beliefs like: everything works according to God’s plan, everything happens for a reason, there are no accidents, everything is inevitable, and so on, along with the role of randomness.

The average American scored (probably not anymore, I’m guessing) somewhere in the middle. An undergraduate at a top university scored a bit lower (better), a decent forecaster (as empirically tested by predictions and results) scored lower still, and the superforecasters scored lowest of all, meaning they thought the world was the most random!

Holy crap. Phenomenal.

The forecasters who did the worst were the ones with Big Ideas—left-wing or right-wing, optimistic or pessimistic—which they held with an inspiring (but misguided) confidence. ~ Steven Pinker

As someone who already holds a lot of these cautious, tentative views on reality, and who is willing to update them often, this felt good to read. It also reminded me to reinforce my own tendencies to doubt myself, and to be even more loose with my ideas.

It reminded me of another great quote on this, which people like Marc Andreessen use often:

Strong opinions, loosely held.

Overall the book turned out to be excellent, but this is the most powerful concept I’ll take from it.

Summary

Most people are horrible at predictions, and most experts are even worse.

The closer the topic is to their field, the worse the expert usually is at predictions.

Top predictors have a particular set of attributes.

They are high in Openness, they enjoy intellectual activity, they are comfortable with uncertainty, they distrust their gut, they’re neither left nor right, they treat their beliefs as hypotheses, they are Bayesian, they believe in the wisdom of crowds, and they strongly believe in chance rather than fate.

If you want to be better at predicting things, and generally not be the idiot that makes wild predictions that don’t come true, do your best to adopt and constantly reinforce these behaviors.