Data Processing Using the UNIX Philosophy

Source: Apache Kafka, Samza, and the Unix Philosophy of Distributed Data

This is a fantastic article that mirrors how I like to think of operating systems and data. Let me try to capture some of my takeaways from it:

Kafka is a scalable, distributed message broker. It takes stuff in from one or many places, and it puts that stuff out to one or many places. Kafka deals in messages instead of byte streams.

Samza is a stream processing framework: its jobs read messages from input streams and write results to output streams, handling the plumbing so that senders and receivers understand each other's messages.

MapReduce is a framework for processing parallelizable problems across huge datasets using a large number of computers.
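To make the MapReduce idea concrete, here is a minimal single-process sketch of the classic word count. It is only an illustration of the map, shuffle, and reduce steps; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are my own, and a real framework would spread each phase across many machines.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document):
    """Emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Group values by key, as a framework would between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Collapse all values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    # Each document could be mapped on a different machine; here we just loop.
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


counts = word_count(["the pipe", "the log the stream"])
print(counts)
```

The point is that the map and reduce functions never need to know how the data is split up, which is what makes the problem parallelizable.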

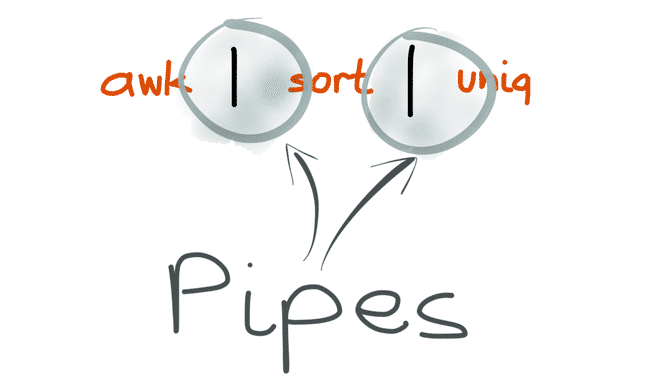

Kafka is like the Unix pipe, and Samza is like a library that helps you read stdin and write stdout.
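As a rough picture of the Unix side of that analogy, here is a small sketch of a filter that reads lines from an input stream and writes transformed lines to an output stream. The function name `uppercase_filter` is hypothetical, and the in-memory streams stand in for stdin and stdout so the example runs on its own; this is not Samza's API.

```python
import io
import sys


def uppercase_filter(source=None, sink=None):
    """Read lines from source, write the uppercased lines to sink.

    Defaults to stdin/stdout, so the same function works in a shell pipeline.
    """
    source = sys.stdin if source is None else source
    sink = sys.stdout if sink is None else sink
    for line in source:
        sink.write(line.upper())


# Demo with in-memory streams standing in for stdin and stdout.
fake_stdin = io.StringIO("hello\nworld\n")
fake_stdout = io.StringIO()
uppercase_filter(fake_stdin, fake_stdout)
print(fake_stdout.getvalue(), end="")
```

The filter knows nothing about who produced its input or who will read its output, which is exactly what lets a pipe connect it to any other program.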

So the idea is to create specialized parts of a larger system, each able to produce content for, and read content from, any other part of the system. And each part can use whatever technology suits its job.

And this is what LinkedIn created (and uses) Kafka for. They have one Kafka stream that goes to anti-abuse, analytics, relevance, monitoring, and other jobs. Each of those is done in its own way and managed by a different team.
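Here is a toy sketch of that one-stream, many-readers shape. It assumes a simple in-memory append-only log where every consumer tracks its own read offset; the `Log` and `Consumer` classes and the sample events are hypothetical stand-ins, not Kafka's client API.

```python
class Log:
    """Append-only list of messages, shared by all consumers."""

    def __init__(self):
        self._messages = []

    def append(self, message):
        self._messages.append(message)

    def read_from(self, offset):
        """Return every message at or after the given offset."""
        return self._messages[offset:]


class Consumer:
    """Reads the shared log at its own pace, with its own handler."""

    def __init__(self, name, log, handler):
        self.name = name
        self.log = log
        self.handler = handler
        self.offset = 0  # each consumer remembers where it left off
        self.results = []

    def poll(self):
        for message in self.log.read_from(self.offset):
            self.results.append(self.handler(message))
            self.offset += 1


log = Log()
for event in [
    {"user": "alice", "action": "login"},
    {"user": "bob", "action": "spam"},
    {"user": "alice", "action": "view"},
]:
    log.append(event)

# Two teams, two jobs, one stream.
analytics = Consumer("analytics", log, lambda e: e["action"])
anti_abuse = Consumer("anti-abuse", log, lambda e: e["action"] == "spam")
analytics.poll()
anti_abuse.poll()

# A new event arrives; only analytics has caught up so far.
log.append({"user": "carol", "action": "login"})
analytics.poll()
```

Reading never removes anything from the log, so a slow or newly added consumer cannot interfere with the others.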

Fascinating. It’s Unix for large scale data operations.

I want my databases to work the same way.