ATHI — An AI Threat Modeling Framework for Policymakers

My whole career has been in Information Security, and I began thinking a lot about AI in 2015. Since then I've done multiple deep dives on ML/Deep Learning, joined an AI team at Apple, and stayed extremely close to the field. Then late last year I left my main job to go full-time working for myself—largely with AI.

Most recently, my friend Jason Haddix and I joined the board of the AI Village at DEFCON to help think about how to illustrate and prevent dangers from the technology.

Many are quite worried about AI. Some are worried it'll take all the jobs. Others worry it'll turn us into paperclips, or worse. And others can't shake the idea of Schwarzenegger robots with machine guns.

This worry is not without consequences. When people get worried they talk. They complain. They become agitated. And if it's bad enough, government gets involved. And that's what's happening.

People are disturbed enough that the US Congress is looking at ways to protect people from harm. This is all good. They should be doing that.

The problem is that we seem to lack a clear framework for discussing the problems we're facing. And without such a framework we face the risk of laws being passed that 1) won't address the issues, 2) will cause their own separate harms, or both.

An AI Threat Modeling framework

What I propose here is that we find a way to speak about these problems in a clear, conversational way.

Threat Modeling is a great way to do this. It takes many different attacks, possibilities, and possible negative outcomes and turns them into clear language that people understand.

"In our e-commerce website, 'BuyItAll', a potential threat model could be a scenario where hackers exploit a weak session management system to hijack user sessions, gaining unauthorized access to sensitive customer information such as credit card details and personal addresses, which could lead to massive financial losses for customers, reputational damage for the company, and potential legal consequences."

A typical Threat Model approach for technical vulnerabilities

This type of approach works because people think in stories—a concept captured well in The Storytelling Animal: How Stories Make Us Human, and many other works in psychology and sociology.

A 20-page academic paper might have tons of data and support for an idea. A table of facts and figures might perfectly spell out a connection between X and Y. But what seems to resonate best is a basic structure of: This person did this, which resulted in this, which caused this, that had this effect.

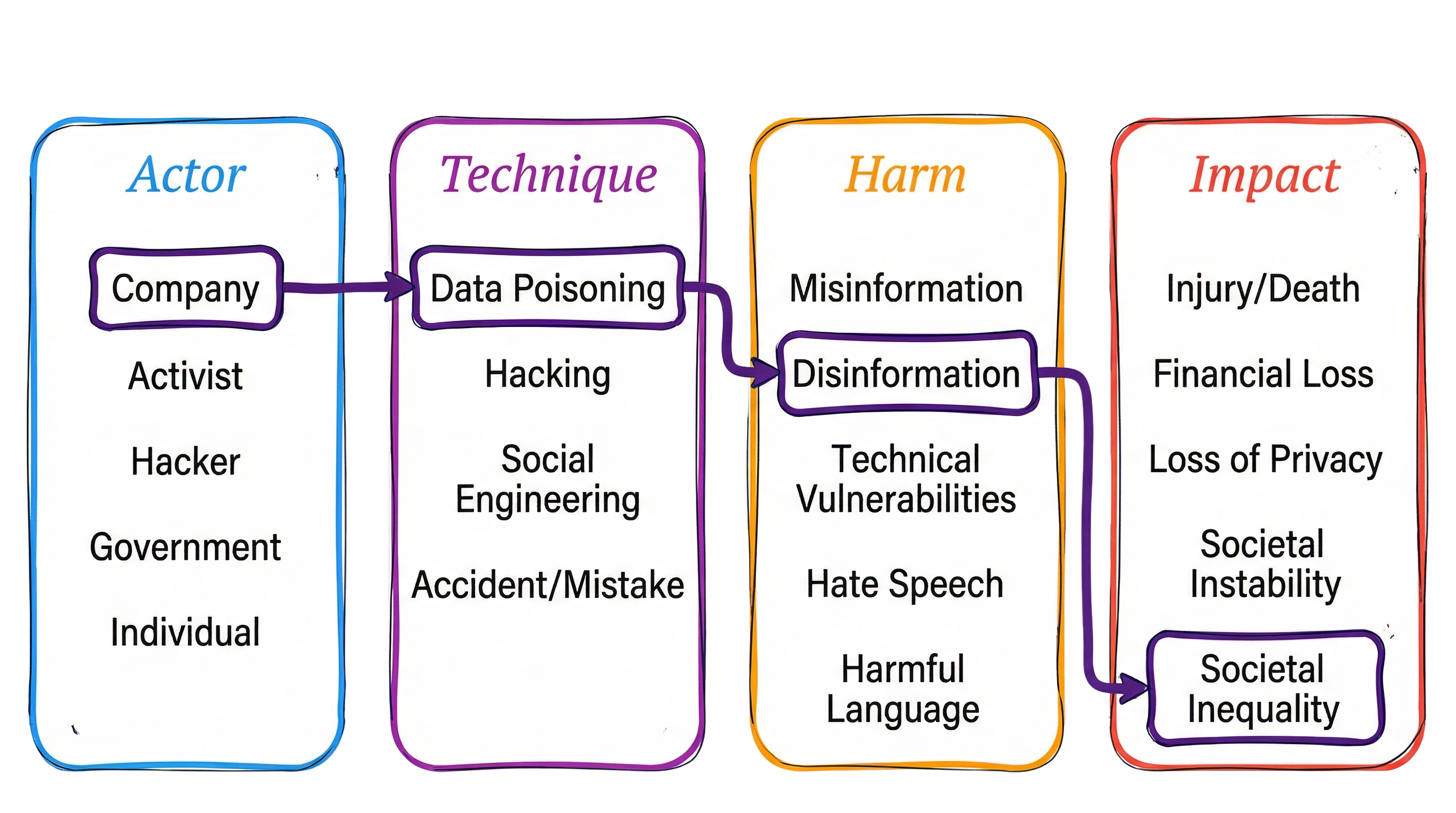

ATHI Structure = Actor, Technique, Harm, Impact

And so that is precisely the structure I propose we use for talking about AI harm. In fact we can use this for many different types of threats, including cyber, terrorism, etc. Many such schemas exist already in their own niches.

I think we need one for AI because it's so new and strange that people aren't calmly using such schemas to think about the problem. And many of the existing schemas are quite intricate, terminology-heavy, and specific to one particular niche.

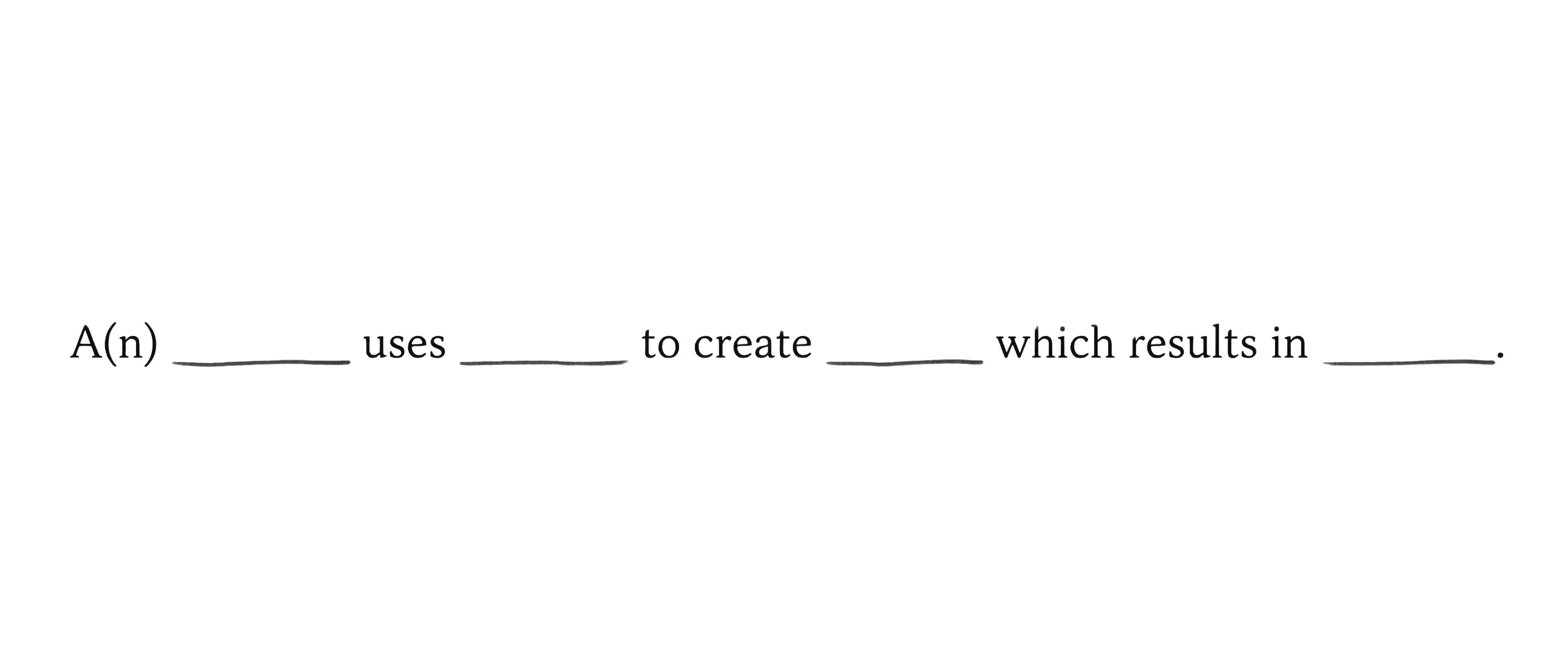

Basic structure

This ATHI approach is designed to be extremely simple and clear. We just train ourselves to articulate our concerns in a structure like this one.

Filled-in structure

So for poisoning of data that is used to train models it would look something like this in sentence form:

Or like this in the chart form.

Note that the categories you see here in each section are just initial examples. Many more can/should/will be added to make the system more accurate. We could even add another step between Technique and Harm, or between Harm and Impact.

The point is to think and communicate our concerns in this logical and structured way.
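As a rough illustration of the structure described above, here is a minimal Python sketch that stores one concern as an Actor, Technique, Harm, and Impact, and renders it as a sentence. The class name `AthiScenario`, the `to_sentence` method, the sentence template, and the example values are all assumptions made for illustration; they are not taken from the ATHI repo or from the original diagrams.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AthiScenario:
    """One concern expressed in the four ATHI parts (hypothetical class)."""
    actor: str
    technique: str
    harm: str
    impact: str

    def to_sentence(self) -> str:
        # Assumed template: actor -> technique -> harm -> impact.
        return (
            f"{self.actor} uses {self.technique}, "
            f"which results in {self.harm}, "
            f"leading to {self.impact}."
        )


# Placeholder values for the data-poisoning case; only the technique
# comes from the text, the rest is invented for the example.
scenario = AthiScenario(
    actor="An attacker",
    technique="poisoning of data that is used to train models",
    harm="a model that gives manipulated answers",
    impact="loss of trust in systems built on that model",
)

print(scenario.to_sentence())
```

Keeping all four fields required is a deliberate choice in this sketch: a concern that cannot name its actor or its impact is not yet stated clearly enough to discuss.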

Recommendation

What I recommend is that we start trying to have these AI Risk conversations in a more structured way. And that we use something like ATHI to do that. If there's something better, let's use that. Just as long as it has the following characteristics:

- It's Simple

- It's Easy to Use

- It's Easy to Adjust as needed while still staying simple and easy to use.

So when you hear someone say, "AI is really scary!", try to steer them towards this type of thinking. Who would do that? How would they do it? What would happen as a result? And what would be the effects of that happening?

Then you can perhaps make a list of their concerns—starting with the impacts—and look at which are worst in terms of danger to people and society.

I think this could be extraordinarily useful for thinking clearly during the creation of policy and laws.
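The listing-and-ranking step described above could be sketched like this in Python. The `Concern` type, the `rank_by_impact` function, the 1 to 5 `severity` scale, and the sample entries are all hypothetical; the article does not define a scoring scheme, so treat the numbers as placeholders that a group would agree on in discussion.

```python
from typing import List, NamedTuple


class Concern(NamedTuple):
    """One ATHI-style concern plus an assumed severity score."""
    actor: str
    technique: str
    harm: str
    impact: str
    severity: int  # hypothetical scale: 1 (minor) to 5 (worst)


def rank_by_impact(concerns: List[Concern]) -> List[Concern]:
    # Sort so the concerns judged most dangerous come first.
    return sorted(concerns, key=lambda c: c.severity, reverse=True)


# Invented sample entries, for illustration only.
concerns = [
    Concern("Actor A", "Technique A", "Harm A", "Impact A", severity=2),
    Concern("Actor B", "Technique B", "Harm B", "Impact B", severity=5),
    Concern("Actor C", "Technique C", "Harm C", "Impact C", severity=3),
]

ranked = rank_by_impact(concerns)
for c in ranked:
    print(f"[{c.severity}] {c.impact} (via {c.technique} by {c.actor})")
```

Printing the impact first mirrors the suggestion to start the conversation from the effects on people and society and work backwards to the actor.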

Summary

- AI threats are both new and considerable, so it's hard to think about them clearly.

- Threat Modeling has been used to do this for other security arenas for decades, and we should use something similar for AI.

- Something simple like ATHI is one way to apply that approach to AI.

- Hopefully the ability to have clear conversations about the problems will help us create better solutions.

Collaboration

Here is the GitHub Repo for ATHI, where the community can add Actors, Techniques, Harms, and Impacts.

Notes

- Thanks to Joseph Thacker, Alexander Romero, and Saul Varish for feedback on the system.