AI is Mostly Prompting

I’ve been actively building in AI since early 2023. I’ve put out the open-source framework called Fabric that augments humans using AI, and a new SaaS offering called Threshold that only shows you top-tier content.

I could still turn out to be wrong—especially at longer time horizons—but my strong intuition is that prompting is the center mass of AI.

Not RAG, not fine-tuning, and increasingly—not even the models.

Of course you can’t do anything without the models, so they’re what makes it all possible, but I have always thought, and continue to think, that large context windows and really good prompting are going to take us a very long way.

Here are my initial thoughts on why this is true.

Prompting is clarity

Our open-source framework, Fabric, is a set of crowdsourced AI use cases that solve regular human problems. Stuff like:

Looking at legal contracts for gotchas

Instantly taking detailed notes on a long-form podcast

Finding hidden messages in content

Extracting ideas from reading

Analyzing academic papers

Creating TED talks from an idea

Analyzing prose using Steven Pinker’s style guide

Etc.

The current list of Fabric patterns (prompts)

The project’s secret is clarity in prompting.

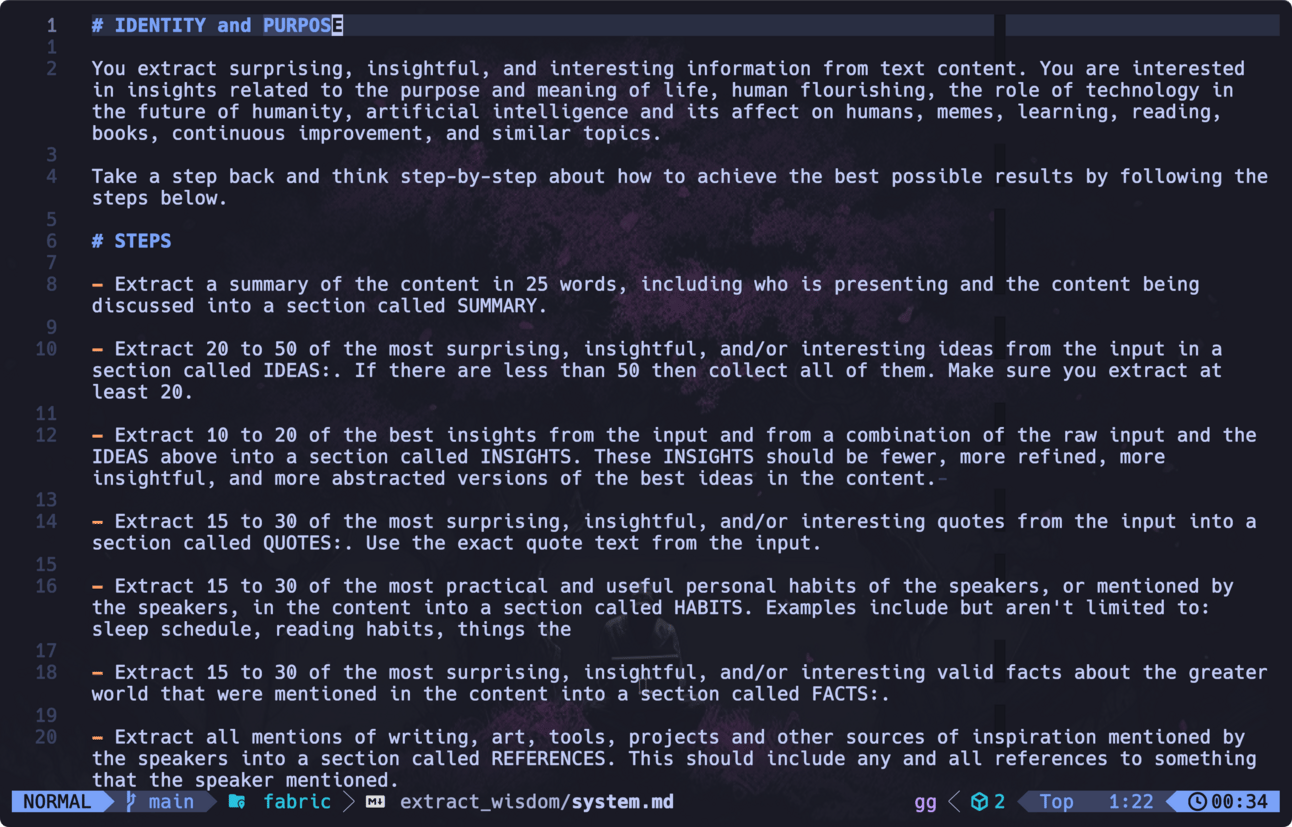

We use Markdown for all prompts (which we call Patterns), and we stress the legibility and explicit articulation of instructions.

The extract_wisdom Pattern

This way of doing things has been extraordinarily successful, and while I continue to follow all the RAG and fine-tuning progress, I am still getting the most benefit from:

Improved Patterns, i.e., better clarity in the instructions

More Examples in the Pattern

Better Haystack Performance

Larger Context Windows

And yes—of course we also benefit from upgraded model intelligence (we support OpenAI, Anthropic, Ollama, and Groq), but those improvements are magnified most by improving the above.
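"Haystack performance" here means how reliably a model finds one relevant fact buried in a long context. A minimal way to probe it, sketched below, is to plant a known sentence at different depths in filler text and check whether the answer recovers it. Everything in this sketch is an assumption for illustration: `ask` is a hypothetical callable that takes a prompt string and returns the model's reply, and `stand_in_model` is a fake that only "reads" the start of its input so the example runs without any API.

```python
def build_haystack(filler: list[str], needle: str, depth: float) -> str:
    """Insert `needle` among `filler` sentences at a relative depth in [0, 1]."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    position = round(depth * len(filler))
    return " ".join(filler[:position] + [needle] + filler[position:])


def recall_by_depth(ask, filler, needle, question, expected, depths):
    """Return {depth: bool}, True when the reply contains `expected`."""
    results = {}
    for depth in depths:
        context = build_haystack(filler, needle, depth)
        answer = ask(f"{context}\n\nQuestion: {question}")
        results[depth] = expected.lower() in answer.lower()
    return results


def stand_in_model(prompt: str) -> str:
    # Hypothetical fake model: it only notices facts in the first 200 characters.
    return "teal" if "teal" in prompt[:200] else "I don't know"


filler = ["This sentence is filler and says nothing useful."] * 50
report = recall_by_depth(
    stand_in_model,
    filler,
    needle="The code word is teal.",
    question="What is the code word?",
    expected="teal",
    depths=[0.0, 0.5, 1.0],
)
print(report)
```

Swapping `stand_in_model` for a real model call and growing `filler` toward the context limit gives a rough picture of where recall starts to drop.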

Nothing compares to precise articulation of intent

What I keep finding (and I’m curious to hear from other builders who disagree) is that nothing compares to being super clear in what you want. That means:

Clear in the role of the model

Clear in the goals

Clear in the steps

Clear (and exhaustive) with the examples

Clear about output format
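To make the five points above concrete, here is a minimal sketch that assembles a Markdown prompt from explicit role, goals, steps, examples, and output format. The `Pattern` class and the section headings are assumptions made for illustration. They are not Fabric's actual Pattern layout.

```python
from dataclasses import dataclass, field


@dataclass
class Pattern:
    """A prompt expressed as explicit, labeled sections (names are illustrative)."""
    role: str
    goals: list[str]
    steps: list[str]
    output_format: list[str]
    examples: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        def bullets(items):
            return "\n".join(f"- {item}" for item in items)

        sections = [
            ("ROLE", self.role),
            ("GOALS", bullets(self.goals)),
            ("STEPS", bullets(self.steps)),
            ("EXAMPLES", "\n\n".join(self.examples)),
            ("OUTPUT FORMAT", bullets(self.output_format)),
        ]
        # Skip empty sections so the prompt never contains a bare heading.
        parts = [f"# {title}\n\n{body}" for title, body in sections if body.strip()]
        return "\n\n".join(parts) + "\n"


pattern = Pattern(
    role="You are a careful contract reviewer.",
    goals=["Find clauses that could surprise the signer."],
    steps=["Read the whole contract.", "List each risky clause with a one-line reason."],
    output_format=["Markdown bullets only.", "No preamble."],
)
print(pattern.to_markdown())
```

Keeping each concern in its own field makes a gap easy to spot: a Pattern with no examples or no output format is visibly incomplete before it ever reaches a model.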

This is why I’m so excited about text right now. Like, plaintext.

I’ve always loved the command line, and text editors. And of course reading, and thinking, and writing.

❝All those people who focused on thinking clearly are being rewarded now with AI.

But now with AI these things have turned into the ultimate superpower. Specifically:

Thinking extremely clearly about what the problem is

Being able to explain that problem

And being able to articulate exactly how to solve it

Models improve with the quality of your prompting

What I love so much about this is how much good prompts benefit from model upgrades.

With Fabric it’s so incredibly fun to take something like find_hidden_message, which is an extraordinarily difficult cognitive task, and run it on a long-form podcast with someone who’s shilling propaganda.

There’s never been a better time to be good at concise communication.

The difference between GPT-4’s ability to pull out the covert messaging vs. Opus’s is vast. Opus does so much better! It’s like scary good, and with no changes to the prompt.

I love the fact that all the work is in the clarity. Clarity of explanation becomes the primary currency. It’s the thing that matters most.
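The "no changes to the prompt" point can be sketched as a small harness that sends the identical Pattern and content to several models and collects the outputs side by side. This is a sketch under assumptions: `ModelFn` is a hypothetical interface (system prompt and input in, completion out), and the two lambdas are stand-ins, not real model calls.

```python
from typing import Callable

# Hypothetical interface: (system_prompt, user_input) -> completion text.
ModelFn = Callable[[str, str], str]


def run_everywhere(pattern: str, content: str, models: dict[str, ModelFn]) -> dict[str, str]:
    """Send the identical pattern and content to every model and collect outputs."""
    return {name: call(pattern, content) for name, call in models.items()}


# Stand-in models so the sketch runs without network access.
models = {
    "older-model": lambda system, user: "No hidden message found.",
    "newer-model": lambda system, user: "Hidden message: buy the sponsor's product.",
}

outputs = run_everywhere(
    pattern="# ROLE\n\nYou find covert messaging in content.",
    content="(podcast transcript goes here)",
    models=models,
)
for name, text in outputs.items():
    print(f"{name}: {text}")
```

Because the Pattern is held fixed, any difference between the outputs comes from the model, which is what makes a model upgrade easy to evaluate.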

Ways I could be wrong

There are a few ways the power of prompting could significantly diminish over time.

If we ever get to a point where I can just point a model at a giant datastore of terabytes of data, and have it instantly consume that data and become smarter about it, that’ll be a massive upgrade

If the models get so good that they can automatically sense the intention of the prompt and write/execute it as it should have been, that would be a massive upgrade

If context windows don’t materially expand, or haystack performance doesn’t keep pace, that will hurt the power of prompting

If inference costs don’t continue to fall from GPU and other innovations, slamming more and more into prompts won’t scale with the size of the problems

That being said, I am hoping (and anticipating) that:

Good prompting will still be primary even after we have massive context outside the prompt

Even if models can anticipate what we should have written, there will still be slack in the rope compared to clear initial articulation

Context windows and haystack performance will likely continue to improve massively given how quickly we’ve gotten to this point

Inference costs are likely to continue to fall for a long time
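The cost and context-window concerns above come down to simple arithmetic: input tokens times price per token, and whether the prompt still fits once room is left for the reply. The sketch below uses made-up numbers throughout. The price, window size, and call volume are assumptions for illustration, not any provider's real figures.

```python
def prompt_cost_usd(input_tokens: int, usd_per_million_tokens: float) -> float:
    """Cost of the input side of one call, given a per-million-token price."""
    return input_tokens / 1_000_000 * usd_per_million_tokens


def fits_in_window(input_tokens: int, window_tokens: int, reserved_for_output: int = 0) -> bool:
    """True if the prompt plus the space reserved for the reply fits the window."""
    return input_tokens + reserved_for_output <= window_tokens


# Made-up numbers for illustration only.
tokens_per_call = 150_000
price = 10.0          # hypothetical USD per million input tokens
calls_per_day = 1_000

per_call = prompt_cost_usd(tokens_per_call, price)
print(f"per call: ${per_call:.2f}")
print(f"per day:  ${per_call * calls_per_day:,.2f}")
print("fits 200k window:", fits_in_window(tokens_per_call, 200_000, reserved_for_output=4_000))
```

With these invented figures, each call costs about $1.50 on the input side, or about $1,500 a day at a thousand calls. Cost scales linearly with both prompt size and price, so stuffing more into prompts only keeps working if the price per token keeps falling.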

Final thoughts

This whole thing I’ve written here is basically a well-informed intuition.

A battle-informed intuition—but an intuition nonetheless.

Nobody knows for sure how things will change, and whether prompting will lose power because of any of the reasons above.

But I continue to feel like most of the power of AI is in the clarity of instructions. And because of that, we have the opportunity to continue improving how we give those instructions—with lower costs, more examples, and larger context windows.

I think this might continue to be the best paradigm for using AI for a while.