OpenAI's November 23' Releases Are a Watershed Moment for Human Creativity—and Prompt Injection

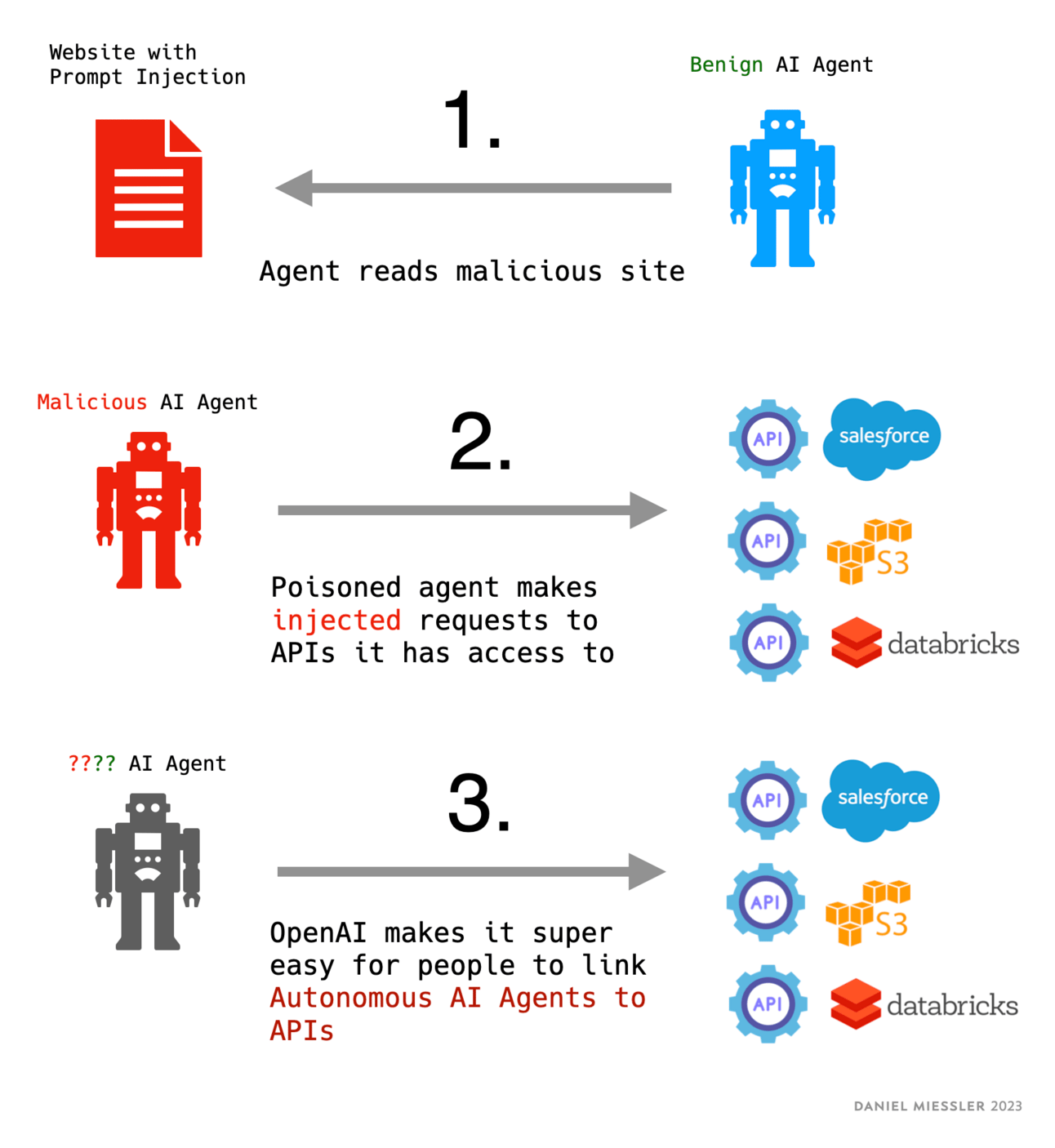

AI Agents + API Access + Prompt Injection

So I want to talk real quick about the recent announcements > from OpenAI.

Without hyperbole, I think what they announced represents both the greatest boon for business and the biggest problem for security that we’ve seen injected in a single day in many decades.

There were many announcements, and many of them—such as model updates—are wonderful but relatively inert. But what is not without implication is the unbelievably massive expansion of API calling capabilities. On this front, they announced two main things:

Custom GPTs

Assistants

Custom GPT’s are basically a front-end version of assistants. And importantly, they both have the same functionality of being able to call Code Interpreter, browse the web, and call arbitrary APIs.

Let me say that again–they can call any API.

I’ve been saying for a long time that the #1 threat AI security, from a cyber security standpoint, is AI agents having the ability to call APIs.

What they did yesterday was open that up to the entire world.

I just saw an interview with the head of API’s at Zapier, and they are now fully integrated with the new Assistant API, so everything that you can do in Zapier you can now do inside of an assistant.

And just to refresh everyone, you can basically do ANYTHING in Zapier.

Again, just to be clear, this is extraordinarily awesome for humanity, and for business, and for the economy, and for developers, and for so many people going forward. It was an amazing conference and a fantastic set of announcements.

But for us in security, we better get ready.

The amount of prompt injection we’re about to see propagate across the Internet is going to be staggering.

We are talking about injections on websites being crawled automatically by agents, consumed by the agents, executed by the agents, sent onto other APIs, which then connect to other APIs, which ultimately land on sensitive data back ends.

The possibilities for attack just became endless. And again, I’m not saying they shouldn’t have done it. I’m not saying this is bad.

I’m just saying as security people, get ready.

We're entering a world where everything is about to be parsed by AI Agents that have code execution and action-taking capabilities, and the implications are going to be massive.