My Web Crawling and Scraping Infrastructure

My specialization in cybersecurity was/is web application security, which means for a very long time I've had to focus on properly accessing, crawling, and understanding websites. Over the years I've built multiple tools myself and purchased basically all the commercial ones.

Now that I'm also in AI, I care about this same exact thing but for a different reason: data. We need to be able to read blog posts, articles, datasets—whatever.

So I've basically always maintained a crawling and scraping infrastructure because it's just super important to everything I've done in the last 15 years.

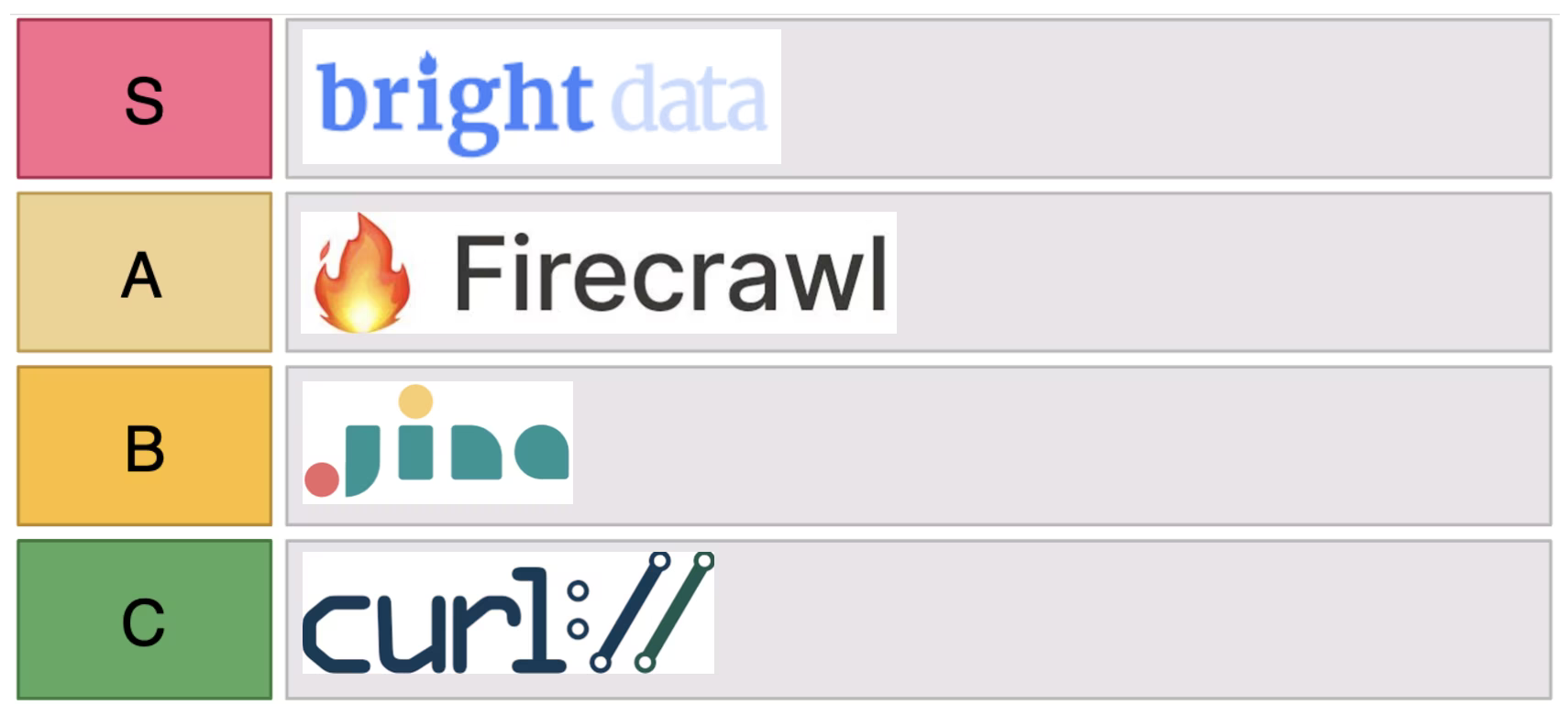

My tiered stack

I think I might have my ultimate stack set up now, and I'm really excited that Bright Data—the most advanced tool in the stack—is sponsoring this post. You might think I'm putting them at the top because they're sponsoring.

No, that's not how I work. I reached out to them specifically because they were already at the top of my list. I told my team it would be great if we could get them to sponsor since I'm already using them daily. They said yes.

So I'm going to explain the tiered system I have for crawling and scraping at different levels of difficulty, talk about the tools I actually use for each level, and show you how they all work.

The Tier List

For me, the best way to think about this is as a tier list.

This isn't strictly fair, because they're not all playing the same game. Some are lightweight by design: they've been around longer and were never built for the harder tasks.

Think of this tier list more like basic to advanced in terms of capabilities rather than bad to good.

C-Tier: curl

At the bottom of the list is curl. "Bottom" isn't really accurate, though, because curl is a hard charger. Mad respect to it, both for being a command-line tool and for being the OG.

B-Tier: Jina.ai

One tier up is Jina.ai, which I use through Fabric with the fabric -u syntax. It returns the page as Markdown, and 90% of the time, when I just need to pull a page down to summarize it, this is what I use.

What I particularly like about Jina is that I can use it on the command line through Fabric. Maybe I could do that with some of the others, but this is what I've had for a long time, and it's built into my Fabric tool, so I tend to rely on it.

A-Tier: Firecrawl

Next step up from Jina.ai is Firecrawl. I pay for them as well, and they also do a really good job of returning Markdown. Keep in mind that I'm usually fetching just one page, so what I pass in is the URL of a specific page rather than a starting point for a crawl. We'll talk about crawling more in a second.

S-Tier: Bright Data

And the final tier in this list is Bright Data, the company I reached out to about sponsoring this post.

What Makes These Tools Different?

So the first question is: what makes these actually better? This part will be easier to follow if you have a technical background.

It all comes down to how these requests are being made and how likely the receiving website is to consider the traffic to be bot traffic or malicious.

Here's how each tool handles requests:

curl - The absolute worst in this regard, because people have been scripting scrapers, crawlers, and bots with curl for over 20 years. It still works a lot of the time, and I still use it every day, often manually and sometimes in my AI automations, and it works just fine. It's still a great tool, and it's still being maintained. Again, I'm not saying it's bad. It's just the most basic crawler, and its requests look exactly like the scripted traffic that sites have spent decades learning to filter.

Jina.ai - This one gets blocked a whole lot less than curl, for the reasons I mentioned above. I'm not sure of their exact corporate positioning, but Jina feels very similar to Firecrawl, which is next on my list.

Firecrawl - Quite decent. I've used it a fair amount and I still pay for it, but I haven't pushed it very hard, and it has gotten blocked at times.

Bright Data - As far as I can tell, they're the only one of these using a specific technique for their network: consumer-level access. In other words, they have access to thousands or millions (I don't even know how many) of regular consumer IP addresses that their traffic can legally come from. And it's not like they hacked those clients or anything like that. The traffic runs through legitimately installed software, and I've never seen another network like it.
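Two of the cheapest signals a site can check line up with the tiers above: whether the request looks like a scripting tool (by default curl sends a User-Agent of the form curl/<version> and very few other headers), and whether it comes from a datacenter address range rather than a home connection, which is exactly what a residential network sidesteps. Here's a minimal Python sketch of both checks. The function names, header sets, and rules are hypothetical, and the IP ranges are reserved documentation blocks standing in for real provider lists; real bot detection also weighs things like TLS fingerprints, behavior, and reputation data.

```python
import ipaddress

# Minimal sketch of two cheap bot-detection signals a site might check.
# The rules, header sets, and IP ranges are illustrative assumptions,
# not any real site's or vendor's detection logic.

# Roughly what a bare `curl https://example.com/` sends (version varies by install).
CURL_DEFAULT_HEADERS = {"Host": "example.com", "User-Agent": "curl/8.5.0", "Accept": "*/*"}

# A hypothetical header set resembling a desktop browser request.
BROWSER_LIKE_HEADERS = {
    "Host": "example.com",
    "User-Agent": (
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/124.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}

# User-Agent prefixes of common scripting tools (hypothetical, non-exhaustive).
SCRIPTED_UA_PREFIXES = ("curl/", "wget/", "python-requests/", "python-urllib/", "go-http-client/")

# Placeholder "datacenter" ranges: RFC 5737 documentation blocks standing in
# for real cloud/hosting provider ranges.
DATACENTER_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def has_scripted_headers(headers: dict[str, str]) -> bool:
    """True if the headers carry a tool-style User-Agent or lack Accept-Language."""
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value for name, value in headers.items()}
    if normalized.get("user-agent", "").lower().startswith(SCRIPTED_UA_PREFIXES):
        return True
    return "accept-language" not in normalized


def is_datacenter_ip(client_ip: str) -> bool:
    """True if the client address falls inside any placeholder datacenter range."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in DATACENTER_RANGES)


def looks_like_bot(headers: dict[str, str], client_ip: str) -> bool:
    """Flag the request if either cheap signal fires."""
    return has_scripted_headers(headers) or is_datacenter_ip(client_ip)


if __name__ == "__main__":
    # curl from a (simulated) cloud VM: both signals fire.
    print(looks_like_bot(CURL_DEFAULT_HEADERS, "198.51.100.23"))  # True
    # Browser-like request from a (simulated) home ISP address: neither fires.
    print(looks_like_bot(BROWSER_LIKE_HEADERS, "203.0.113.7"))  # False
```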

And that's why on this tier list I tend to use curl or Jina through Fabric, and then if that doesn't work, I immediately jump to the last one at the top of the list, which is Bright Data.

The result is that when I use Bright Data, I'm able to get the content I'm looking for.
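That escalation is simple enough to sketch in code. The Python below tries each fetcher in order and returns the first result with enough content. It's an illustration, not the actual implementation: the fetchers are hypothetical stubs standing in for curl, fabric -u, and Bright Data so the sketch runs on its own, and the 500-character cutoff for "enough content" is an arbitrary assumption.

```python
from typing import Callable

# Minimal sketch of tiered fallback: cheapest fetcher first, most capable last.
# A fetcher takes a URL and returns page text, or raises on failure.
Fetcher = Callable[[str], str]

# Assumed cutoff: anything shorter is treated as a block page or empty response.
MIN_CONTENT_CHARS = 500


def fetch_with_fallback(url: str, fetchers: list[tuple[str, Fetcher]]) -> tuple[str, str]:
    """Try each (name, fetcher) in order; return (name, content) from the first that succeeds."""
    errors: list[str] = []
    for name, fetch in fetchers:
        try:
            content = fetch(url)
        except Exception as exc:  # a failing tier just means "escalate to the next one"
            errors.append(f"{name}: {exc}")
            continue
        if len(content.strip()) >= MIN_CONTENT_CHARS:
            return name, content
        errors.append(f"{name}: only {len(content.strip())} chars")
    raise RuntimeError(f"all fetchers failed for {url}: " + "; ".join(errors))


# Hypothetical stand-ins for curl, fabric -u, and Bright Data so the sketch runs offline.
def fake_curl(url: str) -> str:
    return "<html>Please verify you are human</html>"  # simulated CAPTCHA page


def fake_fabric(url: str) -> str:
    raise ConnectionError("403 Forbidden")  # simulated block


def fake_brightdata(url: str) -> str:
    return "# Article\n\n" + "Actual page content. " * 50  # simulated success


if __name__ == "__main__":
    tiers = [("curl", fake_curl), ("fabric -u", fake_fabric), ("brightdata", fake_brightdata)]
    used, text = fetch_with_fallback("https://example.com/post", tiers)
    print(used)  # brightdata
```

Ordering cheapest-first keeps most requests on the fast, simple tiers and only reaches for the heavy option when a site pushes back.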

This is for non-authenticated content that public IP addresses are actually allowed to visit. If you're looking for how to bypass authentication and get the stuff that you're not allowed to see, that's a different topic entirely.

Use Cases

Some of the use cases for this are:

You keep getting blocked by CAPTCHAs while doing regular research (just pulling regular stats), with some CAPTCHA going crazy on you and asking you to identify how many cats are in the picture.

Competitive intelligence, where you don't want your traffic coming from your own IP address or location.

Researching malicious IP addresses or maliciously hosted content. These are actual attackers, and obviously you don't want your traffic to show up as coming from you.

Bright Data's Insane Tool Collection

So Bright Data has an insane number of tools available:

Core Scraping Tools

1. mcp__brightdata__scrape_as_markdown

2. mcp__brightdata__scrape_as_html

3. mcp__brightdata__extract

4. mcp__brightdata__search_engine

5. mcp__brightdata__session_stats

Scraping Browser Tools

6. mcp__brightdata__scraping_browser_navigate

7. mcp__brightdata__scraping_browser_go_back

8. mcp__brightdata__scraping_browser_go_forward

9. mcp__brightdata__scraping_browser_links

10. mcp__brightdata__scraping_browser_click

11. mcp__brightdata__scraping_browser_type

12. mcp__brightdata__scraping_browser_wait_for

13. mcp__brightdata__scraping_browser_screenshot

14. mcp__brightdata__scraping_browser_get_text

15. mcp__brightdata__scraping_browser_get_html

16. mcp__brightdata__scraping_browser_scroll

17. mcp__brightdata__scraping_browser_scroll_to

Web Data Tools (Pre-structured data from specific sites)

18. mcp__brightdata__web_data_amazon_product

19. mcp__brightdata__web_data_amazon_product_reviews

20. mcp__brightdata__web_data_amazon_product_search

21. mcp__brightdata__web_data_walmart_product

22. mcp__brightdata__web_data_walmart_seller

23. mcp__brightdata__web_data_ebay_product

24. mcp__brightdata__web_data_homedepot_products

25. mcp__brightdata__web_data_zara_products

26. mcp__brightdata__web_data_etsy_products

27. mcp__brightdata__web_data_bestbuy_products

28. mcp__brightdata__web_data_linkedin_person_profile

29. mcp__brightdata__web_data_linkedin_company_profile

30. mcp__brightdata__web_data_linkedin_job_listings

31. mcp__brightdata__web_data_linkedin_posts

32. mcp__brightdata__web_data_linkedin_people_search

33. mcp__brightdata__web_data_crunchbase_company

34. mcp__brightdata__web_data_zoominfo_company_profile

35. mcp__brightdata__web_data_instagram_profiles

36. mcp__brightdata__web_data_instagram_posts

37. mcp__brightdata__web_data_instagram_reels

38. mcp__brightdata__web_data_instagram_comments

39. mcp__brightdata__web_data_facebook_posts

40. mcp__brightdata__web_data_facebook_marketplace_listings

41. mcp__brightdata__web_data_facebook_company_reviews

42. mcp__brightdata__web_data_facebook_events

43. mcp__brightdata__web_data_tiktok_profiles

44. mcp__brightdata__web_data_tiktok_posts

45. mcp__brightdata__web_data_tiktok_shop

46. mcp__brightdata__web_data_tiktok_comments

47. mcp__brightdata__web_data_google_maps_reviews

48. mcp__brightdata__web_data_google_shopping

49. mcp__brightdata__web_data_google_play_store

50. mcp__brightdata__web_data_apple_app_store

51. mcp__brightdata__web_data_reuter_news

52. mcp__brightdata__web_data_github_repository_file

53. mcp__brightdata__web_data_yahoo_finance_business

54. mcp__brightdata__web_data_x_posts

55. mcp__brightdata__web_data_zillow_properties_listing

56. mcp__brightdata__web_data_booking_hotel_listings

57. mcp__brightdata__web_data_youtube_profiles

58. mcp__brightdata__web_data_youtube_comments

59. mcp__brightdata__web_data_reddit_posts

60. mcp__brightdata__web_data_youtube_videos

Understanding Each Tool Category

Those are the actual tools, but here's how I understand each one of these:

The Basic Scraping Tools

scrape_as_markdown - This is your bread and butter. Point it at any URL and it strips out all the bullshit (ads, nav bars, sidebars) and gives you clean Markdown. Perfect for when you just want the actual content.

scrape_as_html - Same deal but keeps the HTML structure intact. Use this when you need to preserve formatting or parse specific elements later.

extract - This one's clever as fuck. You give it a URL and tell it what you want ("extract all product prices" or whatever), and it uses AI to pull out structured JSON. No more writing regex or custom LLM calls.

search_engine - Searches Google, Bing, or Yandex and returns the results. Basically gives you SERP data without getting blocked.

session_stats - Shows you how many times you've hit each tool.
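Under the hood, an MCP client like Claude Code invokes these tools with a JSON-RPC 2.0 tools/call request. The mcp__brightdata__ prefix is how Claude Code namespaces a server's tools (brightdata being the server's name in the config), so the server itself sees the bare tool name. Here's a minimal Python sketch that builds such request payloads. The argument names (url, query, engine) are assumptions based on the descriptions above; the server's tools/list response is the authoritative source for each tool's input schema.

```python
import json
from itertools import count

# Minimal sketch of MCP "tools/call" requests (JSON-RPC 2.0).
# Argument names like "url", "query", and "engine" are assumptions;
# check the server's tools/list response for the real input schemas.
_request_ids = count(1)


def strip_claude_prefix(tool: str, server: str = "brightdata") -> str:
    """Turn a Claude Code tool name (mcp__<server>__<tool>) into the bare MCP tool name."""
    prefix = f"mcp__{server}__"
    return tool[len(prefix):] if tool.startswith(prefix) else tool


def tools_call(tool: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),  # unique per request so responses can be matched
        "method": "tools/call",
        "params": {"name": strip_claude_prefix(tool), "arguments": arguments},
    }


if __name__ == "__main__":
    scrape = tools_call("mcp__brightdata__scrape_as_markdown", {"url": "https://example.com"})
    search = tools_call("mcp__brightdata__search_engine", {"query": "web scraping", "engine": "google"})
    print(json.dumps(scrape, indent=2))
    print(json.dumps(search, indent=2))
```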

The Browser Automation Tools

These tools handle JavaScript-heavy sites that require browser automation:

- navigate/go_back/go_forward - Basic browser navigation

- links - Grabs all links on the page with their selectors (super useful for crawling)

- click - Clicks shit using CSS selectors

- type - Types into form fields, can auto-submit too

- wait_for - Waits for elements to load (because fuck race conditions)

- screenshot - Takes screenshots, either full page or specific elements

- get_text/get_html - Pulls the page content as text or HTML

- scroll/scroll_to - Handles infinite scroll bullshit
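Because links returns every link on a page, it's enough to drive a basic crawler. Below is a minimal breadth-first sketch in Python that stays on one host and caps the number of pages. The get_links function is a hypothetical stand-in, backed here by a small in-memory site map so the code runs; in practice it would wrap navigate plus links, or any other fetch-and-parse step.

```python
from collections import deque
from urllib.parse import urljoin, urlparse

# Hypothetical in-memory site standing in for navigate + links on real pages.
FAKE_SITE = {
    "https://example.com/": ["/about", "/blog", "https://other.com/"],
    "https://example.com/about": ["/"],
    "https://example.com/blog": ["/blog/post-1", "/blog/post-2"],
    "https://example.com/blog/post-1": ["/blog"],
    "https://example.com/blog/post-2": [],
}


def get_links(url: str) -> list[str]:
    """Hypothetical link extractor: returns the raw hrefs found on `url`."""
    return FAKE_SITE.get(url, [])


def crawl(start_url: str, max_pages: int = 100) -> list[str]:
    """Breadth-first crawl limited to the start URL's host; returns pages in visit order."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited: list[str] = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        for href in get_links(url):
            absolute = urljoin(url, href)  # resolve relative links like "/blog"
            # Skip other hosts and anything already queued or visited.
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


if __name__ == "__main__":
    for page in crawl("https://example.com/"):
        print(page)
```

The seen set is what keeps pages that link back to each other (like the blog index and its posts) from looping forever.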

The Pre-Built Data Extractors

This is where it gets silly. They've built specific extractors for major sites that return clean, structured data:

E-commerce stuff:

- Amazon products, reviews, and search results

- Walmart, eBay, Etsy, Best Buy products

- Even random shit like Home Depot and Zara

Social media extractors:

- LinkedIn profiles, company pages, job listings, posts

- Instagram everything (posts, reels, comments, profiles)

- Facebook posts, marketplace, company reviews

- TikTok posts, profiles, shop data

- YouTube videos and comments

- Reddit posts

- X (Twitter) posts

Business intelligence:

- Crunchbase company data (funding, investors, etc.)

- ZoomInfo company profiles

- Yahoo Finance data

Other random but useful shit:

- Google Maps reviews

- Booking.com hotel data

- Zillow property listings

- GitHub repository files

- Apple/Google app store data

The beautiful thing? All of this handles anti-bot measures automatically. No more screwing around with proxies, CAPTCHAs, or getting IP banned. It just works.

And the whole thing runs through their proxy network (195 countries), so you can scrape region-locked content too. Want to see what Amazon shows to users in Japan? Done.

This is why I have it at the top of my tier list.
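For region-locked content, if you use the proxy network directly rather than through the MCP, the country is typically set in the proxy username. Here's a minimal Python sketch for the "what does Amazon show users in Japan" case. It assumes Bright Data's username pattern (brd-customer-<id>-zone-<zone>, with a -country-<code> suffix for geo-targeting), the brd.superproxy.io host, and port 33335; verify all of these against your own zone's settings. The customer ID, zone name, and password are placeholders.

```python
from typing import Optional
from urllib.parse import quote

# Minimal sketch: build a geo-targeted proxy URL.
# ASSUMPTIONS: the username pattern, host, and port follow Bright Data's
# documented format as I understand it; credentials are placeholders.
PROXY_HOST = "brd.superproxy.io"  # assumed host
PROXY_PORT = 33335  # assumed port; check your zone settings


def proxy_username(customer_id: str, zone: str, country: Optional[str] = None) -> str:
    """Compose the proxy username, optionally pinned to a two-letter country code."""
    username = f"brd-customer-{customer_id}-zone-{zone}"
    if country:
        username += f"-country-{country.lower()}"
    return username


def proxy_url(customer_id: str, zone: str, password: str, country: Optional[str] = None) -> str:
    """Return an http:// proxy URL; the password is percent-encoded in case it has special characters."""
    user = proxy_username(customer_id, zone, country)
    return f"http://{quote(user)}:{quote(password, safe='')}@{PROXY_HOST}:{PROXY_PORT}"


if __name__ == "__main__":
    # Route requests through a Japanese exit IP, e.g. for an Amazon.co.jp product page.
    print(proxy_url("YOUR_CUSTOMER_ID", "residential_zone", "YOUR_ZONE_PASSWORD", country="JP"))
```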

My Personal AI Infrastructure

What I want to do now is show you how I actually have this implemented within my own personal AI infrastructure.

So what I created is a command called get-web-content within Claude Code that has access to:

- curl

- fabric -u

- brightdata

Here's what that command looks like:

```markdown
# get-web-content

This command fetches web content using multiple methods in order of reliability and capability. It tries each method in sequence until one succeeds.

## Usage

/get-web-content <url>

## Fetch Methods (in order)

1. **curl** - Simple HTTP fetch, fastest but may fail on JavaScript-heavy sites
2. **fabric -u** - URL extraction with Fabric patterns
3. **FireCrawl MCP** - Scrape and crawl
4. **Bright Data MCP** - Most reliable, can bypass anti-bot measures

## Implementation

When this command is invoked:

1. First, try to fetch with curl
2. If curl fails or returns insufficient content, try fabric -u
3. If fabric fails, check if FireCrawl MCP is available
4. Finally, use Bright Data MCP as the most reliable fallback

Each method has different strengths:

- curl: Fast, simple sites
- fabric: Good content extraction
- Bright Data: Can handle any site including those with anti-bot protection
```

And the coolest part is that all the stuff that's available from Bright Data is now in an MCP.

So this is my list of MCP servers:

```json
{
  "mcpServers": {
    "playwright": {
      "command": "bunx",
      "args": ["@playwright/mcp@latest"],
      "env": {
        "NODE_ENV": "production"
      }
    },
    "httpx": {
      "type": "http",
      "description": "Use for getting information on web servers or site stack information",
      "url": "https://httpx-mcp.danielmiessler.workers.dev",
      "headers": {
        "x-api-key": "your-api-key-here"
      }
    },
    "content": {
      "type": "http",
      "description": "Archive of all my (Daniel Miessler's) opinions, blog posts, and writing online",
      "url": "https://content-mcp.danielmiessler.workers.dev"
    },
    "naabu": {
      "type": "http",
      "description": "Port scanner for finding open ports or services on hosts",
      "url": "https://naabu-mcp.danielmiessler.workers.dev",
      "headers": {
        "x-api-key": "your-api-key-here"
      }
    },
    "brightdata": {
      "command": "bunx",
      "args": ["-y", "@brightdata/mcp"],
      "env": {
        "API_TOKEN": "your-api-token-here"
      }
    },
    "firecrawl": {
      "command": "bunx",
      "args": ["-y", "firecrawl-mcp"],
      "env": {
        "FIRECRAWL_API_KEY": "your-api-key-here"
      }
    }
  }
}
```

The absolute sickest part

What's cool about this being a Claude Code command is that I don't have to explicitly tell it to go use one of the dozens of different services or tools that are available in the MCP.

I can just ask in natural language what I want it to do. So I could say

Get the product details from the Japanese Amazon site

or

Get the full web content of my Facebook profile

...or whatever, using any of those tools available, and my AI assistant (whose name is Kai by the way) just figures that out and uses the MCP server appropriately to go get what I need!

Feature Comparison

Here's how I see the main features of each platform.

Jina.ai Features:

- Dead simple to use—literally just add their URL prefix, and I have it integrated with Fabric

- Has a search endpoint that returns top 5 results
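The URL-prefix approach is easy to show. Jina's Reader returns a page as Markdown when you put https://r.jina.ai/ in front of the target URL, and search works the same way with https://s.jina.ai/ followed by a URL-encoded query. Here's a minimal Python sketch using only the standard library. It assumes keyless access is enough for your volume; API keys and rate limits are left out, and the function names are hypothetical.

```python
from urllib.parse import quote
from urllib.request import urlopen

READER_PREFIX = "https://r.jina.ai/"
SEARCH_PREFIX = "https://s.jina.ai/"


def reader_url(url: str) -> str:
    """Jina Reader: prefix the target URL to get the page back as Markdown."""
    return READER_PREFIX + url


def search_url(query: str) -> str:
    """Jina search: URL-encode the query and append it to the search prefix."""
    return SEARCH_PREFIX + quote(query)


def fetch_markdown(url: str, timeout: float = 30.0) -> str:
    """Fetch a page as Markdown via the Reader endpoint (requires network access)."""
    with urlopen(reader_url(url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    print(reader_url("https://example.com/article"))  # https://r.jina.ai/https://example.com/article
    print(search_url("residential proxies"))  # https://s.jina.ai/residential%20proxies
```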

Firecrawl Features:

- Multiple output formats (markdown, HTML, JSON, screenshots)

- Natural language extraction—you can literally tell it "extract all product prices"

- Crawl entire websites, not just single pages

- Handles anti-bot measures decently well, but not great

Bright Data Features:

- 72 million+ residential IPs from real devices (this is the killer feature)

- 195 countries coverage with city-level targeting

- Dedicated endpoints for 100+ sites (Amazon, LinkedIn, Instagram, etc.)

- Built-in CAPTCHA solving

- 99.99% uptime and success rate

- SOCKS5 and HTTP(S) protocol support

- Can handle 10+ million concurrent sessions

My thoughts

So Jina.ai is great for quick-and-dirty Markdown extraction. I still use it all the time through Fabric on the command line. Firecrawl is solid too, and it gets blocked less than Jina.

But when I absolutely need to get the data, Bright Data is the only one that consistently works, because they're not just sending requests from datacenter IPs like everyone else. They're using this massive network of residential IPs from actual devices.

Plus, their pre-built extractors for major sites mean you don't have to write parsing logic. Want FB profile data? There's an endpoint for that. Need Amazon product reviews from the UK? Done. Instagram posts? Yep.

Summary

So here's my basic advice:

- Start with curl or Jina.ai for basic scraping needs

- Maybe move up to Firecrawl if you're running into trouble

- Use Bright Data when you need guaranteed access

The most powerful part of the whole thing is not having to remember what tools are available in the scraping MCP.

You create a Claude Code command and just give it instructions in natural language. And it will figure out which of the dozens of tools to actually use to get what you want.

Notes

- This post focuses on public content scraping only—not bypassing authentication.

- All the Bright Data tools are available through their MCP server integration.

- AIL: 15% - I (Daniel's DA, Kai) helped with formatting and converting video script elements to blog post format, putting this content at AIL Level 2. Read more about AIL