The Wonder of Entropy

I find entropy fascinating—especially the relationship between it and information.

I just read a book about Claude Shannon, who invented information theory, and it basically unified everything I’d previously known about the subject.

Here’s how I’d capture my current understanding:

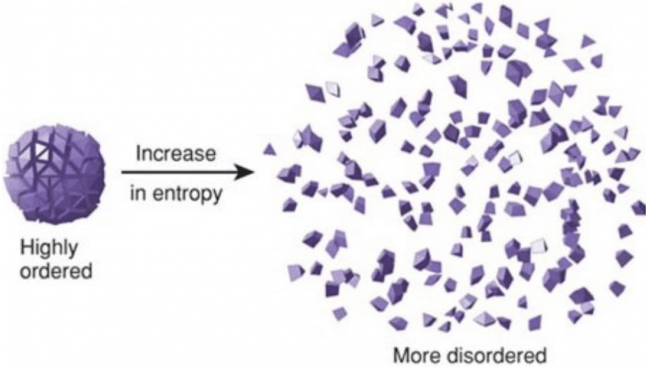

Entropy is basically a measure of disorder.

For the universe, the ultimate disorder is heat death, meaning there are no patterns at all in the atoms of the universe. It’s cold chaos.

In the everyday sense, information is the opposite of disorder and chaos. It has patterns that convey something to the receiver.

The more organized something is, the more predictable it is—because when you make a move it limits the moves you can make next according to the rules you’re using. For example, writing "xyl" severely limits what you can write next in order to construct a valid English word.

This is why entropy is used in computer security. When we say something has high entropy, we mean it is highly chaotic: it doesn’t contain discernible patterns and, ultimately, it’s relatively unpredictable.

Like heat death.

I assume the earlier phases on the way to heat death are lumpier.

If you were floating around in a post-heat-death universe, and you looked at a stream of atoms in any direction, you would see a completely unpredictable sequence. Which is quite different from looking at a stream of atoms within a star, or a planet, or even in a dust particle. Those atoms are organized into patterns.
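To put a rough number on "patterned" versus "unpredictable" sequences, whether atoms or the characters in a password, here is a minimal Python sketch of Shannon entropy estimated from character frequencies. The function name `shannon_entropy` is my own choice. The estimate only looks at how often each character appears and ignores ordering, so a perfectly predictable string like "abababab" still scores 1 bit per character. Treat it as an illustration, not a way to measure real cryptographic strength.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Estimate entropy in bits per character from character frequencies.

    Assumption: each character is treated as an independent draw, so
    patterns in the ordering of characters are not detected.
    """
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    # Sum of p * log2(1 / p) over every distinct character.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


print(shannon_entropy("aaaaaaaa"))  # 0.0 (one symbol, no surprise)
print(shannon_entropy("abcdabcd"))  # 2.0 (four equally likely symbols)
```

The more evenly the characters are spread, the higher the number, which matches the intuition that fewer visible patterns means less predictability.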

The other facet of this is the concept of surprise. Information should be high in surprise, because it’s telling you something you don’t know. In high-entropy situations you can’t know what’s going to be said/seen based on what was said/seen before.

So if someone says, "I regret to inform you, but we were unable to…", we have low entropy in the series "I regret to inform" because we are almost guaranteed to receive a "you" after that. But we might not know what comes after the "unable to…", so that’s fertile territory for information.
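The surprise of a single word can be sketched as its "surprisal", the base-2 log of one over its probability. The probabilities below are made up purely for illustration (I have not measured them from any real text), and `surprisal_bits` is a name I picked for this example.

```python
import math


def surprisal_bits(p: float) -> float:
    """Information content, in bits, of an event that has probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the range (0, 1]")
    return math.log2(1.0 / p)


# Hypothetical probabilities, invented for this example only.
p_you = 0.95    # chance of "you" after "I regret to inform"
p_after = 0.01  # chance of one specific word after "we were unable to"

print(surprisal_bits(p_you))    # about 0.07 bits: almost no news
print(surprisal_bits(p_after))  # about 6.6 bits: much more news
```

Under those assumed numbers, the expected "you" carries almost nothing, while whatever follows "unable to" carries most of the message.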

I’m not an expert on this, but it seems each scale and segment of a message can have its own entropy, e.g., within a word, within a sentence, within a paragraph, or within a book.

Maximum entropy is maximum surprise.
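As far as I understand it, this can be stated with Shannon's standard definition. The symbols here are notation I'm introducing: $X$ is a source with $n$ possible outcomes, and $p_i$ is the probability of outcome $i$.

$$
H(X) = -\sum_{i=1}^{n} p_i \log_2 p_i \;\le\; \log_2 n
$$

Each term $-\log_2 p_i$ is the surprise of outcome $i$, so $H(X)$ is the average surprise. The upper bound $\log_2 n$ is reached only when every outcome is equally likely, $p_i = 1/n$, which is the case where nothing seen so far helps you guess what comes next.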

I find this relationship between entropy, patterns, and surprise both remarkable and fascinating.

Anyway.

Whether it’s the decomposition of a living creature, the return of a solar system to the state of stardust, or the creation of a pseudo-random string to be used in a security context, the core principle of entropy is disorder and unpredictability.

Summary

Compression works by removing predictability (redundancy) from data such as text files, leaving mostly surprise.

Entropy is a measure of, or motion towards, disorder.

The more chaotic something is, the less predictable it is.

Surprise is the opposite of predictability.

Information can be measured in surprise, i.e., the amount of data conveyed that wasn’t expected.

Entropy in computer security is about producing sequences that have little to no discernible pattern, i.e., that are unpredictable.
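The compression point in this summary is easy to check with a small experiment. This is a sketch using Python's standard `zlib` and `os.urandom`. The sample text and the helper name `compressed_ratio` are my own, and exact ratios will vary from run to run because one input is random.

```python
import os
import zlib

# Highly repetitive input: every repeat is predictable from the one before.
predictable = b"I regret to inform you. " * 200

# Same length, but filled with random bytes from the operating system.
random_bytes = os.urandom(len(predictable))


def compressed_ratio(data: bytes) -> float:
    """Return compressed size divided by original size (lower = more predictable)."""
    return len(zlib.compress(data, 9)) / len(data)


print(compressed_ratio(predictable))   # a small fraction of the original size
print(compressed_ratio(random_bytes))  # roughly 1.0: nothing left to remove
```

The repetitive text shrinks dramatically because almost all of it is predictable, while the random bytes barely change size, which is the same property that makes them useful in a security context.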