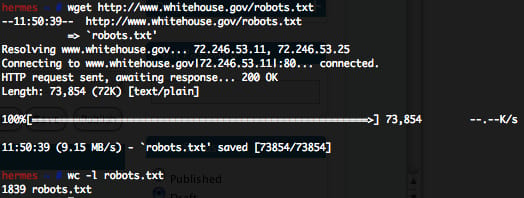

The Whitehouse.gov Website’s Robots.txt File Has 1839 Lines In It

1839?

I’m sure they’re not trying to hide anything, as doing so with a robots.txt file would be asinine, but 1839 entries is just insane. At some point you have to start wondering whether the content should be online at all if you don’t want it indexed.

I wonder if they’re using any of the directories as honeydirs (a term I just made up). The idea being that you would have no links to a given directory, tell people in the robots.txt file absolutely NOT to go there, and then sit and log people who show up. It’d be easy to do with Apache’s access log:

grep "$bad_directory" access_log | cut -d' ' -f1 | sort -u

...and there’s your list of "curious" people.
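If you want hit counts per visitor rather than a bare list, here is a rough sketch of the same idea. It assumes the log is in Apache's common or combined format, where the client address is the first whitespace-separated field and the request path is the seventh. The function name `honeydir_visitors` and the `/secret-stuff/` path are made up for illustration.

```shell
# Hypothetical helper: list clients that requested anything under a honeydir.
#   $1: the directory you listed under Disallow (e.g. /secret-stuff/)
#   $2: path to an Apache access log in common/combined format (assumed)
honeydir_visitors() {
    # Field 1 is the client address, field 7 is the requested path.
    # index(...) == 1 keeps only paths that START with the honeydir,
    # so a referrer or query string mentioning it elsewhere is ignored.
    awk -v dir="$1" 'index($7, dir) == 1 { hits[$1]++ }
        END { for (ip in hits) print hits[ip], ip }' "$2" |
        sort -k1,1nr -k2,2   # most persistent visitors first
}
```

You would call it as something like `honeydir_visitors /secret-stuff/ access_log`, and each output line is a hit count followed by the client address. Matching on the path field rather than grepping the whole line should cut down on false positives, though it will miss anything logged in a custom format.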

Probably not worth it for this kind of site, but an interesting thought. In fact, I’m going to implement this tonight for my site.