The Definition of a Green Team

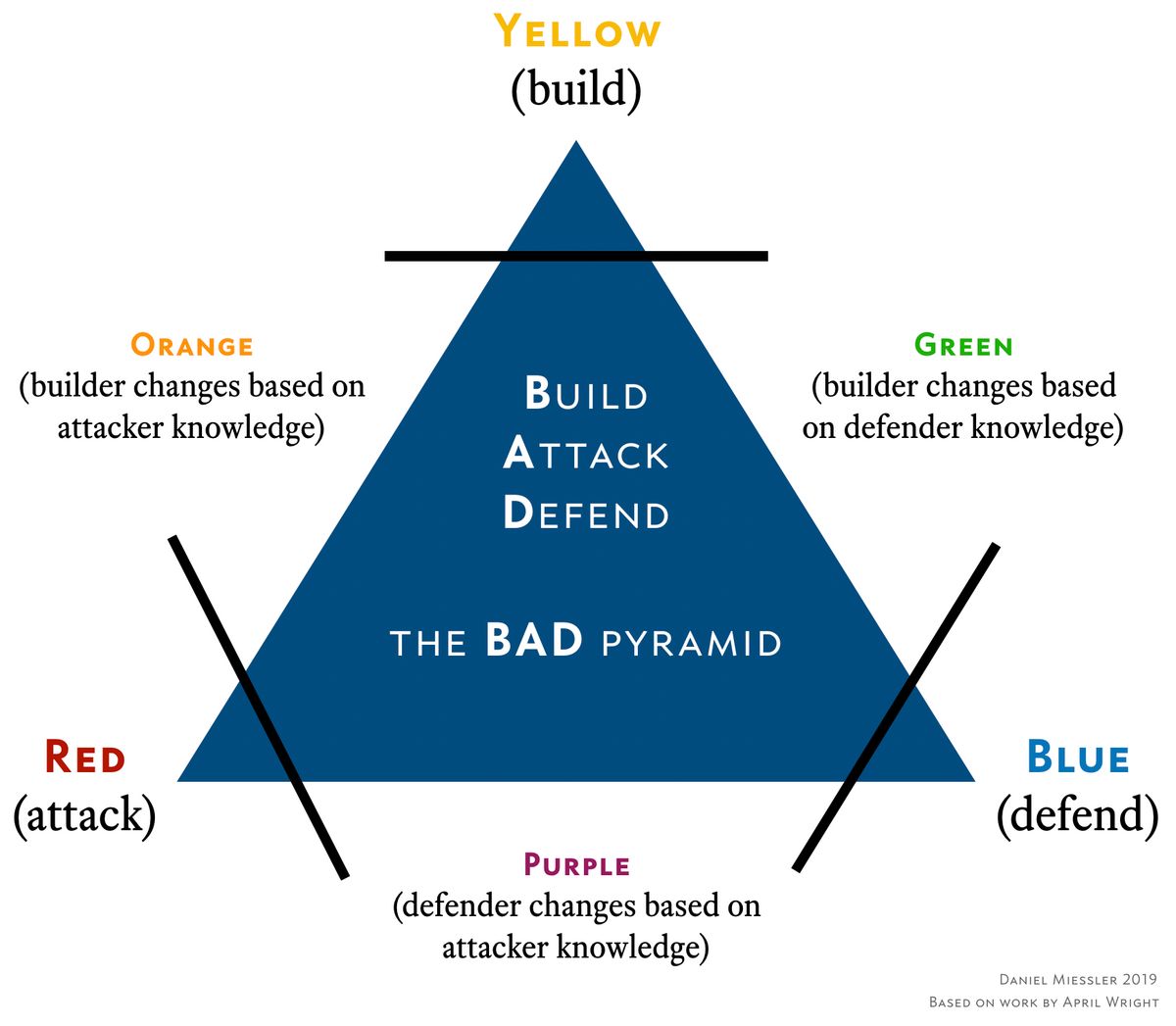

As I discuss in my article on Red, Blue, and Purple Teams, many people are advocating for team types beyond just those colors.

See my article on the different Security Assessment Types.

In the work of April Wright, who appears to have originated the idea of these alternative team colors, the new ones look like this:

Yellow: Builder

Green: Builder learns from defender

Orange: Builder learns from attacker

Other groups have proposed a White Team focused on compliance and audit.

The Green Team

The earlier in a term’s life, the more its definition is likely to drift.

Here I’ll discuss the concept of a Green Team, which in my experience has a somewhat different meaning from the definition above. Based on numerous conversations and a good amount of research, my best definition of a Green Team is the following:


Note that this is not a settled definition, as the term is very new and has yet to be widely adopted. Taking it as a starting point, though, here’s how I break down its most important components:

The team has an adversarial/offensive security focus, meaning its discovery techniques come from Red Team and/or penetration testing mindsets.

Their mission is fixing things as efficiently as possible, across as much of the organization as possible.

Differences between Green and Red

The key difference is what’s done with the results.

Using this definition, the primary difference between Green and Red is that Red is focused on improving the Blue Team, meaning the company’s ability to detect and respond to the Red Team and real adversaries.

The Green Team, on the other hand, is focused on removing as many of the vulnerabilities and misconfigurations used by the Red Team as possible, and doing so as efficiently as possible across the entire organization. They look for where mistakes are being made at an organizational level and go to the source to change behavior.

Where the Red Team helps Blue to detect and respond, the Green Team uses those same skills to remove footholds for attackers across the company.

It’s about moving fixes as far left as possible.

Examples include working with the people who build new Linux images, with cloud admins, or with app developers to make sure all these groups use secure defaults, disable older protocols, enable logging, and do anything else that reduces attack surface and removes footholds for attackers.

Team structures

Red and Green are similar in many ways. Both use the attacker mindset and highly manual techniques to do things that automated scanners miss when looking for vulnerabilities.

Discovery can potentially be unified across teams.

Additionally, both can potentially be broken into two phases: 1) discovery and 2) follow-up. For Red, you first find issues by running your campaigns and then share that information with the Blue Team. For Green, you first find issues and then go to the various groups within the organization to address them at the most fundamental (build and configure) levels.

This makes it possible to unify offensive discovery to some degree, or in some situations, and then break off into the separate learning phases once issues are found. In other cases it’s best to have each team handle its own discovery.

Summary

Red uses the offensive security mindset to discover issues that improve Blue.

Green uses the same techniques to fundamentally address the issues that allow Red to succeed.

Both have discovery and follow-up phases, and some organizations may be able to combine parts of them.

The Green Team designation is still quite young; it may not survive, or it may evolve into a meaning not captured here.

TL;DR: Green is about the systemic addressing of issues discovered by offensive security testing.