The 2 Current Major AI Bottlenecks

I’ve been going hardcore on using GPT to create essays, reports, and other kinds of analysis. I’ve had tons of success with it, and it’s given me a clear view of the limitations of the current tech.

I’m not complaining. This stuff is brand-new.

Here are the current limitations that I expect to be addressed very soon, and fixing them will multiply utility by orders of magnitude.

1. Key Moment Extraction

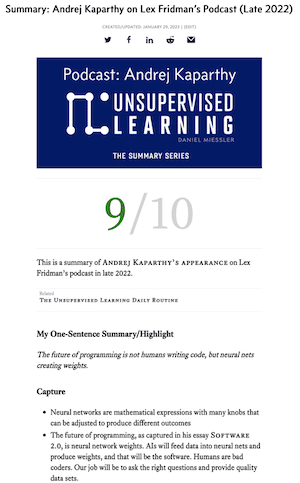

I am able to create extraordinary summaries, reports, write-ups, etc. on pretty much anything. And in any format, including writing very similar to myself.

A screenshot from one of my AI-generated summaries

But it requires content. Meaning, in order to produce one of these really amazing summaries, I have to take the notes. For the summary above I watched the entire Karpathy/Lex podcast, which took me over 2 hours at 1.5x. It was a lot of notes.

Plus I had to write short notes so I didn’t overwhelm GPT with too many tokens. So the act of generating the content required both tons of time and high skill.
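To make the token problem concrete, here is a rough Python sketch of trimming a list of notes to a budget. It assumes the common rule of thumb of about 4 characters per token for English text, which is only an approximation; a real tokenizer will give different counts. The names `estimate_tokens` and `fit_notes_to_budget` are made up for illustration.

```python
def estimate_tokens(text: str) -> int:
    """Very rough estimate, assuming about 4 characters per token."""
    return max(1, len(text) // 4)


def fit_notes_to_budget(notes: list[str], max_tokens: int) -> list[str]:
    """Keep notes in their original order until the estimated budget is used up."""
    kept: list[str] = []
    used = 0
    for note in notes:
        cost = estimate_tokens(note)
        if used + cost > max_tokens:
            break  # the next note would push us over the budget
        kept.append(note)
        used += cost
    return kept
```

This simply drops whatever doesn't fit, which is exactly why writing short, dense notes matters: the more concise each note is, the more of them survive the cut.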

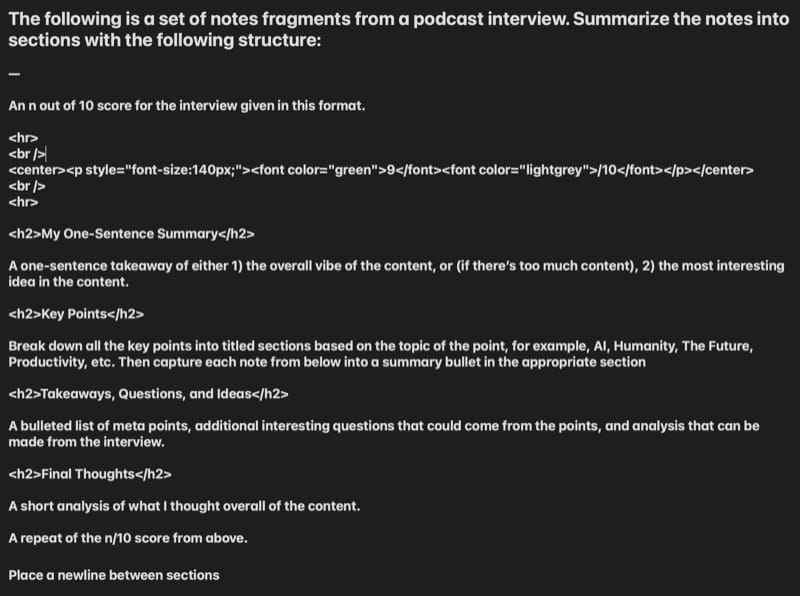

From there the AI could do amazing things with a prompt like this:

The prompt I used to create summaries like the one above

I’ve had the same sort of results with the security reports I write for my company’s consulting work. AI can help me write a great report, but I still have to do the interviews and capture them both thoroughly and concisely, which is an art and a science of its own.

So that’s:

- Ask the right questions
- Capture the right part of the answers
- Capture them concisely in a way the AI can work with when writing a report/summary

Now think about human conversations. That’s voice. Audio. Now think of scenes. Like a busy bazaar in Iraq. Or a schoolyard. Or a video podcast. Or an audio podcast.

These are all mediums in which interesting things happen. And if you want AI to be able to tell you a story about those events, you need to be able to pass the algorithm data. So you need extraction.

So, one of the next major leaps in what tech like GPT can do will be observing a given thing, like a meeting, or a podcast, or whatever, and extracting the key points.

Those then become the bullets that can be passed to AI for summarization, analysis, follow-up, and reporting.
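Here is a toy Python sketch of that flow: transcript segments go in, a few "key moments" come out as bullets, and the bullets get wrapped into a prompt. The scoring is a deliberately naive keyword count standing in for whatever a future model would actually do, and the cue phrases, function names, and prompt wording are all assumptions for illustration.

```python
# Hypothetical cue phrases; a real extractor would not rely on a fixed list.
CUE_PHRASES = ("the key", "important", "surprising", "i think", "the problem")


def score_segment(segment: str) -> int:
    """Count how many cue phrases show up in a segment (naive stand-in)."""
    text = segment.lower()
    return sum(text.count(cue) for cue in CUE_PHRASES)


def extract_key_moments(segments: list[str], top_k: int = 3) -> list[str]:
    """Return up to top_k highest-scoring segments, in their original order."""
    ranked = sorted(
        range(len(segments)),
        key=lambda i: score_segment(segments[i]),
        reverse=True,
    )
    chosen = sorted(ranked[:top_k])  # restore transcript order
    return [segments[i] for i in chosen if score_segment(segments[i]) > 0]


def build_summary_prompt(bullets: list[str]) -> str:
    """Turn extracted bullets into a prompt for a summarization model."""
    lines = "\n".join(f"- {bullet}" for bullet in bullets)
    return "Summarize the key points below into a short report:\n" + lines
```

The point is the shape of the pipeline, not the heuristic: swap `score_segment` for something that actually understands audio or video, and the rest of the flow stays the same.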

2. Custom Corpus Training

Next on the list is being able to supplement a general model with training on a massive body of work, such as a company’s inner workings, or a person’s life.

Imagine GPT-5, but in addition to the model which has tons of innate wisdom, you also train a sidecar model on every piece of data you have on your business. Every document. Your entire Salesforce instance. All your Slack messages. All your finances. All your business intelligence. Everything.

Now you have a god machine for your business.
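The unglamorous first step for any sidecar like that is pulling all those sources into one uniform corpus. A minimal Python sketch, assuming the data has already been exported as plain text per source; the record layout (`source` and `text` fields, one JSON object per line) is a hypothetical format, not what any particular training service expects.

```python
import json


def build_corpus_records(sources: dict[str, list[str]]) -> list[str]:
    """Flatten documents from many sources into JSON lines tagged by origin."""
    records: list[str] = []
    for source, documents in sources.items():
        for text in documents:
            cleaned = " ".join(text.split())  # collapse stray whitespace
            if cleaned:  # skip empty documents
                records.append(json.dumps({"source": source, "text": cleaned}))
    return records
```

Something like `build_corpus_records({"slack": [...], "docs": [...]})` gives you one line per document. This only covers data prep; the actual training on top of it is the part that doesn't exist yet in the form described here.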

Oh, and don’t worry, businesses are starting right now to do this. The good news is it’ll make your business tremendously efficient. The bad news is that you’ll need a lot fewer people for the tasks they’re currently working on. Because AI will be able to do those tasks instead.

But what’s even crazier is doing this for humans. So you have GPT-7 or whatever, and now you pay LifeScan to come in, talk to all your friends and family, scan all your photos from the time of your birth. They capture everything you’ve ever written or said that there’s a record of. They interview you for 40 hours using an AI-designed interview process.

You describe your fears. Your fantasies. Your preferences and tastes. Your goals and aspirations. Your hangups and your peculiarities. Favorite shows, favorite movies, favorite reading. Oh, and your genome data. All with your permission of course.

Well, now you can talk to yourself. Not just yourself, but a better version of yourself. Even better, you can talk to the best therapist in the world. Actually multiple therapists of different styles. And they know more about you than anyone, even yourself.

Why? Because of the personal training data you gave it? Nope. Because of that data combined with the larger model with hundreds of billions of parameters.

Now you have a god machine for yourself.

When we unlock these two features, and combine them with what we’re already seeing with these transformer models, things are going to get truly nutty. In a good way. Probably. Mostly.

I’m a little scared, but mostly excited. I cannot stop messing with this stuff, honestly.

If you’re the same, you should follow along in the journey.

See you in the next one.