RobotsDisallowed: Find Content People Don’t Want You to See

I’ve just launched RobotsDisallowed, a project that helps web security testers find sensitive content on target sites.

The concept was pioneered by the now-dead RAFT project, and is based on pulling the Disallow entries from the internet’s robots.txt files.

Basically, the robots.txt file is where webmasters tell the search engines, "Don’t look here," and it often points straight at truly sensitive content. I’ve seen things like:

/admin/

/backdoor/

/site-source/

/credentials/

/hidden/

…where people have stored usernames and passwords, the source code for dynamic websites, SSH keys, admin interfaces that didn’t have a password…and many more. Seriously, it’s unbelievable what people put in their robots.txt.

The project

So what the RobotsDisallowed project does is take the Alexa Top 1 Million sites, pull all their robots.txt files, and extract all the Disallow directives into a single file.
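The extraction step can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the function names `disallowed_paths` and `rank_paths` are hypothetical, and it assumes the robots.txt files have already been downloaded as strings. Counting each path once per site is also an assumption about how a "most common" ranking might be built.

```python
from collections import Counter


def disallowed_paths(robots_txt: str) -> list[str]:
    """Return the non-empty Disallow values found in one robots.txt body."""
    paths = []
    for line in robots_txt.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        key, sep, value = line.partition(":")
        # Field names are matched case-insensitively; an empty Disallow
        # value means "allow everything", so it is skipped.
        if sep and key.strip().lower() == "disallow":
            value = value.strip()
            if value:
                paths.append(value)
    return paths


def rank_paths(robots_files: list[str]) -> list[tuple[str, int]]:
    """Rank paths by how many robots.txt files disallow them."""
    counts = Counter()
    for text in robots_files:
        # Use a set so a path repeated within one file counts once.
        counts.update(set(disallowed_paths(text)))
    return counts.most_common()
```

A shorter list could then be cut from the ranking with something like `[path for path, _ in rank_paths(files)[:100]]`.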

I’m also breaking the file into smaller versions, e.g., the top 10, 100, and 1000 directories, in case you have a time-limited assessment.

You can then use these files to augment your discovery process during a web assessment using tools like Burp Intruder. Just feed it the file as the payload for a GET request, and see if you get any hits.
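If you would rather script this than use Burp Intruder, the same idea can be sketched in a few lines of Python. This is only an illustration under some assumptions: `fetch_status` and `find_hits` are hypothetical names, the base URL is a placeholder for a target you are assessing, and treating anything other than a 404 as a "hit" is a simplification you would likely tune per site.

```python
import urllib.error
import urllib.request
from urllib.parse import urljoin


def fetch_status(url, timeout=5.0):
    """Send a GET request and return the HTTP status, or None if unreachable."""
    req = urllib.request.Request(url, method="GET")
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses still carry a useful status code (e.g. 403).
        return err.code
    except urllib.error.URLError:
        # DNS failure, refused connection, timeout, and similar.
        return None


def find_hits(base_url, paths, fetch=fetch_status):
    """Request each path under base_url and keep those that are not 404."""
    hits = []
    for path in paths:
        url = urljoin(base_url, path)
        status = fetch(url)
        if status is not None and status != 404:
            hits.append((url, status))
    return hits
```

The `fetch` parameter is there so the status check can be swapped out, for example to add rate limiting or to try the logic without touching the network.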

TL;DR: If you’re a web tester, you should strongly consider adding this to your repertoire.

Notes

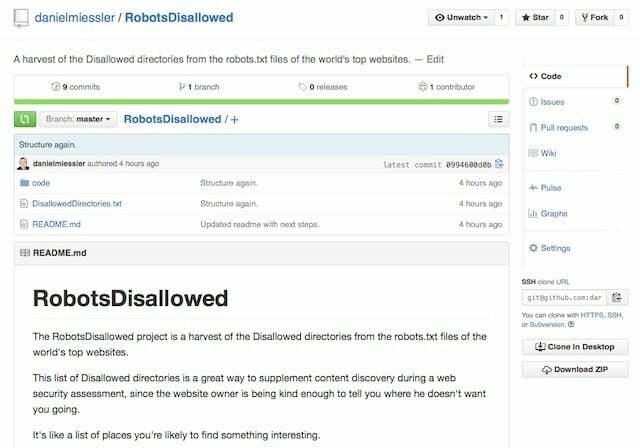

The RobotsDisallowed project can be found on GitHub.

The lists will also be added to the SecLists project.

I plan on keeping the lists up to date from year to year.

If you have any ideas for what we can do to improve the project, let me or Jason Haddix know.