Preparing to Release the OWASP IoT Top 10 2018 (Updated: Released)

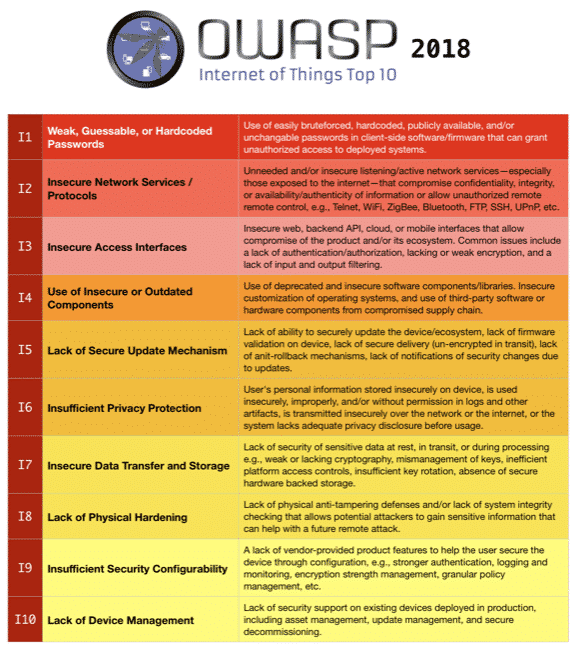

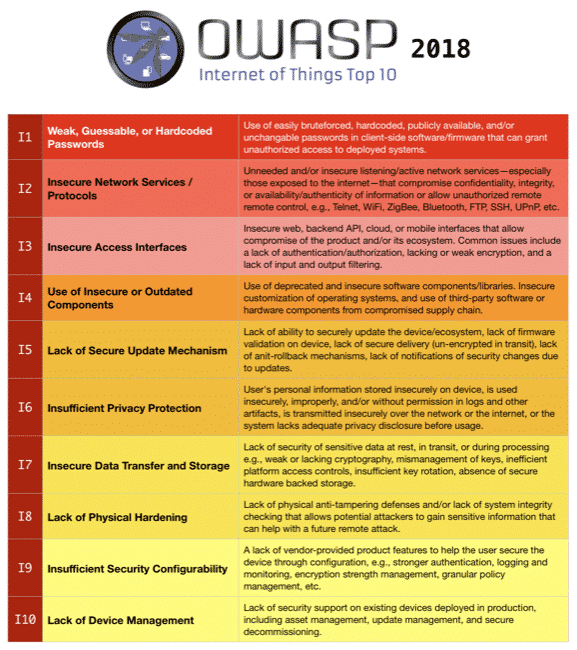

The OWASP IoT Top 10 2018 (Draft)

[ UPDATE: The project is now live here. ]

Please give your feedback on the list here.

The OWASP Internet of Things Top 10 has not been updated since 2014, for a number of reasons. The first was that we released a new umbrella project that removed focus from the Top 10 format. In retrospect, this seems to have been a mistake.

The idea was to just make a vulnerability list, and get away from the Top 10 concept.

As it turns out, people like Top 10 lists, and things have changed enough in the last few years that the team has been working this year to update the project for 2018.

This is what we’ve come up with so far, and I want to talk through some of the philosophy and methodology behind this year’s release.

Philosophy

The philosophy we worked under was one of simplicity and practicality. We don’t believe the big data approach works as well as people hope, i.e., collecting datasets from hundreds of companies, looking at hundreds of thousands of vulnerabilities, and then hoping the data will somehow speak wisdom to us.

The quality of the project comes from picking great team members who have the most experience, the least bias, and the best attitudes toward the project and collaboration.

Many of us on the team have been part of the OWASP rodeo for quite some time, and what we’ve learned over the years is that ultimately—no matter how much data you have—you end up relying on the expertise and judgment of the team to create your final product. In the end it’s the people.

That being said, we wanted to take input from as many sources as possible to avoid missing vulnerabilities, or prioritization insights, that others have captured elsewhere. Our aim was to combine the best of what we knew from across the industry, whether that’s actual vulnerability data or curated standards and guidance from other types of IoT projects.

What kind of widgets are these?

One thing we deliberately wanted to sidestep was the religious debate around whether to call the items in the Top 10 vulnerabilities, threats, or risks. We solved that by referring to them as things to avoid. Again, practicality over pedantry.

Who is the audience?

The next big consideration from a philosophy standpoint was who this list is meant to serve. The classic three options are developers/manufacturers, enterprises, and consumers. One option we looked at early on was creating a separate list for each. We might still do this as a later step, but having learned our lesson from removing the Top 10, we decided it’s hard enough getting people to focus on a single list, so three would make it at least three times as hard.

So the 2018 IoT Top 10 is a composite list for developers/manufacturers, enterprises, and consumers.

Methodology

Given that as a backdrop, here are the source types we collected from to start our analysis:

A number of sanitized *Top N* vulnerability lists from major IoT manufacturers.

Parsing of a number of relatively recent IoT security standards and projects, such as CSA’s Future-proofing the Connected World, ENISA’s Baseline Security Recommendations for IoT, Stanford’s Internet of Things Security Project, NIST’s Draft 8200 Document, and many more.

Evaluation of the last few years of IoT vulnerabilities and known compromises to see what’s actually attracting attention and causing damage.

Many conversations with our networks of peer security and IoT experts about the list, to get feedback on priority and clarity.

We very quickly saw some key trends from these inputs.

The weak credential issue stuck out as the top issue basically everywhere.

Listening services were almost universally second across all inputs.

Privacy kept coming up in every list, project, and conversation.

These repeated appearances in projects, standards, and conversations led to them being weighted heavily in the project.

Prioritization is critical

And that brings us to weighting.

We saw the project as basically being two phases:

Collecting as many inputs as possible to ensure that we weren’t blind to a vector, vulnerability, category, etc.

Determining how to rank the issues to do the most good.

Naturally the ratings are based on a combination of likelihood and impact, but in general we leaned slightly more on the likelihood factor because the main attack surface for this list is remote. In other words, it might be (and usually is) much worse to have physical access than to have telnet access, but if the device is on the internet then billions of people have telnet access, and we think that matters a lot.

This is why Lack of Physical Hardening is I8 and not I4 or I5, for example.

So our weighting was ultimately a combination of probability and impact, with significant attention to how common an issue is, how accessible it usually is to attackers, and how often it’s actually being used and seen in the wild. We leaned on our vulnerability lists from vendors quite a bit here, treating them as a signal that those issues are actually being found in real products.
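
To make the "lean slightly toward likelihood" idea concrete, here is a rough sketch of how such a weighting could be expressed. This is not the formula the team used (we relied on judgment, not a calculator). The 0.6/0.4 weights, the 1 to 5 scales, and the example scores are all hypothetical and picked only to illustrate the effect.

```python
from dataclasses import dataclass

# Hypothetical weights: likelihood counts slightly more than impact.
LIKELIHOOD_WEIGHT = 0.6
IMPACT_WEIGHT = 0.4


@dataclass(frozen=True)
class Issue:
    name: str
    likelihood: int  # 1 (rarely reachable) to 5 (reachable by anyone online)
    impact: int      # 1 (minor) to 5 (severe)


def score(issue: Issue) -> float:
    """Weighted sum of likelihood and impact for one issue."""
    return LIKELIHOOD_WEIGHT * issue.likelihood + IMPACT_WEIGHT * issue.impact


def rank(issues: list[Issue]) -> list[Issue]:
    """Return issues ordered from highest to lowest score."""
    return sorted(issues, key=score, reverse=True)


# Illustrative scores only, not project data.
issues = [
    Issue("Lack of physical hardening", likelihood=3, impact=5),
    Issue("Exposed telnet service", likelihood=5, impact=3),
]

ranked = rank(issues)
for position, issue in enumerate(ranked, start=1):
    print(position, issue.name, round(score(issue), 2))
```

With equal weights these two made-up issues would tie. Leaning toward likelihood is what pushes the remotely reachable one above the physical one, which is the same reasoning that put Lack of Physical Hardening lower on the list.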

The ask of you

So what we have now is a draft version that we’re looking to release as final within the next month or so. What we’d like is input from the community. Specifically, we’d love to hear thoughts on two aspects:

List contents, e.g., why did you include X and not Y?

List prioritization, e.g., you should put A lower and B higher.

Any other input would honestly be appreciated as well. If you want to join the project, you can do that at any time. It’s been an open project throughout, and we’ve had many people join and contribute. We meet every other Friday in the OWASP Slack’s #iot-security channel at 8 AM PST.

You can give us feedback there, or you can hit me up directly on Twitter at @danielmiessler.

The team

I really want to thank the team for coming together on this thing. It’s honestly been the smoothest, most pleasant, and most productive OWASP project I’ve ever been part of, and I attribute that to the quality and character of the people who were part of it.

Thanks to Jim Manico for the input as well!

Vishruta Rudresh (Mimosa): Mimosa was extraordinarily awesome throughout the entire project. She was at virtually every meeting, and took over meetings when I could not attend. Her technical expertise and demeanor made her an absolute star of a team member.

Aaron Guzman: Aaron is an OWASP veteran and all-around badass in everything IoT Security. His input and guidance were invaluable throughout the entire process.

Vijay Pushpanathan: Vijay brought his significant IoT security expertise and professionalism to help provide constant input into direction and content.

José Alejandro Rivas Vidal: José was a consistent contributor of high-quality technical input and judgement.

Alex Lafrenz: Significant and quality input into the list formation and prioritization.

Charlie Worrell

Craig Smith

Daniel Miessler

HINT: No egos.

Seriously—I wish all OWASP projects could be this smooth. The team was just phenomenal. If anyone wants to hear how we managed it, reach out to me and I’ll try to share what we learned.

Summary

We’re updating the OWASP IoT Top 10 for the first time since 2014.

It’s a combined list of vulnerabilities, threats, and risks.

It’s a unified list for manufacturers/developers, enterprises, and consumers.

Our methodology was to go extremely broad on collection of inputs, including tons of IoT vulnerabilities, IoT projects, input from the team members, and input from fellow professionals in our networks.

We combined probability with impact, but slightly favored probability due to the remote nature of IoT.

We need your help in improving the draft before we go live sometime in October.

Please give your feedback here!

Thank you!