I Built Two Claude Code Features a Week Before Anthropic Released Them

I'm not the type who brags, but I have to brag about this.

I guess it's not really bragging. It's more like validation.

Anyway.

I'm basically in love with Claude Code, for multiple reasons, and I have been for months. You know that by now. I've built a whole open source project around it and have constructed my entire AI ecosystem on top of it.

And now this thing has happened twice:

Twice I've built a complete, full-featured system into Kai (my personal AI infrastructure), and then less than a week later, Anthropic actually released the same functionality into Claude Code itself.

The Two Features

Feature 1: Universal File-based Context (UFC)

Before Claude Code existed, I was already building what I called the Universal File-based Context system. Basically just using the file system to manage AI context and history.

The Problem

Every AI conversation was ephemeral. Context disappeared between sessions. I'd have the same conversations over and over because the AI had no memory of what we'd built together.

What I Built

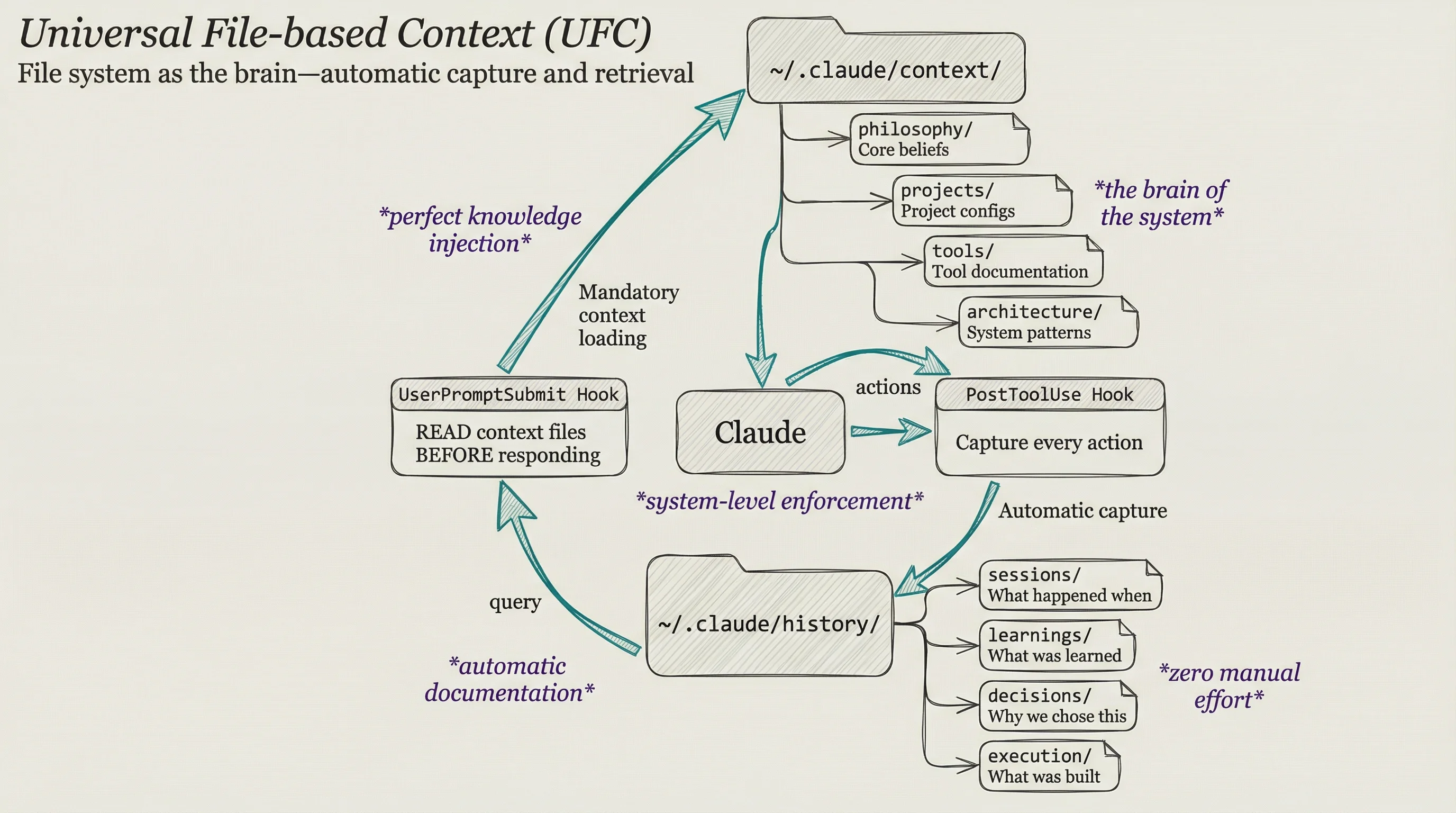

Universal file system-based context. It had three parts:

- ~/.claude/context/ - The brain: system prompt, user preferences, active project state

- ~/.claude/prompts/ - Task-specific prompts that load based on what I'm doing

- ~/.claude/history/ - Automatic capture of sessions, learnings, research, and decisions

Hooks would automatically inject the right context at session start and capture the good stuff at session end.
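To make the three-directory idea concrete, here is a minimal sketch of the two hook jobs: assembling context at session start and writing a history note at session end. It assumes the directory layout above, Markdown files inside it, and a hook whose printed output ends up in the session context; the function names and the history subfolder scheme are hypothetical, not Kai's actual code.

```python
from __future__ import annotations

from datetime import datetime, timezone
from pathlib import Path


def build_session_context(base: Path, task: str | None = None) -> str:
    """Session start: concatenate everything in context/, plus one task prompt."""
    parts = []
    context_dir = base / "context"
    if context_dir.is_dir():
        # Always-on context: system prompt, preferences, project state.
        for path in sorted(context_dir.glob("*.md")):
            parts.append(f"## {path.name}\n{path.read_text()}")
    if task:
        # Task-specific prompt, loaded only when it matches what I'm doing.
        prompt = base / "prompts" / f"{task}.md"
        if prompt.is_file():
            parts.append(f"## prompt: {task}\n{prompt.read_text()}")
    return "\n\n".join(parts)


def capture_session(base: Path, kind: str, text: str) -> Path:
    """Session end: write a timestamped note into history/<kind>/."""
    target_dir = base / "history" / kind
    target_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%f")
    target = target_dir / f"{stamp}.md"
    target.write_text(text)
    return target
```

A start hook would call `build_session_context(Path.home() / ".claude", task=...)` and print the result; an end hook would pass a summary of the session to `capture_session` under a kind such as `learnings` or `decisions`.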

What Anthropic Later Released

On October 16, 2025, Anthropic released Agent Skills—a complete system for extending Claude with modular capability packages. But it wasn't just "here's a folder structure." They built a sophisticated architecture:

Progressive Disclosure (3 Levels):

- Level 1 - Metadata: Skill name and description pre-loaded in the system prompt, so Claude knows what's available without loading everything

- Level 2 - Core Instructions: The full SKILL.md file loads only when Claude determines the skill applies to the current task

- Level 3+ - Nested Resources: Additional files load dynamically, only as needed, so the amount of context a skill can bundle is effectively unbounded

Dynamic Loading: Rather than loading all skill content upfront, Claude uses filesystem tools to request specific files by name. This is exactly the "just-in-time context" pattern I'd been building with hooks—load context when relevant, not all at once.
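The three disclosure levels can be sketched as a small loader. This assumes each skill lives in its own folder with a SKILL.md whose frontmatter carries `name` and `description`; the parser handles only flat `key: value` lines rather than full YAML, and all helper names are hypothetical. It is an illustration of the pattern, not Anthropic's implementation, where Claude itself reads these files with its filesystem tools.

```python
from __future__ import annotations

from dataclasses import dataclass
from pathlib import Path


@dataclass
class SkillMeta:
    name: str
    description: str
    path: Path  # folder that contains SKILL.md and any bundled files


def read_frontmatter(skill_md: Path) -> dict[str, str]:
    """Parse simple `key: value` lines between the leading '---' fences."""
    lines = skill_md.read_text().splitlines()
    meta: dict[str, str] = {}
    if not lines or lines[0].strip() != "---":
        return meta
    for line in lines[1:]:
        if line.strip() == "---":
            break
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta


def index_skills(root: Path) -> list[SkillMeta]:
    """Level 1: collect only name and description for every skill."""
    skills = []
    for skill_md in sorted(root.glob("*/SKILL.md")):
        meta = read_frontmatter(skill_md)
        skills.append(SkillMeta(meta.get("name", skill_md.parent.name),
                                meta.get("description", ""),
                                skill_md.parent))
    return skills


def metadata_prompt(skills: list[SkillMeta]) -> str:
    """The short listing that stays in the system prompt at all times."""
    return "\n".join(f"- {s.name}: {s.description}" for s in skills)


def load_instructions(skill: SkillMeta) -> str:
    """Level 2: the full SKILL.md, read only once the skill looks relevant."""
    return (skill.path / "SKILL.md").read_text()


def load_resource(skill: SkillMeta, relative: str) -> str:
    """Level 3+: a bundled file, fetched by name only when it is needed."""
    return (skill.path / relative).read_text()
```

The design choice is that only `metadata_prompt` costs tokens on every turn; everything else is paid for only when a task actually touches that skill.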

Code Execution Integration: Skills can bundle scripts (Python, Bash) that Claude executes as tools. Their engineering blog describes this as providing "deterministic reliability that only code can provide" for operations better suited to code than token generation.

The parallels to what I built:

- My ~/.claude/prompts/ task-specific loading → Their progressive disclosure

- My hooks injecting context at session start → Their dynamic loading system

- My ~/.claude/context/ directory → Their SKILL.md architecture

I spent all this time building something I thought would be useful. Then, about a week later, it turned out they'd been working on the same thing, and they released a better, more native version of it. It made me so happy.

Feature 2: Dynamic Skill Loading

Then just a few days ago, Anthropic published a blog post called Advanced Tool Use.

I had just built something similar and taken it out of production because I thought it was overkill.

What I Built

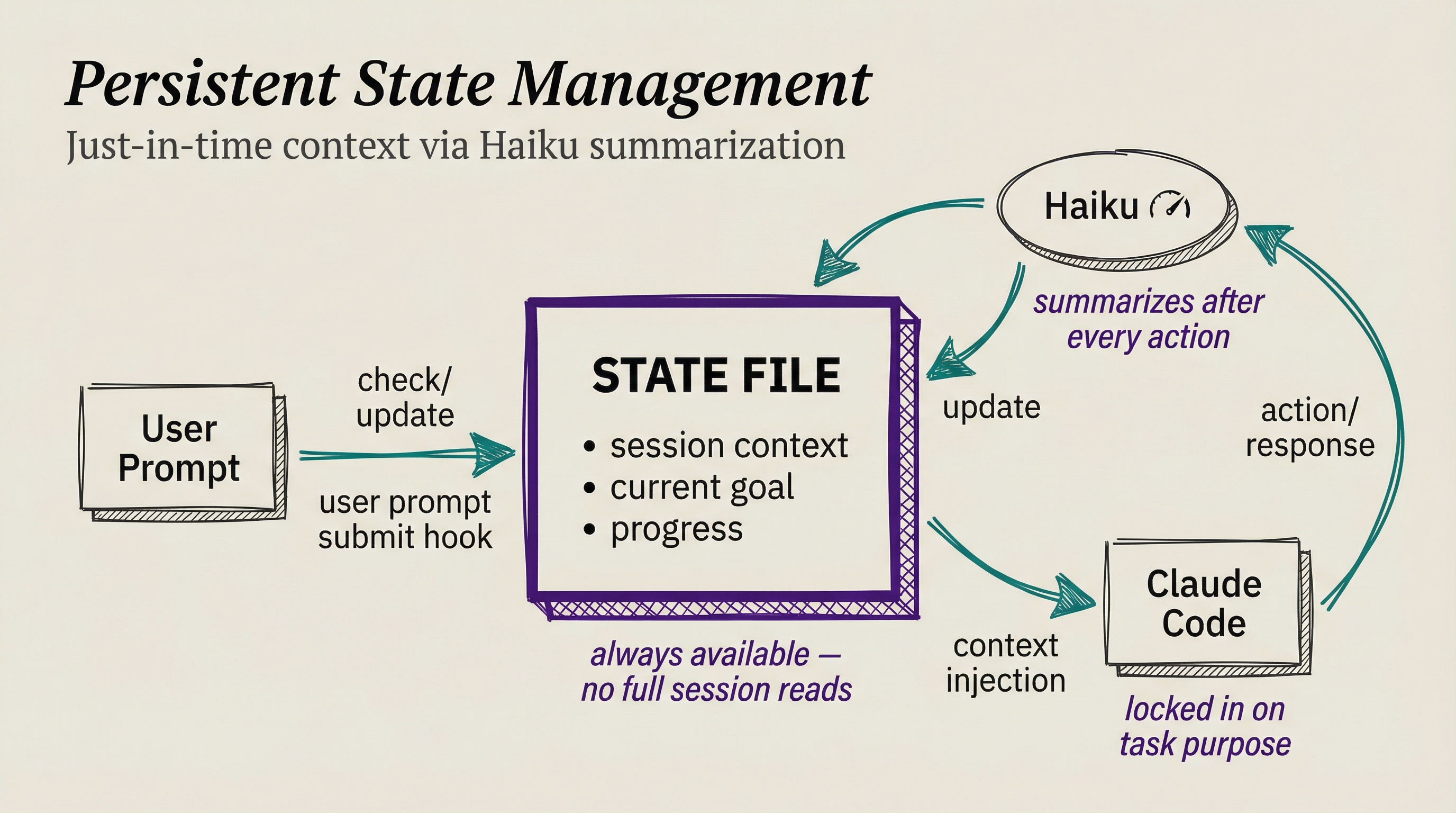

I built a persistent state management system that maintained a state file updated after every action. The architecture worked like this:

- State File: A dedicated file tracked the current session context—what we were working on, what the goal was, what had been accomplished

- Haiku Summarization: After each action, Haiku (fast and cheap) would quickly summarize the session state, so the system always had a compressed understanding of "what are we doing right now"

- UserPromptSubmit Hook: On every user message, the hook would read the state file to understand the current context, so I could say "push this" and it would know exactly what "this" meant

- No Full Session Reads: Because Haiku maintained the summary, the system never had to re-read the entire conversation history to understand context
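The loop described in that list can be sketched in a few lines. This assumes a JSON state file and treats the Haiku call as a pluggable function, since the post doesn't show the actual prompt or API call; the field names and helpers are hypothetical.

```python
from __future__ import annotations

import json
from pathlib import Path
from typing import Callable

# Hypothetical summarizer: (previous summary, latest action) -> new summary.
# In the real system this was a fast, cheap Haiku call; here it's any callable.
Summarizer = Callable[[str, str], str]


def update_state(state_file: Path, action: str, summarize: Summarizer,
                 goal: str | None = None) -> dict:
    """After every action: record it and refresh the compressed summary."""
    state = {"goal": "", "summary": "", "done": []}
    if state_file.exists():
        state = json.loads(state_file.read_text())
    if goal:
        state["goal"] = goal
    state["done"].append(action)
    # Only the previous summary and the new action go to the summarizer,
    # so the full conversation never has to be re-read.
    state["summary"] = summarize(state["summary"], action)
    state_file.write_text(json.dumps(state, indent=2))
    return state


def prompt_context(state_file: Path) -> str:
    """On every user prompt: a short note that tells the model what "this" is."""
    if not state_file.exists():
        return ""
    state = json.loads(state_file.read_text())
    return f"Current goal: {state['goal']}\nWhere we are: {state['summary']}"
```

The cost of this design is one extra model call per action, which is the kind of scaffolding weight that made the system feel excessive.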

The whole point was to keep Claude locked in on exactly what we were doing. Later in a Claude Code session—especially after multiple compactions—even Claude can start to lose the plot. This system was designed to be an external memory that never forgot the task purpose.

I ended up using it for only a couple of days before swapping it out, because I thought it was excessive scaffolding: too heavy for the benefit. I figured a better model or better native scaffolding would eventually solve the problem without all that machinery.

What Anthropic Published

Then they published this engineering blog on Advanced Tool Use. The key pattern they describe is the Tool Search Tool—a meta-tool that lets Claude discover capabilities on-demand instead of loading everything upfront.

The specific technique: Tools are marked with defer_loading: true to make them discoverable on-demand. Claude initially sees only the Tool Search Tool itself plus a few high-priority tools. Everything else gets loaded only when needed.
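Here is a local simulation of that idea, not a call to Anthropic's API. The tool list is made up and trimmed (real tool definitions also carry an input schema), `tool_search` is a hypothetical name, and the plain regex match merely stands in for whatever search Anthropic runs on its side. Only the `defer_loading` flag comes from the blog.

```python
from __future__ import annotations

import re

# Made-up tool definitions; only `defer_loading` is taken from the blog.
TOOLS = [
    {"name": "read_file", "description": "Read a file from disk", "defer_loading": False},
    {"name": "github_create_pr", "description": "Open a GitHub pull request", "defer_loading": True},
    {"name": "slack_send_message", "description": "Post a message to Slack", "defer_loading": True},
    {"name": "jira_create_issue", "description": "File a Jira issue", "defer_loading": True},
]


def initial_tools(tools: list[dict]) -> list[str]:
    """What the model sees up front: the search tool plus non-deferred tools."""
    return ["tool_search"] + [t["name"] for t in tools if not t.get("defer_loading")]


def tool_search(tools: list[dict], query: str) -> list[dict]:
    """Return full definitions of deferred tools whose name or description matches."""
    pattern = re.compile(query, re.IGNORECASE)
    return [
        t for t in tools
        if t.get("defer_loading")
        and (pattern.search(t["name"]) or pattern.search(t["description"]))
    ]
```

With this list, `initial_tools(TOOLS)` exposes only `tool_search` and `read_file`; a query like `"pull request"` then pulls in just the GitHub tool's definition.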

I ran my upgrade skill against this blog and it said, "Hey, you should implement this pattern." So I did—creating extremely abridged versions of skills at startup that save context, then dynamically loading full skill content only when Claude determines it's relevant. You can see the architecture in the diagram above.

The results from their testing:

"Instead of loading all tool definitions upfront, the Tool Search Tool discovers tools on-demand. Claude only sees the tools it actually needs for the current task."

And the token savings?

"This represents an 85% reduction in token usage while maintaining access to your full tool library."

They also found that Opus 4.5's accuracy improved from 79.5% to 88.1% with this pattern—fewer tokens meant less confusion, not less capability.

What This All Means

So basically, in both cases I had an idea that I thought would be super useful for Claude Code, and I implemented it. Then, a couple of days later (or, in the case of UFC, about a week later), it turned out the Claude Code team had been building this the whole time, and they released a better version.

On one hand you're like, "oh, they made it better." But on the other hand, I'm very happy, because it validates that I'm thinking about this whole context game correctly.

I've been talking about this since 2023. The scaffolding of a system is going to be incredibly important. I wrote a whole post about the 4 components of a good AI system, in which I argued that context is critical.

I feel like Anthropic gets this more than anyone, and especially the Claude Code team.

All this is really to say that I feel proud of myself for the fact that I seem to be thinking along the same lines as the Claude Code team, and in my own limited way I might even be a step ahead.

Sorry for the self-congratulations, but I just feel really excited about this.

See you in the next one.

Notes

- 🤖 AIL Level 2: Daniel dictated this post. Kai added links, images, and formatting. Learn more about AIL.