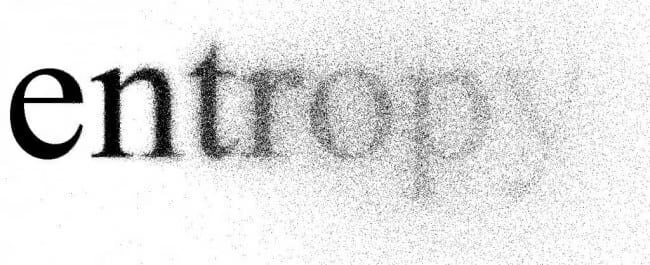

Entropy and Security

I’ve been obsessed with entropy since I learned about it in high school.

It’s highly depressing, but it’s awesome at the same time given how inevitable it is, and how it’s happening all the time and all around us.

I was just reading The Most Human Human, by Brian Christian, and it reminded me that I’ve wanted to write a short post on the topic for a while now.

Here are a few different definitions and concepts related to entropy, just as a flow of discrete statements.

These statements are not flawless; they’re designed to inspire you regarding the topic, not teach it technically.

Entropy, at its most base level, is disorder.

It’s the opposite of order, pattern, and potential.

As things move forward in time, they become less ordered—unless they’re receiving energy from an outside source (like the sun).

The endgame for the entire universe is heat death, which is a giant, consistent soup of disorder and lost potential.

One way to think about entropy is to imagine the light coming off of a light bulb in your living room. Energy cannot be destroyed, right? Well, where did that light go? It went into the objects it hit, and ever so slightly aroused them. It was absorbed and integrated into the things around it. But there’s no way to ever get it back. It’s gone. And any effort to retrieve it would take far more energy than it was ever worth.

In computer security, entropy also means disorder, which we call randomness.

In security we need sources of randomness as part of cryptography so that we can remove patterns from our secret messages. Patterns let attackers vary the inputs, watch how the outputs change, and work backward to the input behind an unknown output. The less random (lower entropy) the input, the higher the chance that such analysis will be successful.

Entropy in this sense is a measure of uncertainty. The more easily you can guess the next item in a sequence, the less entropy that sequence has.

Another way to rate or think of entropy is the amount of surprise present in each consecutive data point. Maximum randomness has maximum surprise.
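To make "surprise" a bit more concrete, here is a minimal sketch that estimates Shannon entropy in bits per byte from how often each byte value appears. The function name `shannon_entropy` is my own, and this is only a frequency-based estimate, not a full measure of how predictable a sequence is.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Estimate entropy in bits per byte from byte frequencies alone."""
    if not data:
        return 0.0
    counts = Counter(data)
    total = len(data)
    # Each symbol contributes p * log2(1/p): rare symbols are more surprising.
    return sum((c / total) * math.log2(total / c) for c in counts.values())


if __name__ == "__main__":
    print(shannon_entropy(b"aaaaaaaa"))        # one repeated symbol, no surprise
    print(shannon_entropy(b"abababab"))        # two equally likely symbols
    print(shannon_entropy(bytes(range(256))))  # every byte value equally likely
```

Note that this only looks at frequencies. The sequence `abababab` scores one bit per byte here even though you could guess every next item, so a high score on an estimate like this is not proof of real randomness.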

A big problem occurs in computer security when something is considered to be random when it isn’t, like taking the time from a system clock. Time is predictable, so all an attacker has to do is make multiple guesses as to the time when that entropy source was pulled, and they’ll have a chance at guessing (and therefore counteracting) that entropy. That turns it from disordered to ordered.
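Here is a rough sketch of the clock problem, assuming a hypothetical token generator that seeds Python's `random` module with a Unix timestamp. The names `make_token` and `recover_seed` and the timestamps are invented for illustration; the point is only that a small window of guesses is enough to rebuild the "random" value.

```python
import random
from typing import Optional


def make_token(seed: int) -> str:
    """Hypothetical weak generator: a 128-bit token seeded from a timestamp."""
    rng = random.Random(seed)
    return "%032x" % rng.getrandbits(128)


def recover_seed(token: str, approx_time: int, window: int) -> Optional[int]:
    """Try every second within +/- window of a guessed time."""
    for guess in range(approx_time - window, approx_time + window + 1):
        if make_token(guess) == token:
            return guess
    return None


if __name__ == "__main__":
    secret_time = 1_700_000_123           # assumed moment the token was created
    token = make_token(secret_time)
    # The attacker only knows roughly when it happened (within ten minutes).
    found = recover_seed(token, approx_time=1_700_000_000, window=600)
    print(found == secret_time)
```

The token looks like 128 bits of noise, but it carries only as much uncertainty as the attacker has about the clock. For anything security-related in Python, the standard library's `secrets` module (for example `secrets.token_hex`) draws from the operating system's randomness source instead.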

Perfect entropy is perfect randomness. It is a complete lack of patterns, organization, or regularity. It’s not lumpy, it’s not banded, it’s not sequenced. It’s just completely flat and unpredictable noise.

One of the best sources we’ve found for this level of randomness in nature is the radioactive decay of atoms.

Notes

Information can be seen as the reduction of uncertainty.