Authentication Types and Their Impact on Forced Device Access

We’re hearing a lot about what Apple is doing with the new iPhone, specifically facial authentication replacing Touch ID.

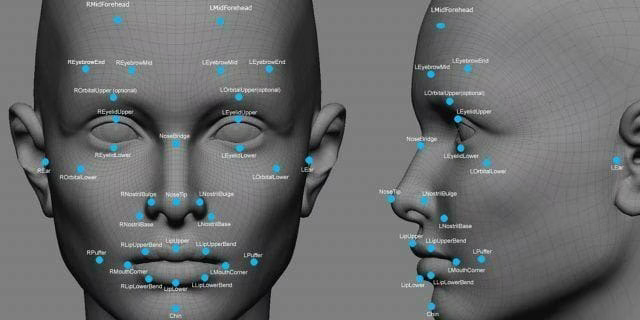

I think there is some cool stuff here, particularly the fact that a facial scan appears to capture far more data points than a fingerprint scan.

But there’s one aspect of the switch that I find especially interesting: forced unlocking, particularly by law enforcement.

Ever since smartphones took off, there has been a risk of law enforcement forcing you to give them access to your device, potentially to find evidence of something you’ve done.

It started with passwords. Depending on the law, they may or may not be able to compel you to give yours up. That case is fairly clear: either they pressure you into revealing a secret that’s in your head, or they confiscate the phone and attack the password mechanism (as in the San Bernardino case).

Then there was Touch ID. Touch ID is interesting because it’s more secure in a lot of ways, but it also gives police a different way to compel access. In many jurisdictions, different legal standards determine whether you can be forced to divulge a password versus forced to supply your finger. Apple has even built what’s been dubbed a “cop mode” into iOS 11, which lets you disable Touch ID if you think you’re about to deal with law enforcement.

And now we’re entering the world of facial- and iris-based authentication, which is another model entirely. You no longer need to get a secret out of someone’s head, or even get their body to touch something. To unlock the device, all you have to do is hold it up to their face.

It’s an odd mix of more and less secure, in ways that people are going to need to understand better.

Passwords are easier to guess than Touch ID is to brute-force. Facial scanning probably captures far more data points than a fingerprint. But it’s also getting easier and easier to force someone to authenticate using these methods.

Touch ID works even if the person is unconscious, and it will be interesting to see how easily someone’s face can be authenticated while they’re asleep (or worse).

The evolution of personal device authentication is a great example of why threat modeling is necessary to understand how effective various controls really are.
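One way to make that kind of threat-model comparison explicit is to write down, for each method, what an adversary has to obtain from the owner. The sketch below is illustrative only: `AuthMethod`, `METHODS`, `coercible_without_cooperation`, and `unconscious_risk` are hypothetical names, and the attribute values encode only the claims made in this post. Whether a facial scan works on an unconscious person is left as unknown (`None`), since the post treats that as an open question.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class AuthMethod:
    name: str
    # What an adversary must obtain from the owner to unlock the device.
    coercion_requirement: str
    # True if unlocking requires knowledge that only exists in the owner's head.
    needs_owner_knowledge: bool
    # Whether the method works on an unconscious owner; None means unknown.
    works_unconscious: Optional[bool]


# Hypothetical threat-model table built only from the claims in this post.
METHODS = [
    AuthMethod("password", "the secret in the owner's head", True, False),
    AuthMethod("Touch ID", "the owner's finger on the sensor", False, True),
    AuthMethod("facial scan", "the device held up to the owner's face", False, None),
]


def coercible_without_cooperation(methods: List[AuthMethod]) -> List[str]:
    """Methods an adversary can satisfy without the owner revealing a secret."""
    return [m.name for m in methods if not m.needs_owner_knowledge]


def unconscious_risk(methods: List[AuthMethod]) -> Tuple[List[str], List[str]]:
    """Split methods into those known to work on an unconscious owner and unknowns."""
    known = [m.name for m in methods if m.works_unconscious is True]
    unknown = [m.name for m in methods if m.works_unconscious is None]
    return known, unknown


if __name__ == "__main__":
    print(coercible_without_cooperation(METHODS))  # ['Touch ID', 'facial scan']
    print(unconscious_risk(METHODS))  # (['Touch ID'], ['facial scan'])
```

The point of a table like this is that each new row forces you to answer the same questions (what must be coerced, and whether the owner must be conscious) rather than judging “more secure” on a single axis.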

Notes

Here’s an example of how not to do it.