AI-powered Visual Sensors Will Be Wielded by Digital Assistants to Build Us Custom UIs

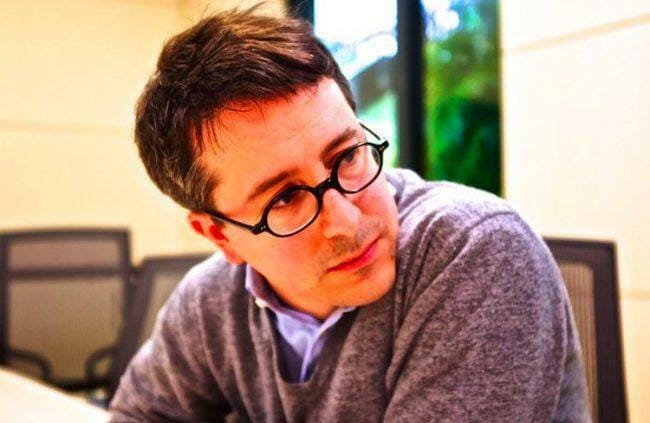

Benedict Evans is one of my favorite tech analysts. His content is usually great, I often agree with it, and his presentation style is quite unique. His annual presentation on technology trends should not be missed.

I had a chat with him in person once, and it was disappointing to show him my long-term thoughts on where technology was going only to have him completely dismiss the ideas as obvious and vague.

Basically my book highlights what I think humans want from technology long-term, which can be predicted. And it then breaks down those desires into modules or components, which all fit together. I also talk about how some of the tech itself will likely work in a somewhat specific sense.

I wasn’t terribly offended about it, since he works for a VC company that focuses on what they can invest in immediately. So the bias for the tangible was understandable.

Benedict didn’t really hear the specifics of what I was saying, and instead latched onto the grandiose, high-level framing. He heard, "And digital assistants will do this, and they’ll do that…", and immediately assumed it was just capability prediction, not anything useful in the short or mid-term. Crucially, he said everyone knew where we were going, and it was just a question of how we were going to get there.

I think this is quite wrong, actually, and over the last 5 years I’ve talked to a number of prominent technologists—including a few in charge of large research budgets—who, when they heard my entire outline, said that it was the clearest roadmap they’ve ever heard to what’s coming.

Anyway, this post isn’t about the meeting with Benedict, or how he is a bad person or anything. I think his stance was completely defensible given his role and the fact that he didn’t know me. And perhaps I could have presented it differently. Doesn’t matter.

What does matter is that I keep coming across more examples of my point.

In Benedict’s recent piece (which is excellent, by the way), he writes about how cameras will be able to look at scenes and figure out more and more about what they’re seeing.

He goes on to talk about how this will allow AI to answer deeper and deeper questions.

This is precisely what I talk about in the chapters, "Algorithmic Experience Extraction" and "Businesses as Daemons", where the feed from your sensors on your body will be shipped to dozens or thousands of very specific AI algorithms that will completely decipher what is being seen.
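To make the fan-out idea concrete, here is a minimal sketch of one sensor frame being handed to several narrow interpreters. Everything in it is an assumption for illustration: the names `extract_experience`, `detect_food`, and `detect_places` are hypothetical, and a frame is reduced to a list of string tags so the example runs without a real vision model.

```python
from typing import Callable, Dict, List

# A "frame" stands in for one slice of sensor data. Here it is just a
# list of string tags (an assumption made so the sketch is runnable).
Frame = List[str]
Interpreter = Callable[[Frame], List[str]]


def detect_food(frame: Frame) -> List[str]:
    # Hypothetical narrow algorithm: only reports food-related tags.
    return [f"food:{t}" for t in frame if t in {"pizza", "coffee"}]


def detect_places(frame: Frame) -> List[str]:
    # Hypothetical narrow algorithm: only reports location-related tags.
    return [f"place:{t}" for t in frame if t in {"restaurant", "door"}]


def extract_experience(
    frame: Frame, interpreters: Dict[str, Interpreter]
) -> Dict[str, List[str]]:
    # Send the same frame to every specialized interpreter and collect
    # each one's findings under that interpreter's name.
    return {name: fn(frame) for name, fn in interpreters.items()}


interpreters = {"food": detect_food, "places": detect_places}
findings = extract_experience(["door", "restaurant", "pizza", "tree"], interpreters)
print(findings)
```

In this toy version there are two interpreters; the point is only that each one is narrow, and that adding the thousandth is the same one-line registration as adding the second.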

The point of this is not to show that Benedict was wrong, or I was right—we’re both right, and we’ll both be wrong about some part of this as it moves forward.

I believe this enough to have three patents in-flight around what I talk about in the book.

The point is that I still believe it possible to dive deeper into our human requirements on technology, and to effectively and usefully reverse engineer specific technologies that can make those things happen.

The core premise of my book is:

The human has desires and needs, some passive and invisible (feeling depressed) and others explicit and overt (clicking a button or giving a command).

The digital assistant (DA) will be consuming all these pieces constantly.

The DA will also be parsing everything in the real world constantly, extracting meaning, and matching it to the principal’s state.

This way, the DA can anticipate requests, do things in the background, and generally improve the life of the principal in a continuous way.

Examples here include opening doors for you as you walk, having your food ready for you when you get into a restaurant, having your favorite sport playing on the display when you get there, etc.

Benedict is rightly talking about how computers will interpret inputs using language translation and object identification, and how this will all happen at increasing levels of complexity.

But who’s being obvious now? Of course that will happen.

The real question is how all those things will combine to fulfill real human needs and desires, and that is what I’m interested in. That is what I wrote my book about.

It is useful to move as far forward as possible into the tech, because you can then move backwards looking for milestones that we can possibly hit to make the longer term picture possible.

But we can’t do that if everyone is thinking short-term, which I think too many are.

Benedict thinks everyone sees what I see, but I think he got that wrong because he didn’t see it either.

The key innovation here is not the AI interpretation of objects in the view of its sensors. The key innovation will be the DA that merges those interpretations and matches them to current states within its principal, and then uses that combination to make requests to external Daemons (which are Businesses as Algorithms) to make things happen.
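A rough sketch of that matching step, under loud assumptions: the `Request` type, the `plan_requests` function, the daemon names, and the state keys (`hungry`, `walking`) are all hypothetical and invented for this example. Real daemons would be network services, not strings.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    daemon: str  # which business daemon to call (hypothetical name)
    action: str  # what the DA asks that daemon to do


def plan_requests(
    findings: List[str], principal_state: Dict[str, bool]
) -> List[Request]:
    # Match what the sensors saw against the principal's current state,
    # and emit a request only when both line up.
    requests: List[Request] = []
    if "place:restaurant" in findings and principal_state.get("hungry"):
        requests.append(Request("restaurant", "prepare_usual_order"))
    if "place:door" in findings and principal_state.get("walking"):
        requests.append(Request("building", "open_door"))
    return requests


state = {"hungry": True, "walking": False}
plan = plan_requests(["place:restaurant", "place:door"], state)
print(plan)
```

Notice that seeing a door is not enough to open it. The request only fires when the interpretation and the principal’s state agree, which is the part I think matters more than the object recognition itself.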

I understand that this is further out, but it doesn’t make it any less inevitable than the evolution that Benedict is talking about. It’s not like this requires AGI to happen. These are small incremental steps adding to each other until something extraordinary happens.

And my only point—in all of this—is to say that anyone trying to build the whole thing needs to keep their eye on the bigger picture as they build their incremental steps.

The companies that do this best will have a major advantage.