AI Art Just Opened The Threat to Human Work We Were Expecting from AGI

Let me start with the punchline: something like 80% of "knowledge work" is about to be replaced by artificial intelligence.

I’m not professionally educated or trained in AI, but I’ve read probably 30 books on the subject and spent thousands of hours thinking about it.

I am not talking about 20 to 40 years from now. That’s an easy prediction for such things, and when you go that far out it gets increasingly silly to even think about.


No, I’m talking about major attacks on knowledge work within 5 years, with something like 50% to 80% of knowledge work being doable by AI within 8-15 years. Whether it will actually be done by AI is another story, but the capabilities will be there.

Why would I think such a thing?

I know, you’ve heard this all before. I have too. We all have.

AI is taking over! Skynet! Blah blah blah

It’s a meme of a cliche of a meme at this point. But this isn’t that.

Silicon Valley, and indeed the entire world, is about to experience the biggest Gold Rush ever. It’s actually already starting, and you know it as AI Art.

And I know you’ve seen the art. Or at least heard about it. It’s cool. It’s impressive. But how does that get us to human work replacement?

AI Art only works because it deeply "understands" human concepts.

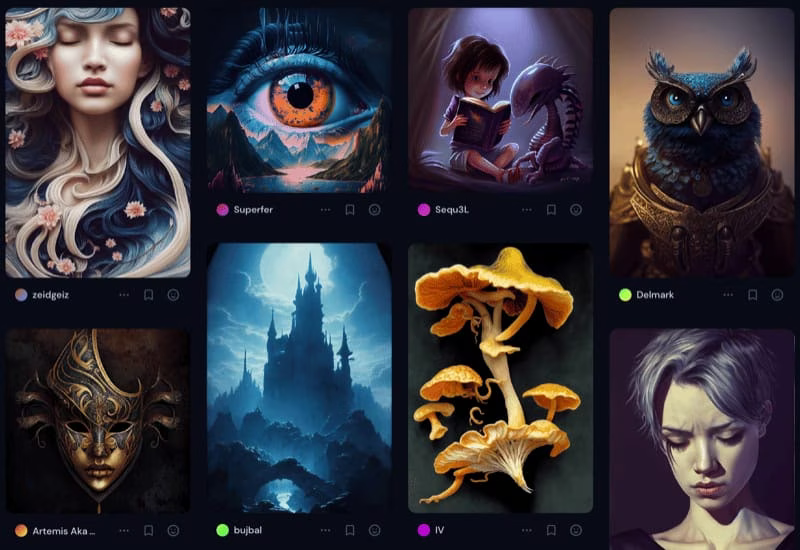

Look at these images.

Images From Midjourney’s Gallery

The AI is able to do this because it’s consumed billions of pieces of information about humans—from our creative output. It understands art styles, sadness, happiness, birds, cars, fights, kisses, space, toilets, and ice cream.

I just told Midjourney’s V4 engine to make:

a digital photo of a sad ice cream

A sad ice cream, by Midjourney Version 4

Now here’s the scariest part: the AI has no idea what sadness is. Not in any human sense.

But it might as well. Despite not knowing, it can emulate sadness brilliantly, and mix and match it with concepts like ice cream or marriage or courage.

The difference between effectively understanding and truly understanding just became moot.

What we missed isn’t that it’s smarter than we thought, but rather how much it can accomplish despite not being smart at all.

Ok, but what does that have to do with knowledge work?

Now, think about the nature of most knowledge work. It’s answering emails, wrangling people in Slack. Creating reports. Reading reports. Creating PowerPoint decks. Arranging meetings, conducting meetings, summarizing meetings, sharing summaries of meetings. Connecting SMEs, arranging events. Making decisions. Providing the data to help make decisions. Etc.

All those things are ice cream and sadness. They’re all just concepts that can be learned from looking at millions of examples. And once the AI has been shown those examples, it’ll be able to do things like the following (some of which are more distant and/or alarming than others):

Meetings

- Join all meetings and create summaries of what was said
- Provide statistics on how much everyone spoke
- Analyze their facial expressions for engagement vs. apathy
- Give them an Engagement™ score based on their recent interactions

Analysis

- Capture all new ideas into the incoming ideas workflow
- Rate the ideas based on creativity and originality
- See if they’ve been recommended and evaluated before
- If the idea is related to topic1, topic2, or topic3, send a summary of the idea to Tania
- Actually, since you’ve already read everyone’s email and Slack, if there’s a new idea that gets talked about seriously, send it to the appropriate L2 leader as a Slack summary

Action

- Turn all action items into the appropriate Jira tickets assigned to the appropriate team, and include all context from the meeting
- Follow up with regular pings of escalating importance, tied to escalations to higher bosses
- Read all open Jira tickets and see which actions can be done automatically given the AI’s access, e.g., locking down GitHub settings, or launching some infrastructure to A/B test an idea from a meeting and emailing participants and leaders with the results
- When the A/B testing comes back, make a recommendation on how to move forward based on the cost and current budgets plus the current economic outlook
- Factor in the company’s stated posture towards taking risks, moving fast, etc., which is included in its charter

Misc

- Suggest better wording when communicating with people based on knowing how they like to receive information
- Monitor all internal communications for toxicity, and take various automatic actions when it’s seen
- Same for insider threats like espionage, sabotage, etc.

The insane part in all of this is that those can be (and will be) their own businesses. Their own AI businesses. You can launch a startup to do almost every line item in there, and many such startups are already launching because their founders have figured out exactly what I’m describing here.

Art is the hand of the AI magician that you shouldn’t be watching.

This is the real AI gold rush. Forget the art. That was a parlour trick. But what it did was expose us to the insane capabilities of transformers and their ability to fill in the blank.

Summary

Shit is about to get crazy. We’re about to see an explosion of tech startups that are using transformers and other AI tech to automate human work. It’s going to feel like a tech boom, and it will be, but it’ll be a tech boom based on doing thousands of knowledge work tasks as well as humans, and then better than humans.

At scale. With no breaks. For a whole lot cheaper than having human staff.

And if you think you’re safe in management, how good do you think these systems will be at making most day-to-day decisions? We’ve already seen multiple examples of super-basic AIs making better decisions than judges, doctors, and all sorts of SMEs with significant experience. Many decisions made by leaders today will be easily done better by this type of AI.

The major separation will be between ideas and execution.

The safest will be those with the actual ideas, because execution and organization and such will be the realm of automation.

In short, AGI is not the threat we need to be worried about. A thing does not need to have feelings or self-awareness to do a job better than a human. And that’s what we just built. It’s not AGI, but it doesn’t matter.

What you can do

So here’s what I recommend based on this insane moment we’re about to enter.

If you’re looking for a first or new career, you should think very seriously about getting into AI. And not just the hard science of it (which will get increasingly exclusive), but the practical implementation side of it. Learn how to solve business problems using these tools. Welcome to Cyberdyne.

If you’re in any sort of knowledge work that includes lots of reading, parsing, and performing repetitive tasks, start thinking about alternatives.

If you have youngish kids, or are helping to guide some, make sure they understand what’s in this post so they can be ready. Try to steer them into being as close to the root ideas as possible.

If you already get it, and you just want to know what to do tactically, start mapping repetitive tasks that are done (especially poorly) within businesses, and either learn a framework/company that solves that problem using AI or learn the raw tools yourself and build your own frameworks.

Fun times. We’ve already got the rise of authoritarianism, countries pulling inward, and the rise of inequality. And now we’re adding human work replacement to the mix.

The next 15 years is going to be a hoot.

Notes

I am working on a counter follow-up post that shows a positive and optimistic trend that could come from all this, once the part above happens.

There will still be lots of jobs that are resistant to this push. Physical work, managing human teams where human interaction is a key part of retention, etc.

If you want to tell me how stupid I am, I’m @danielmiessler on Twitter.