A Collection of Thoughts and Predictions About AI (June 2024)

I have been talking about AI and making limited predictions about it for nearly 10 years now, but some new ideas and thoughts have come out recently that I want to comment and expand on.

I'm specifically speaking of the wondrous conversation between Leopold Aschenbrenner and Dwarkesh Patel, and the collection of essays Leopold recently released on the topic of AI safety (my summary here).

That’s what kicked off a bunch of ideas, and I now have so many I need to make a list.

So that’s what I’m going to cover in this long post, done in the jumping style of my annual Frontview Mirror pieces that I do for members.

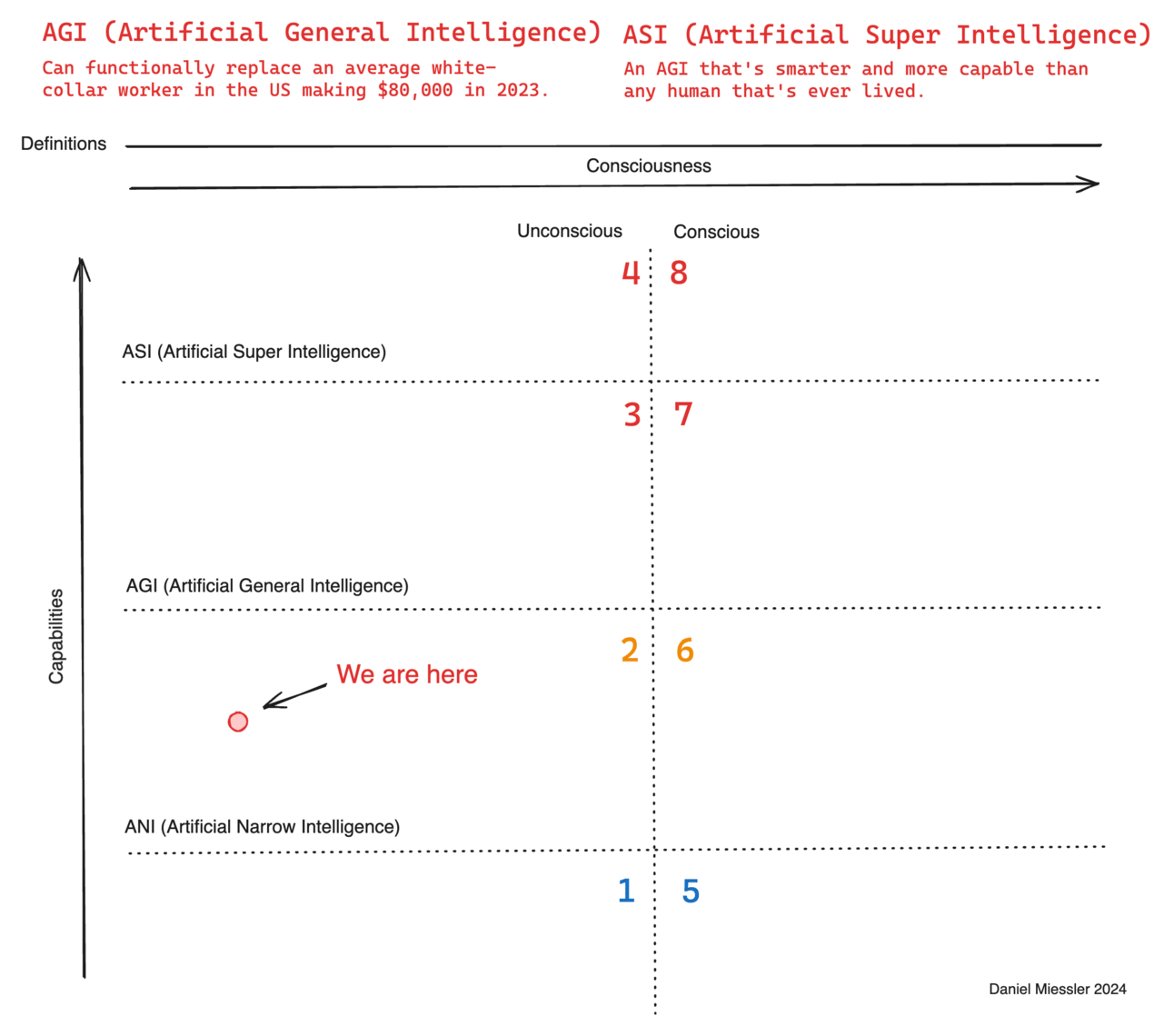

How I see the ladder path to ASI / Consciousness

How I see the AI paths of development

Starting with the ladder image above, here are a few things to mention.

The numbers are just zones to refer to

Consciousness is just a toggle in this model. Either it happens or it doesn’t, and we have no idea right now if/when it will

Importantly, whether or not it happens doesn’t (appear to) affect whether we keep ascending on the left side (without consciousness), which seems to be the most likely path and has most of the same implications for danger to humanity as being on the right side

What’s most important about this is that we separate in our minds whether AI becomes conscious from whether or not it can destroy us, or be used to destroy us. It can stay completely on the left (most likely) and still do that.

Another way to think about the vertical scale is IQ numbers. So like 100 is a big crossover point, and we’re getting very close in narrow AI, but I don’t think the 100 mark really matters until we have AGI.

That’s basically a pretty smart human. Now technically, using my white-collar job definition, you could ask whether that requires a higher IQ. Not sure, and not sure it matters much.

What matters more is that the ASI jump is something around 250 IQ. So we’re talking about the ability to instantly replicate John von Neumann level intelligences. Or Einstein’s. Or whatever. Even more insane is that once you can do that, it’s probably not going to hit that and struggle to get to 300. It’ll be arbitrary jumps that look more like 153, and then 227, and then 390, and then 1420, or whatever.

Not actually predicting that, just saying that small improvements in models or architecture or whatever could have outsized returns that manifest as very large jumps in this very human metric of IQ. Or to put it another way, IQ will quickly become a silly metric to use for this, since it’s based on how smart you are at fairly limited tasks relative to a human age.

Reacting to Leopold and Dwarkesh's conversation

Again, you must watch this if you haven’t seen it. If you like this stuff, it’s the best 4.5 hours you can possibly spend right now.

A few points:

Leopold is absolutely right about the inability of startups to defend against state-level attempts to steal IP, like model weights.

I also agree with Leopold that while the US government with sole control of AI superintelligence is scary as hell, it's NOTHING compared to 1) everyone having it, 2) private AI companies having it, or 3) China having it—especially since 1 or 2 also means 3. So the best path is to literally try to make the US scenario happen.

Thoughts on the ladder to AGI and then to ASI

I was talking recently with a buddy about theoretical, sci-fi stuff around the path to ASI. We started out disagreeing but ended up in a very similar place. Much like what happened with Dwarkesh and Leopold, actually.

My intuition—and it really is just an intuition—is that the path to ASI is constructed from extremely base components. This is actually a difficult concept but I’m going to try to capture it. We tried to record the conversation while walking but our tech failed us.

So here’s my argument in a haphazard sort of stream of consciousness version. I’ll make a standalone essay with a cleaner version once I hammer it out here.

deep breath

There are only so many components to fully understanding the world

Some of these are easily attainable and some are impossible

An impossible one, for example, would be knowing all states—of all things—at all times. That’s god stuff.

The more realistic and attainable things are abstractions of that

My argument / intuition is that there are likely abstractions of those combinations/interactions that we haven’t yet attained

The analogy I used was fluid dynamics, where we can make lots of predictions about how fluids move using an emergent type of physics that is separate from the physics of the substrate (Newtonian and quantum).

I gave Newtonian as an example of an abstraction of quantum as well.

My friend’s argument, which I thought was quite smart, was that there are limits to what we can do even with superintelligences because of the one-way nature of calculation. So basically, the P vs. NP problem. Like, we can TRY lots of things and see if they work, but that’s nothing like testing all the options and finding the best one.

He came up with a great definition for intelligence there, which was the ability to reduce the search space for a problem. So like pruning trees.

Like if there are 100 trillion options, what can we do to get that down to 10? And how fast? I thought that was a pretty cool definition.
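To make that concrete, here is a rough sketch of the idea, not anything we actually wrote during the conversation. It uses subset-sum (pick numbers from a list that add up to a target) as a stand-in problem, which is my own choice of illustration. All the function names are hypothetical. Checking one proposed answer is cheap, trying every option blows up fast, and a bit of "intelligence" in the form of pruning cuts down how much of the tree you have to visit.

```python
from itertools import combinations


def verify(candidate, target):
    """Checking one proposed answer is cheap: just add it up."""
    return sum(candidate) == target


def brute_force(numbers, target):
    """Try every subset, smallest first. Returns (solution, candidates_checked)."""
    checked = 0
    for size in range(len(numbers) + 1):
        for subset in combinations(numbers, size):
            checked += 1
            if verify(subset, target):
                return list(subset), checked
    return None, checked


def pruned_search(numbers, target):
    """Depth-first search that abandons branches that cannot reach the target.

    Assumes every number is positive (an assumption of this sketch).
    Returns (solution, nodes_visited).
    """
    nums = sorted(numbers, reverse=True)
    # suffix_sums[i] is the most we could still add using nums[i:].
    suffix_sums = [0] * (len(nums) + 1)
    for i in range(len(nums) - 1, -1, -1):
        suffix_sums[i] = suffix_sums[i + 1] + nums[i]
    visited = 0

    def dfs(i, remaining, chosen):
        nonlocal visited
        visited += 1
        if remaining == 0:
            return list(chosen)
        # Prune: out of numbers, overshot, or not enough left to get there.
        if i == len(nums) or remaining < 0 or suffix_sums[i] < remaining:
            return None
        chosen.append(nums[i])
        found = dfs(i + 1, remaining - nums[i], chosen)
        if found is not None:
            return found
        chosen.pop()
        return dfs(i + 1, remaining, chosen)

    return dfs(0, target, []), visited


if __name__ == "__main__":
    numbers = [3, 34, 4, 12, 5, 2, 27, 19, 8, 41, 16, 23]
    target = sum(numbers)  # only the full set works, the worst case for brute force
    print(brute_force(numbers, target)[1], "candidates checked by brute force")
    print(pruned_search(numbers, target)[1], "nodes visited with pruning")
```

The pruning rule here is deliberately simple. The point is only that a better rule for throwing away branches shrinks the search, which is the sense of "intelligence" my friend was getting at.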

And that reminds me a lot of quantum computing, which I hardly ever find myself thinking about. And encryption, which my buddy brought up.

Anyway.

Here are the components I see leading to Superintelligence, with AGI coming along somewhere before:

A super-complex world model of physical interactions. (Like how the cell works, how medicines interact with cells, etc.)

So think of that at all levels of physics. Quantum (as much as possible for rule descriptions). Atomic? Molecules. Cells. Whatever. And in some cases it’s just feeding it our current understanding as text, but even better it’s lots of actual recordings of it happening. Like particle accelerator results, cell-level recordings, etc.

The use-case I kept using was solving human aging.

So the first component is this super-deep world model of, essentially, physics, but at multiple levels of abstraction

Next is patterns, analogies, metaphors, etc. Finding the links between things, which we know GPT-4 et al. are already really good at.

Next is the size of the working memory. I’m not sure exactly what that means technically, but it’s something like actual memory (computer memory), combined with context windows, combined with something like an L2 tier in the form of a much-better RAG, etc.

So it’s like, how much of the universe and its knowledge of it can this thing see at once as it’s working on a problem?

I think those are the main things that matter. It might just be those three.

My friend pointed out at this point that it won’t be nearly enough. And that model sizes don’t get you there. The post-training is super crucial because it’s where you teach it associations and stuff.

The post-training glue was basically the only miscommunication/sticking point. His point makes total sense to me, and that brought us back in line with each other.

Although I’m not saying he agreed with my hard stance here, which I’ll now restate. And btw, I’m not sure I believe it either, but I think it’s fascinating and possible.

MY HYPOTHESIS: There are very few fundamental components that allow AI to scale up from narrow AI to AGI to ASI. Those are:

1) A world-model of a sufficient depth towards quantum (or fundamental, whatever that is) truth.

2) Sufficient training examples to allow a deep enough ability to find patterns and similarities between phenomena within that world model.

3) Sufficient working memory of various tier levels so that the system can hold enough of the picture in its mind to find the patterns.

4) The ability to model the scientific method and simulate tests at a sufficient level of depth/abstraction to test things effectively, which requires #1 most of all.

#4 is the one I’m least confident in because it could be a pure P/NP type limitation where you can test a particular solution but not simulate all of them in a meaningful way.

The other thing to say is that #4 might just be implied somehow in a sufficiently advanced AI that has #1. So all of its training would naturally bring it to the scientific method, but that’s out of my depth in neural net knowledge and the types of emergence that are possible at larger model sizes.

In short, I guess what I’m saying is that the crux of the whole thing might be two big things: the complexity of the world model, and the size of the active memory it’s able to use. Because perhaps the pattern matching comes naturally as well.

I suppose the most controversial thing I’m positing here is that the universe has an actual complexity that is approachable. And that models only need to cross a certain threshold of depth of understanding / abstraction to become near-Laplacian-demon-like.

So they don’t need to have full Laplacian knowledge, which I doubt is even possible. But if they hit a certain threshold of depth or abstraction quality, it’s functionally very similar.

Ok, I think that was it. I think I captured it decently well.

Human 3.0

I have a concept called Human 3.0 that I've been talking about here for a while so I might as well expand on it a little. It basically looks at a few different aspects of human development, such as:

How many people believe they have ideas that are useful to the world

How many people believe they could write a short book that would be popular in the world

The percentage of a person's creativity they are actively using in their life and producing things from

The percentage of a person's total capabilities that is published in a professional profile, e.g., a resume, CV, or LinkedIn profile

In short, the degree to which someone lives as their full-spectrum self

In Human 2.0, people have bifurcated lives. There's a personal life where they might be funny, or caring, or nurturing, or good at puzzles, or whatever. But those things generally don't go on resumes because they're not useful to 20th century companies. So, something like 10% of someone's total self is represented in a professional profile. More for some, less for others.

The other aspect of Human 2.0 is that most people have been educated to believe that there are a tiny number of people in the world who are capable of creating useful ideas, art, creativity, etc. And everyone else is just a "normie". As a result of this education, it ends up being true. Very few people grow up thinking they can write a book. What would they even say? Why would anyone read that? This is legacy thinking based in a world where corporations and capitalism are what determine the worth of things.

So that's two main things:

1. Bifurcated lives broken into personal and professional, and

2. The percentage of people who think they have something to offer the world

Human 3.0 is the transition to a world where people understand that everyone has something to offer the world, and they live as full-spectrum humans. That means their public profile is everything about them. How caring they are, how smart, their sense of humor, their favorite things in life, their best ideas, the projects they're working on, their technical skills, etc. And they know deep in themselves that they are valuable because of ALL of this, not just what can make money for a corporation.

Human 3.0 might come after a long period of AGI and stability, or it might get instituted by a benign superintelligence that we have running things. Or it just takes over and runs things without us controlling it much.

UBI and immigration

One thing I've not heard anyone talk about is the interaction between UBI and immigration.

A big problem in the US is that many of the most productive people in a given city are undocumented immigrants. They're the ones busting their asses from sun-up to sunset (and then often doing another job on top of that). When their jobs start getting taken, how is the government going to send them money for UBI?

You may say "screw em" because they're here illegally, but they're largely keeping a lot of places afloat. Construction, food prep, food delivery, cleaning services, and tons of other jobs. Imagine those jobs getting Thanos-snapped away. Or the work, actually. The jobs will be there in some cases, but the economics don't work the same when it's a much lazier American doing the work much slower, at a much lower quality level, complaining all the time, demanding more money, etc.

And what happens to the people who have been doing that work the whole time? We just deport them? Doesn't seem right. Or you could say all those people become eligible for UBI, and we need to find a way to pay them.

My thoughts on Conscious AI

In September of 2019 I wrote a post called:

An Idea on How to Build a Conscious Machine

danielmiessler.com/blog/idea-build-conscious-machine

In it I argue that it might be easier than we think to get consciousness in AI, if I’m right about how we got it as humans.

My theory for how humans got it is that it was adaptive for helping us accelerate evolution. So here’s the argument, which I’m now updating in real time for 2024.

Winning and losing is what powers evolution

Blame and praise are smaller versions of winning and losing

Plants and insects win and lose as well, evolutionarily, but evolution might have given us subjective experiences so that we feel the difference between these things

It basically superpowers evolution if we experience winning and losing, being praised and blamed, etc., at a visceral level vs. having no intermediary repercussions for not doing well.

So. If that’s right, then consciousness might have simply emerged from evolution as our brains got bigger and we became better at getting better.

And this could either happen again naturally with larger model sizes + post-training / RLHF-like loops, or we could specifically steer it in that direction and have it emerge as an advantage.

Some would argue, "Well, that wouldn’t be the real consciousness." But I don’t think there’s any such thing as fake consciousness, or at least not from the inside.

If you feel yourself feeling, and it actually hurts, it’s real. It doesn’t matter how mechanistic the lower substrate is.

So we’ll need to watch very carefully for that as AIs get more complex.

ASI prediction

I am firm on my AGI prediction of 2025-2028, but I’m far less sure on ASI.

I’m going to give a soft prediction of 2027-2031.

Unifying thoughts

Ok, so, trying to tie this all together.

Leopold is someone to watch on AI safety. His is simply the best cohesive set of concerns and solutions I’ve heard so far, but I’m biased because they match my own. Go read the essays he released on it.

Forgot to mention this, but Dwarkesh is quickly becoming one of my favorite people to follow, along with Tyler Cowen. And the reason is that they’re both—like me—broad generalists obsessed with learning in multiple disciplines. Not putting myself in the same league as Cowen, of course, and he has extreme depth on Economics, but my thing has always been finding the patterns between domains as well. And listening to both of them has got me WAY more interested in economics now.

TL;DR: Follow the work of Dwarkesh and Cowen.

China is an extraordinary risk to humanity if they get ASI first.

The US must win that battle, even though us having it is dangerous as well. It’s just less dangerous than China, and by a lot.

My positive Human 3.0 world could be at risk from multiple angles, e.g., China gets ASI, we get ASI and build a non Human 3.0 society, or ASI takes over and builds a society that’s not Human 3.0.

I think the best chances for Human 3.0, and the reasons I’m still optimistic, are:

We get AGI but not ASI for a while

We get ASI but it’s controlled by the US and benign US actors move us towards Human 3.0

ASI takes over, but is benign, and basically builds Human 3.0 because that’s the best future for humanity (until Human 4.0, which I’m already thinking about)

I don’t really care how unlikely those positive scenarios are. I’d put them at around 20-60%? The other options are so bad that I don’t want to waste my time thinking about them.

I’m aiming for Human 3.0 and building towards it, and will do whatever I can to help make it be the path that happens.
