Predicting Human Behavior by Combining Public Sensor Data with Machine Learning

This is part of my Real Internet of Things (tRIOT) Series—an extension of my book The Real Internet of Things—about my perspectives on the future of technology and its intersection with society.

In The Real Internet of Things I wrote a chapter about Realtime Data and how there will be countless ways to acquire it.

Then at CES a couple of weeks ago I saw movement toward that concept, with sensors of all types being placed everywhere. Cars, homes, workplaces, etc. And there were countless systems for gathering the data in order to do something interesting with it as well.

In my model there are three basic components to the Information Architecture:

Realtime Data –> Algorithms –> Presentation

Realtime Data is all about the current state of the world as seen in as many contexts and from as many perspectives as possible.

Algorithms are what make sense of that world state data.

And the Presentation phase takes the algorithmic output and converts it into a usable form for others, which could be humans or could be machines.
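To make the three stages concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `Reading` shape, the averaging "algorithm", and the text output are placeholders, not something from the book.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    """One realtime observation (hypothetical shape, for illustration only)."""
    sensor: str    # e.g. "sound"
    value: float   # raw measurement in whatever unit the sensor reports


def algorithm(readings):
    """Algorithm stage: reduce raw readings to a per-sensor average."""
    by_sensor = {}
    for r in readings:
        by_sensor.setdefault(r.sensor, []).append(r.value)
    return {sensor: mean(values) for sensor, values in by_sensor.items()}


def present(summary):
    """Presentation stage: turn the algorithmic output into readable lines."""
    return [f"{sensor}: {avg:.1f}" for sensor, avg in sorted(summary.items())]


# Realtime Data stage: a handful of made-up readings.
readings = [Reading("sound", 60.0), Reading("sound", 70.0), Reading("light", 300.0)]
report = present(algorithm(readings))  # ["light: 300.0", "sound: 65.0"]
```

The point is only the shape: the consumer of `report` could just as easily be another machine as a human.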

Sensor types

We’ve heard lots about various sensor types over the years, although we aren’t yet trained to think of them as sensors. A great example is the camera.

This is a point that Benedict Evans makes well, when he talks about how there’s a fundamental difference between a human looking through a lens and getting a snapshot, vs. a computer taking in light constantly.

It’s remarkable when you realize this applies to lots of different kinds of sensors, paired with their legacy names:

Light (cameras)

Sound (microphones)

Air pressure

Gyroscopes

Accelerometers

Chemical detectors

Radioactivity detectors

LIDAR

SONAR

And now, wireless.

Fundamentally these things have much in common. Light sensors are just passive receivers of EM radiation, after all. LIDAR is something similar, except it’s an active system where you’re carefully measuring the time between send and receive.

But a team at MIT has done something similar with wireless that shows how deep this rabbit hole is about to become.

They’re bouncing wireless signals, similar to the Wi-Fi that you’re familiar with, off of objects and then measuring how quickly they come back. Fair enough. Sounds like SONAR. Or LIDAR, or anything else that’s echo-based.
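The echo idea itself is simple arithmetic: distance is wave speed times round-trip time, divided by two. Here is a rough sketch of that general principle. It is not the MIT team's actual signal processing, and the function and constant names are my own.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0   # radio and light (wireless, LIDAR)
SPEED_OF_SOUND_AIR_M_S = 343.0       # approximate, for sound in air near 20 C


def echo_distance(round_trip_seconds, wave_speed_m_s):
    """Distance to a reflector from the time between send and receive.

    The signal travels out and back, so the one-way distance is half
    of the total path covered during the round trip.
    """
    return wave_speed_m_s * round_trip_seconds / 2.0


# A radio echo that returns after 20 nanoseconds is about 3 meters away.
radio_m = echo_distance(20e-9, SPEED_OF_LIGHT_M_S)

# A sound echo that returns after 20 milliseconds is about 3.4 meters away.
sound_m = echo_distance(20e-3, SPEED_OF_SOUND_AIR_M_S)
```

Notice the timing gap: at radio speeds a few meters is a matter of nanoseconds, which gives a sense of how fine the measurement has to be.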

But the accuracy is staggering.

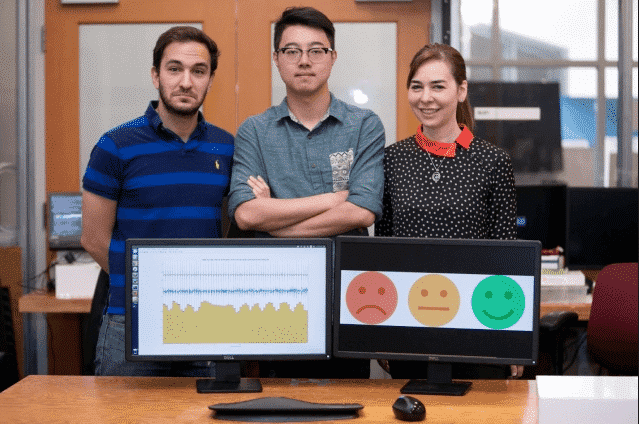

They’re not just identifying objects. They’re able to scan a person and tell you what they’re feeling. The system can detect emotions.

How?

The system is so precise that it can detect minute fluctuations in location, all the way down to facial expressions and heartbeats. And this is from across the room.

They feed that data into machine learning algorithms that tell you what the person is likely feeling based on training data.
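As a toy illustration of "label new measurements based on training data", here is a nearest-centroid classifier. To be clear about the assumptions: I don't know which model the MIT team uses, the two features (heart rate and breathing rate) are my guess at the kind of input involved, and the numbers are invented purely for the example.

```python
from math import dist
from statistics import mean


def train_centroids(samples):
    """Average the feature vectors for each label.

    samples: list of (feature_tuple, label) pairs.
    Returns a dict mapping label -> centroid tuple.
    """
    grouped = {}
    for features, label in samples:
        grouped.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is closest to the new measurement."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Invented training data: (heart rate in bpm, breaths per minute) -> label.
training = [
    ((62.0, 12.0), "calm"),
    ((66.0, 13.0), "calm"),
    ((95.0, 20.0), "excited"),
    ((101.0, 22.0), "excited"),
]
centroids = train_centroids(training)
prediction = classify(centroids, (90.0, 19.0))  # "excited"
```

A real system would need far more data and a far better model, but the flow is the same: measurements in, learned comparison, likely label out.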

Think about that.

But don’t just think about what that one project is doing, with a few people at a university. Think about the implications.

This is one sensor type—an active wireless signal—hooked up to a machine learning system that likely doesn’t have that much data in it. And they can already detect human emotions.

Imagine what happens when entire public areas are being scanned / parsed by ALL THE SENSORS.

Light (passive)

Sound (microphones, directional and omni)

Chemicals

LIDAR (active)

Wireless (active)

SONAR (active)

etc.

…but all that data is being fed directly into machine learning algorithms that have exabytes of training data.

Damn.

I knew what I was going to write before I wrote it, but I’m still knocked silly by the implications here. And no, I’m not talking about abuse and dystopias. There’s plenty of time for that. Let’s just stay focused on the functionality.

What can you know with this combination of [ Sensor + Algorithm + Training Data ] ?

It’s exhilarating and scary to even capture this list, and I’m just capturing a few main points.

You’ll know the current mood and emotional state for everyone moving through the area (walking gait, facial expressions, gesturing, tone of voice, etc.)

You’ll be able to identify relationships between people (proximity to each other, voice patterns, language use)

You’ll know if someone is communicating with someone else covertly (the algorithms will see nods and winks and glances that we would not)

You’ll be able to tell the current state of relationships between people (this couple is fighting, that couple is courting, this person hates that person, etc.)

You’ll have some indication of whether someone is moving with intent to harm someone else, either immediately or possibly in the future.

Using Unsupervised Learning you’ll find cluster matches with all sorts of stuff. For example, even if you don’t know what to look for, the system will tell you that person X is a 99.4% match for the characteristics of someone who went on to blow up a bus nearby 24 hours later.

The humans won’t know what the computer saw, and the neural net might not be able to explain it either, but it might be a good idea to ask that person some questions.
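Here is a small sketch of what "cluster matching without knowing what to look for" can mean in practice: a bare-bones k-means that groups unlabeled points, then reports which group a new observation falls closest to. The two-dimensional points are made up, and k-means is just one common clustering technique that I'm using as a stand-in.

```python
from math import dist
from statistics import mean


def kmeans(points, k, iterations=10):
    """Plain k-means. Centroids start at the first k points so runs repeat."""
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(iterations):
        # Assign every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(centroids[i], p))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its members (keep it if empty).
        centroids = [
            tuple(mean(col) for col in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    return centroids


def nearest_cluster(centroids, point):
    """Return (cluster index, distance) for the closest centroid."""
    index = min(range(len(centroids)), key=lambda i: dist(centroids[i], point))
    return index, dist(centroids[index], point)


# Made-up, unlabeled observations that happen to form two groups.
observations = [(1.0, 1.0), (9.0, 9.0), (1.0, 2.0), (8.0, 9.0), (2.0, 1.0), (9.0, 8.0)]
centroids = kmeans(observations, k=2)
cluster_index, distance = nearest_cluster(centroids, (8.5, 8.5))
```

Nobody told the code what the groups mean. It only says "this new point looks like those points", which is exactly why the output would call for questions rather than conclusions.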

Endgame

And ultimately what are we talking about here? What’s the Grail for all machine learning?

Prediction.

Imagine Grand Central Station with an AR display over everyone’s head showing a percentage chance that they’re going to engage in violent terrorist behavior within 72 hours.

There’s a whole sea of “Not Likely” floating over people’s heads, through the AR police visors worn by the officers in the station.

Then they spot a group of three people walking together and over their heads it says, “Moderate.” And suddenly a line forms between them and someone across the room who has a red, “Likely” above his head.

And now the officers swoop in.

Faster than you think

This isn’t science fiction. Not really. It’s still a ways out, but not nearly as far as most think. What’s crazy is that you could be running hundreds of algorithms simultaneously, using many of the different sensor types.

You could do one based only on language spoken combined with the way they walk. Or the clothing style combined with body language. Who knows which correlations will yield the most?
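The "hundreds of algorithms" number isn't a stretch once you count combinations. Here is a quick sketch using the six sensor types listed earlier. The helper name is mine, and a real deployment would pair each combination with one or more actual models.

```python
from itertools import combinations

SENSORS = ["light", "sound", "chemicals", "lidar", "wireless", "sonar"]


def sensor_subsets(sensors, min_size=1):
    """Yield every combination of sensors with at least min_size members."""
    for size in range(min_size, len(sensors) + 1):
        yield from combinations(sensors, size)


pairs = list(combinations(SENSORS, 2))      # 15 two-sensor pairings
all_subsets = list(sensor_subsets(SENSORS))  # 63 non-empty combinations
```

Six sensor types already give 63 distinct combinations, so running even a handful of algorithms per combination lands you in the hundreds.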

And it won’t just be security. Think about the marketing angle. Or the social engineering angle.

You can find gullible people. People who are givers. People who have money. Lonely people. Overconfident people.

It will be a veritable treasure of human insight, all of which will be mapped directly to goals and recommendations by software. Software for con-people. Marketers. Spies. Law enforcement. Single people. Whatever.

The more we know about human behavior, which will quickly become an unbelievable amount due to machine learning, the more we’ll be able to extract from even small snippets of time, and even from a few sensor types.

24/7 access to dozens of sensors? Oh. My. God.

Summary

We need to be re-thinking input devices.

It’s not about cameras and microphones. It’s about light and sound (and all the other input types).

Then start making a list of all the sensors that are possible.

Put active technologies like LIDAR, SONAR, Wireless on the list.

Realize that this data, when fed to ML algorithms, is going to create an explosion of human behavior insight.

This insight will be put to use for the ultimate use case, which is prediction.

It’ll be combined with purpose-built solutions that match target to opportunist.

This combination of sensors, algorithms, and human behavior knowledge is going to be one of the most important technological developments of our time, and it is going to merge seamlessly into the Internet of Things.

Exhilarating. Terrifying. Inevitable.

Notes

If you can think of other use cases please let me know and I’ll add them here.
